Yaser Sheikh

Most Recent Affiliation(s):

- Facebook Reality Labs, Carnegie Mellon University, Disney Research Pittsburgh

Other / Past Affiliation(s):

- Carnegie Mellon University

- META Corporation

Bio:

2019

Yaser Sheikh directs the Facebook Reality Lab in Pittsburgh, which is devoted to achieving photorealistic social interactions in AR and VR, and is an adjunct professor at Carnegie Mellon University. His research focuses broadly on the machine perception and rendering of social behavior, spanning sub-disciplines in computer vision, computer graphics, and machine learning. With colleagues and students, he has won the Honda Initiation Award (2010), Popular Science’s “Best of What’s New” Award, the best student paper award at CVPR (2018), best paper awards at WACV (2012), SAP (2012), SCA (2010), and ICCV THEMIS (2009), and the best demo award at ECCV (2016), and his work was a best paper finalist at CVPR (2019). He also received the Hillman Fellowship for Excellence in Computer Science Research (2004). Yaser has served as a senior committee member at leading conferences in computer vision, computer graphics, and robotics, including SIGGRAPH (2013, 2014), CVPR (2014, 2015, 2018), ICRA (2014, 2016), and ICCP (2011), and as an Associate Editor of CVIU. His research has been featured by media outlets including The New York Times, BBC, MSNBC, and Popular Science, and by technology media such as WIRED, The Verge, and New Scientist.

Learning Category: Jury Member:

Experience(s):

Learning Category: Presentation(s):

Type: [Technical Papers]

Authentic volumetric avatars from a phone scan Presenter(s): [Cao] [Simon] [Kim] [Schwartz] [Zollhoefer] [Saito] [Lombardi] [Wei] [Belko] [Yu] [Sheikh] [Saragih]

[SIGGRAPH 2022]

Type: [Frontiers]

Frontier Talk: Metric Telepresence using Codec Avatars Presenter(s): [Sheikh]

[SIGGRAPH 2022]

Type: [Courses]

State of the art in telepresence Presenter(s): [Lawrence] [Pan] [Goldman] [McDonnell] [Robillard] [O'Sullivan] [Sheikh] [Zollhoefer] [Saragih]

Entry No.: [14]

[SIGGRAPH 2022]

Type: [Technical Papers]

Deep relightable appearance models for animatable faces Presenter(s): [Bi] [Lombardi] [Saito] [Simon] [Wei] [Mcphail] [Ramamoorthi] [Sheikh] [Saragih]

[SIGGRAPH 2021]

Type: [Technical Papers]

Driving-signal aware full-body avatars Presenter(s): [Bagautdinov] [Wu] [Simon] [Prada] [Shiratori] [Wei] [Xu] [Sheikh] [Saragih]

[SIGGRAPH 2021]

Type: [Technical Papers]

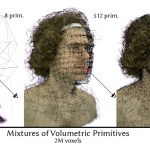

Mixture of volumetric primitives for efficient neural rendering Presenter(s): [Lombardi] [Simon] [Schwartz] [Zollhoefer] [Sheikh] [Saragih]

[SIGGRAPH 2021]

Type: [Technical Papers]

Real-time 3D neural facial animation from binocular video Presenter(s): [Cao] [Agrawal] [Torre] [Chen] [Saragih] [Simon] [Sheikh]

[SIGGRAPH 2021]

Type: [Technical Papers]

The Eyes Have It: An Integrated Eye and Face Model for Photorealistic Facial Animation Presenter(s): [Schwartz] [Wei] [Wang] [Lombardi] [Simon] [Saragih] [Sheikh]

[SIGGRAPH 2020]

Type: [Technical Papers]

Neural volumes: learning dynamic renderable volumes from images Presenter(s): [Lombardi] [Simon] [Saragih] [Schwartz] [Lehrmann] [Sheikh]

[SIGGRAPH 2019]

Type: [Technical Papers]

VR facial animation via multiview image translation Presenter(s): [Wei] [Saragih] [Simon] [Harley] [Lombardi] [Perdoch] [Hypes] [Wang] [Badino] [Sheikh]

[SIGGRAPH 2019]

Type: [Technical Papers]

Deep appearance models for face rendering Presenter(s): [Lombardi] [Saragih] [Simon] [Sheikh]

Entry No.: [68]

[SIGGRAPH 2018]

Type: [Technical Papers]

Gaze-Driven Video Re-Editing Presenter(s): [Jain] [Sheikh] [Shamir] [Hodgins]

[SIGGRAPH 2015]

Type: [Technical Papers]

3D object manipulation in a single photograph using stock 3D models Presenter(s): [Kholgade] [Simon] [Efros] [Sheikh]

[SIGGRAPH 2014]

Type: [Technical Papers]

Automatic editing of footage from multiple social cameras Presenter(s): [Arev] [Park] [Sheikh] [Hodgins] [Shamir]

[SIGGRAPH 2014]

Type: [Technical Papers]

Bilinear Spatiotemporal Basis Models Presenter(s): [Akhter] [Simon] [Khan] [Matthews] [Sheikh]

[SIGGRAPH 2012]

Learning Category: Moderator:

Type: [Technical Papers]

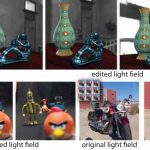

How do people edit light fields? Presenter(s): [Jarabo] [Masia] [Bousseau] [Pellacini] [Gutierrez]

[SIGGRAPH 2014]

Type: [Technical Papers]

Modeling and optimizing eye vergence response to stereoscopic cuts Presenter(s): [Templin] [Didyk] [Myszkowski] [Hefeeda] [Seidel] [Matusik]

[SIGGRAPH 2014]

Type: [Technical Papers]

Simulating and compensating changes in appearance between day and night vision Presenter(s): [Wanat] [Mantiuk]

[SIGGRAPH 2014]

Type: [Technical Papers]

Style transfer for headshot portraits Presenter(s): [Shih] [Paris] [Barnes] [Freeman] [Durand]

[SIGGRAPH 2014]

Type: [Technical Papers]

Transient attributes for high-level understanding and editing of outdoor scenes Presenter(s): [Laffont] [Ren] [Tao] [Qian] [Hays]

[SIGGRAPH 2014]

Type: [Technical Papers]

3D shape regression for real-time facial animation Presenter(s): [Cao] [Weng] [Lin] [Zhou]

[SIGGRAPH 2013]

Type: [Technical Papers]

Online modeling for realtime facial animation Presenter(s): [Bouaziz] [Wang] [Pauly]

[SIGGRAPH 2013]

Role(s):

- Course Presenter

- Frontiers Presenter

- Studio (SIGGRAPH Lab) Presenter

- Technical Paper Moderator

- Technical Paper Presenter

- Technical Papers Jury Member