“Movie editing and cognitive event segmentation in virtual reality video” by Serrano, Sitzmann, Ruiz-Borau, Wetzstein, Gutierrez, et al. …

Title:

- Movie editing and cognitive event segmentation in virtual reality video

Session/Category Title: People Power

Abstract:

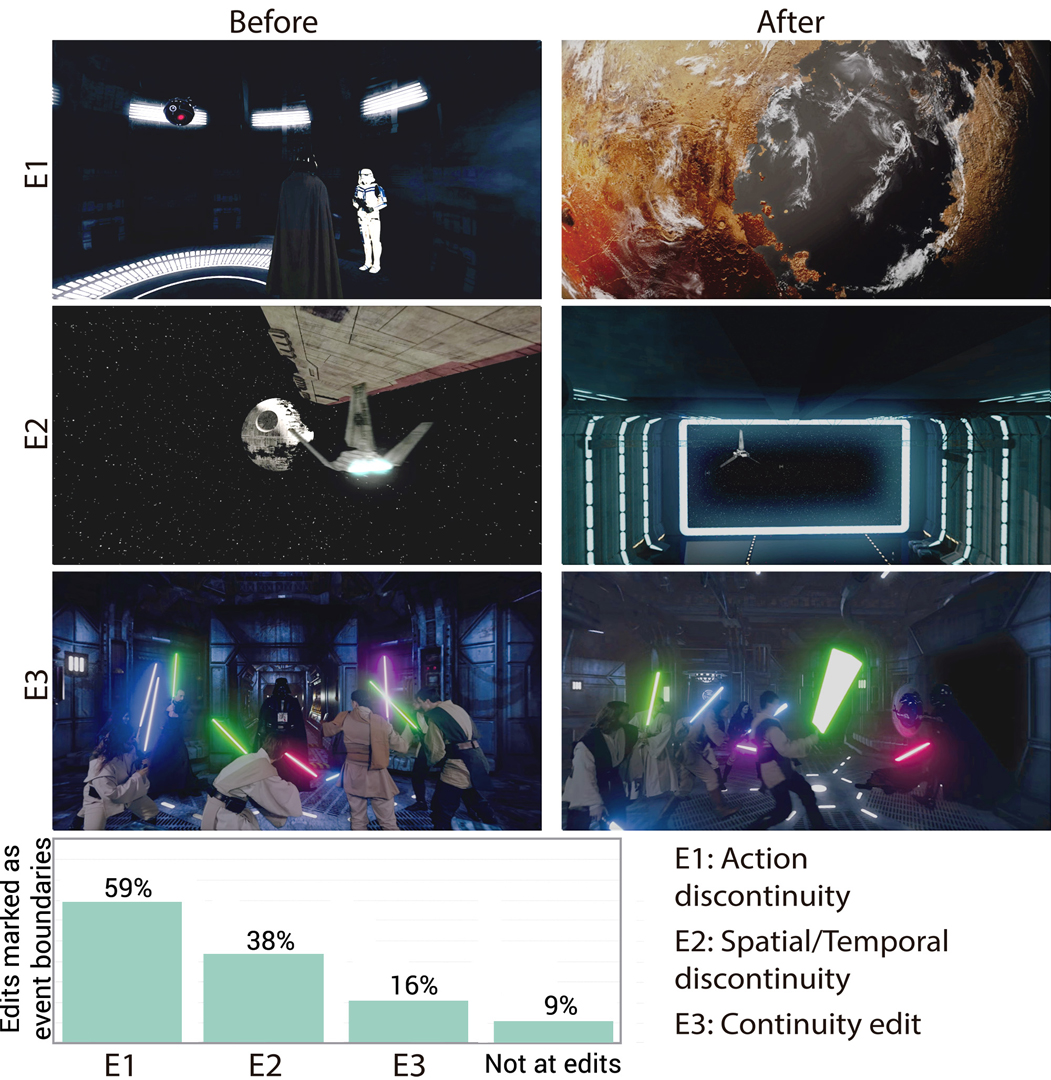

Traditional cinematography has relied for over a century on a well-established set of editing rules, called continuity editing, to create a sense of situational continuity. Despite massive changes in visual content across cuts, viewers in general experience no trouble perceiving the discontinuous flow of information as a coherent set of events. However, Virtual Reality (VR) movies are intrinsically different from traditional movies in that the viewer controls the camera orientation at all times. As a consequence, common editing techniques that rely on camera orientations, zooms, etc., cannot be used. In this paper we investigate key relevant questions to understand how well traditional movie editing carries over to VR, such as: Does the perception of continuity hold across edit boundaries? Under which conditions? Does viewers’ observational behavior change after the cuts? To do so, we rely on recent cognition studies and the event segmentation theory, which states that our brains segment continuous actions into a series of discrete, meaningful events. We first replicate one of these studies to assess whether the predictions of such theory can be applied to VR. We next gather gaze data from viewers watching VR videos containing different edits with varying parameters, and provide the first systematic analysis of viewers’ behavior and the perception of continuity in VR. From this analysis we make a series of relevant findings; for instance, our data suggests that predictions from the cognitive event segmentation theory are useful guides for VR editing; that different types of edits are equally well understood in terms of continuity; and that spatial misalignments between regions of interest at the edit boundaries favor a more exploratory behavior even after viewers have fixated on a new region of interest. In addition, we propose a number of metrics to describe viewers’ attentional behavior in VR. We believe the insights derived from our work can be useful as guidelines for VR content creation.
