“JALI-Driven Expressive Facial Animation and Multilingual Speech in Cyberpunk 2077” by Edwards, Landreth, Popławski, Malinowski, Watling, et al. …

Conference:

Type:

Entry Number: 60

Title:

- JALI-Driven Expressive Facial Animation and Multilingual Speech in Cyberpunk 2077

Presenter(s)/Author(s):

Abstract:

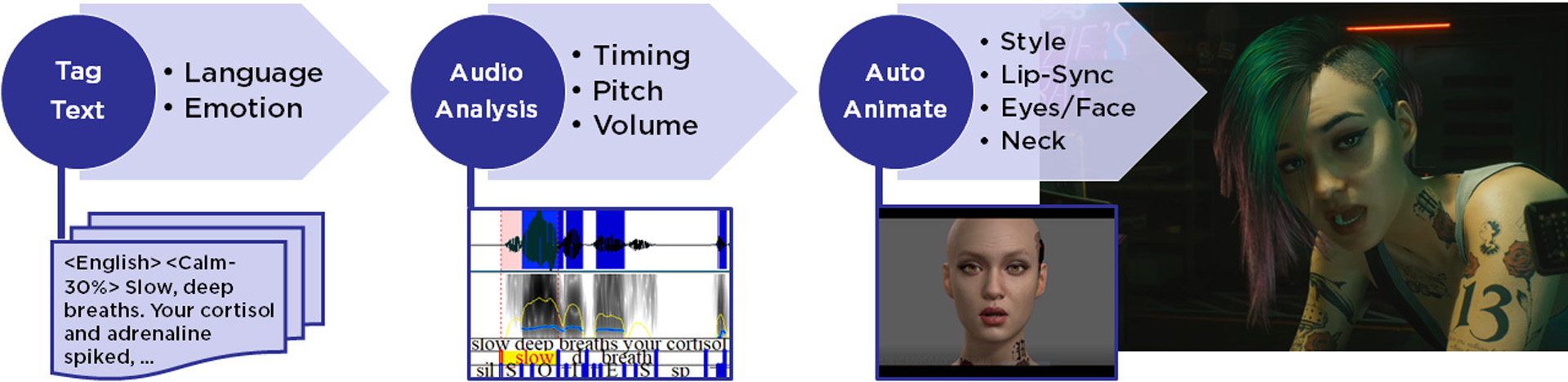

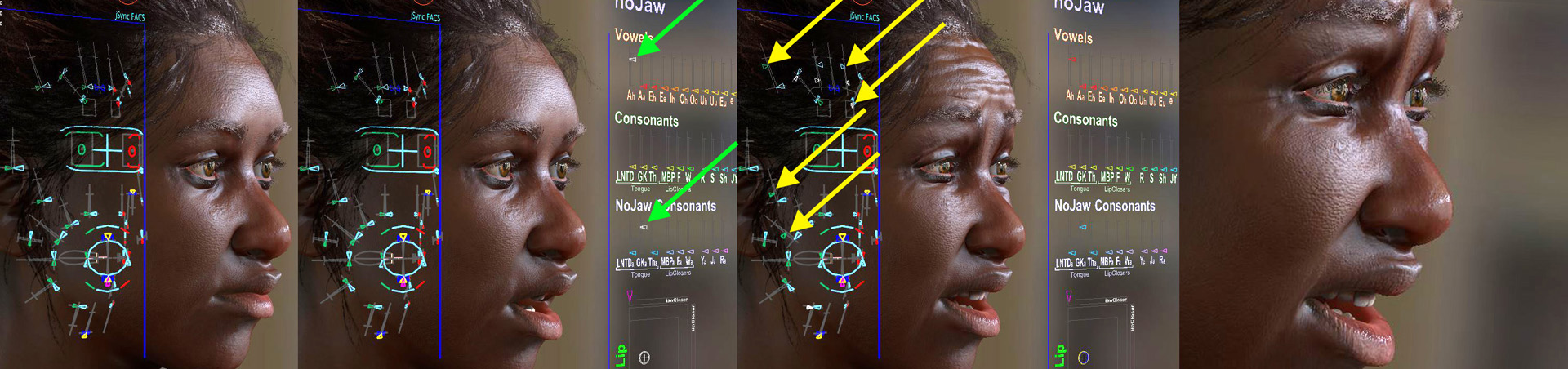

Cyberpunk 2077 is a highly anticipated massive open-world video game with a complex, branching narrative. This talk details new research and innovative workflow contributions, developed by Jali, toward the generation of an unprecedented number of hours of realistic, expressive speech animation in ten languages, often with multiple languages interleaved within individual sentences. The speech animation workflow is largely automatic but remains under animator control, using a combination of audio and tagged text transcripts. We use insights from anatomy, perception, and the psycho-linguistic literature to develop independent and combined language models that drive procedural animation of the mouth and paralingual (speech-supportive non-verbal expression) motion of the neck, brows and eyes. Directorial tags in the speech transcript further enable the integration of performance-capture-driven facial emotion. The entire workflow is animator-centric, allowing efficient key-frame customization and editing of the resulting facial animation on any typical FACS-like face rig. The talk will focus equally on the technical contributions and their integration and creative use within the animation pipeline of the AAA game title Cyberpunk 2077.

References:

Pif Edwards, Chris Landreth, Eugene Fiume, and Karan Singh. 2016. JALI: An Animator-centric Viseme Model for Expressive Lip Synchronization. ACM Trans. Graph. 35, 4 (July 2016), 127:1–127:11.

Paul Ekman. 2004. Emotional and conversational nonverbal signals. In Language, knowledge, and representation. Springer, 39–50.

Frederic I Parke and Keith Waters. 2008. Facial Animation. In Computer Facial Animation. A K Peters Ltd, 1–277.

Daniel Povey et al. 2011. The Kaldi Speech Recognition Toolkit. IEEE 2011 Workshop on Automatic Speech Recognition and Understanding (Dec. 2011).