“A guided synthesizer for blendshape characters” by Ma and Lewis

Title:

- A guided synthesizer for blendshape characters

Session/Category Title: Play Time

Abstract:

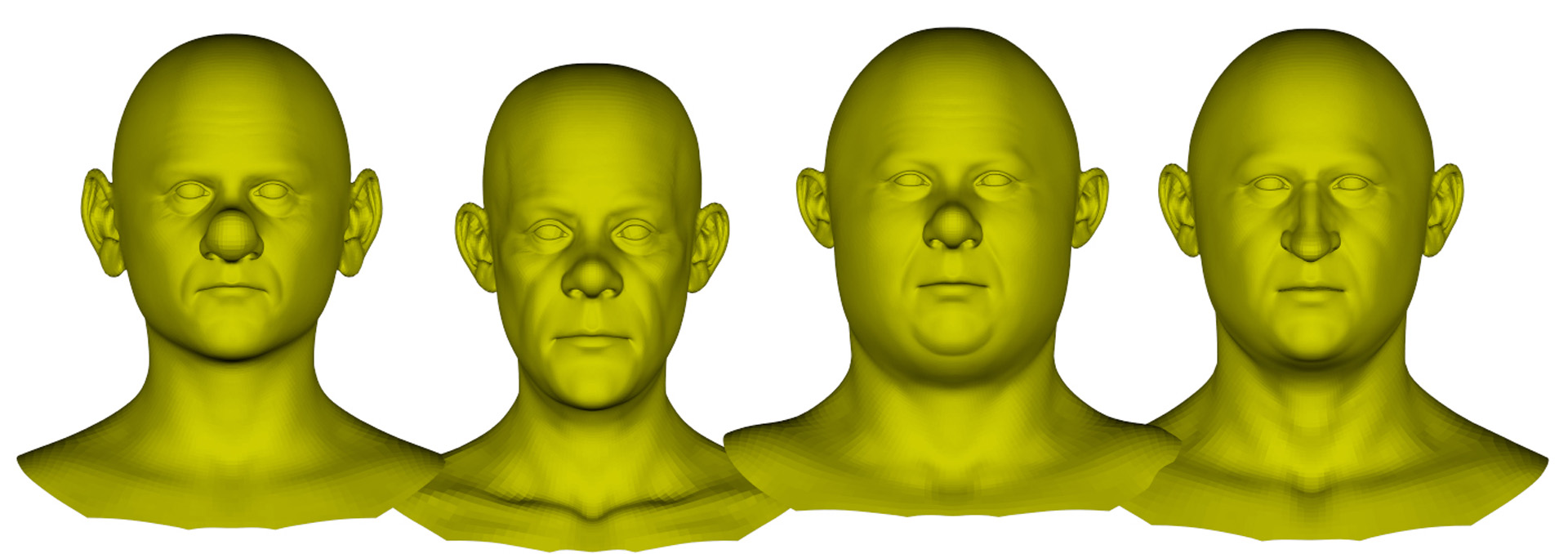

We have designed an interactive editing system that helps users create new characters from a limited number of training characters. The user provides a preliminary shape for the new character. The output of our system is, in a local sense, a convex combination of the existing characters, while staying as close as possible to the user's desired shape. This property ensures that the creation process is guided by the artists' previous work. The system first finds the set of training characters and blending weights that best represents the user's desired shape, then uses edge-based integration to compute the resulting shape that matches this composition.
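The abstract does not specify the optimizer or the exact form of the edge-based integration, so the Python sketch below is one plausible reading, not the authors' method. It assumes that all characters share one mesh topology and that "local" means one weight vector per caller-chosen vertex region (the `regions` argument is hypothetical). It fits convex weights by projected gradient descent onto the probability simplex, blends each edge vector with the averaged weights of its two endpoints, and recovers vertex positions by least-squares edge integration with one anchored vertex, solved here with Gauss-Seidel sweeps. All function names are illustrative.

```python
from typing import List, Sequence, Tuple

Shape = List[List[float]]  # one coordinate list per vertex; all characters share topology (assumption)


def project_to_simplex(v: Sequence[float]) -> List[float]:
    """Euclidean projection of v onto {w : w >= 0, sum(w) = 1} (sort-based method)."""
    cumsum, theta = 0.0, 0.0
    for k, uk in enumerate(sorted(v, reverse=True), start=1):
        cumsum += uk
        t = (cumsum - 1.0) / k
        if uk - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in v]


def fit_convex_weights(training: Sequence[Shape], target: Shape,
                       region: Sequence[int], iters: int = 2000) -> List[float]:
    """Projected gradient descent on 0.5 * ||A w - t||^2 over the simplex, where the
    columns of A are the training characters restricted to the vertices in `region`."""
    cols = [[c for i in region for c in shape[i]] for shape in training]
    t = [c for i in region for c in target[i]]
    K, R = len(cols), len(t)
    lip = sum(c * c for col in cols for c in col) or 1.0  # ||A||_F^2 bounds the gradient's Lipschitz constant
    w = [1.0 / K] * K
    for _ in range(iters):
        resid = [sum(w[k] * cols[k][r] for k in range(K)) - t[r] for r in range(R)]
        grad = [sum(cols[k][r] * resid[r] for r in range(R)) for k in range(K)]
        w = project_to_simplex([w[k] - grad[k] / lip for k in range(K)])
    return w


def integrate_edges(n_verts: int, edges: Sequence[Tuple[int, int]],
                    edge_targets: Sequence[List[float]], anchor: int,
                    anchor_pos: List[float], iters: int = 2000) -> Shape:
    """Find x minimizing sum ||(x_j - x_i) - e_ij||^2 with x[anchor] fixed, using
    Gauss-Seidel sweeps. Assumes the edge graph is connected."""
    dim = len(anchor_pos)
    nbrs: List[List[Tuple[int, List[float]]]] = [[] for _ in range(n_verts)]
    for (i, j), e in zip(edges, edge_targets):
        nbrs[i].append((j, e))                 # x_i should equal x_j - e_ij
        nbrs[j].append((i, [-c for c in e]))   # x_j should equal x_i + e_ij
    x = [[0.0] * dim for _ in range(n_verts)]
    x[anchor] = list(anchor_pos)
    for _ in range(iters):
        for v in range(n_verts):
            if v != anchor and nbrs[v]:
                # Each free vertex moves to the mean of the positions its edges predict.
                x[v] = [sum(x[u][d] - e[d] for u, e in nbrs[v]) / len(nbrs[v])
                        for d in range(dim)]
    return x


def synthesize(training: Sequence[Shape], target: Shape,
               edges: Sequence[Tuple[int, int]], regions: Sequence[Sequence[int]],
               anchor: int = 0) -> Tuple[Shape, List[List[float]]]:
    """Fit convex weights per region, blend edge vectors with them, then integrate."""
    n, K, dim = len(target), len(training), len(target[0])
    vert_w: List[List[float]] = [[] for _ in range(n)]
    for region in regions:
        w = fit_convex_weights(training, target, region)
        for v in region:
            vert_w[v] = w
    if any(not w for w in vert_w):
        raise ValueError("regions must cover every vertex")
    edge_targets = []
    for i, j in edges:
        # Averaging the endpoint weights keeps them convex and blends across region seams.
        wij = [0.5 * (vert_w[i][k] + vert_w[j][k]) for k in range(K)]
        edge_targets.append([sum(wij[k] * (training[k][j][d] - training[k][i][d])
                                 for k in range(K)) for d in range(dim)])
    anchor_pos = [sum(vert_w[anchor][k] * training[k][anchor][d] for k in range(K))
                  for d in range(dim)]
    return integrate_edges(n, edges, edge_targets, anchor, anchor_pos), vert_w
```

In this sketch, training characters whose weight is driven to zero by the simplex projection drop out of the blend, which is one way to read "finds the best set of training characters". Because every weight vector stays on the simplex, a user sketch that lies outside the training set is pulled back toward the nearest locally convex blend rather than extrapolated. The edge-integration step exists because per-region weights disagree at region boundaries; integrating blended edges yields a single consistent mesh instead of seams.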
