“Hands-Free Augmented Reality for Vascular Interventions” by Grinshpoon, Sadri, Loeb, Elvezio, Siu, et al. …

Conference:

- SIGGRAPH 2018

Type(s):

Entry Number: 09

Title:

- Hands-Free Augmented Reality for Vascular Interventions

Presenter(s):

Description:

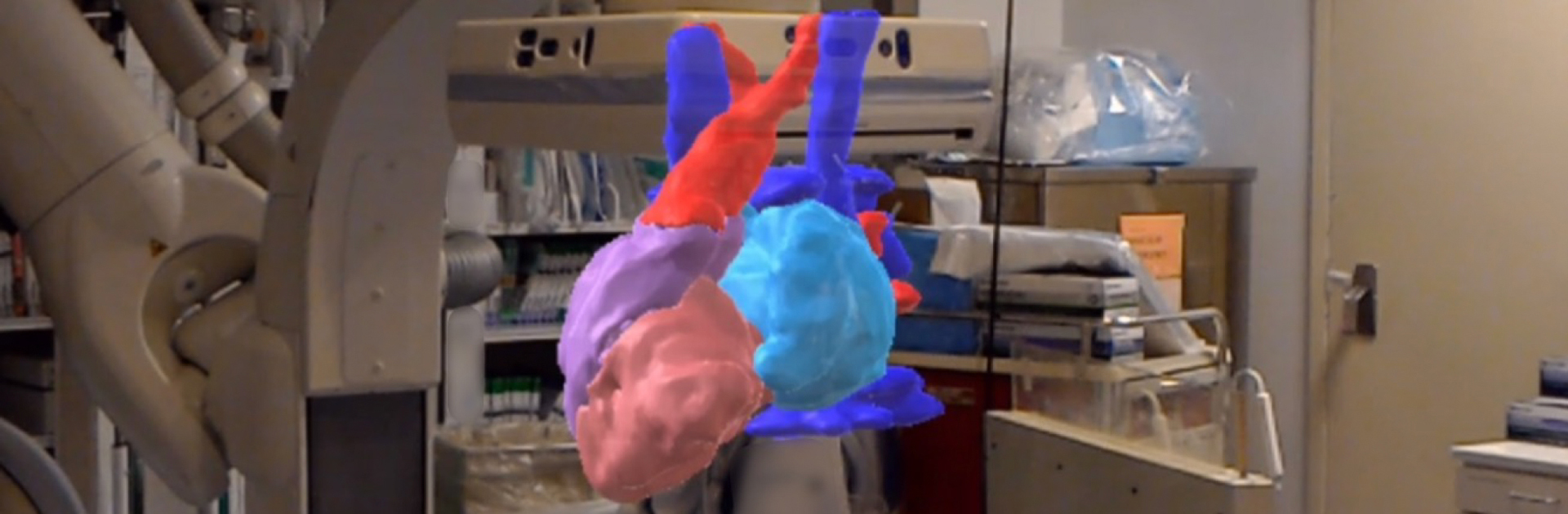

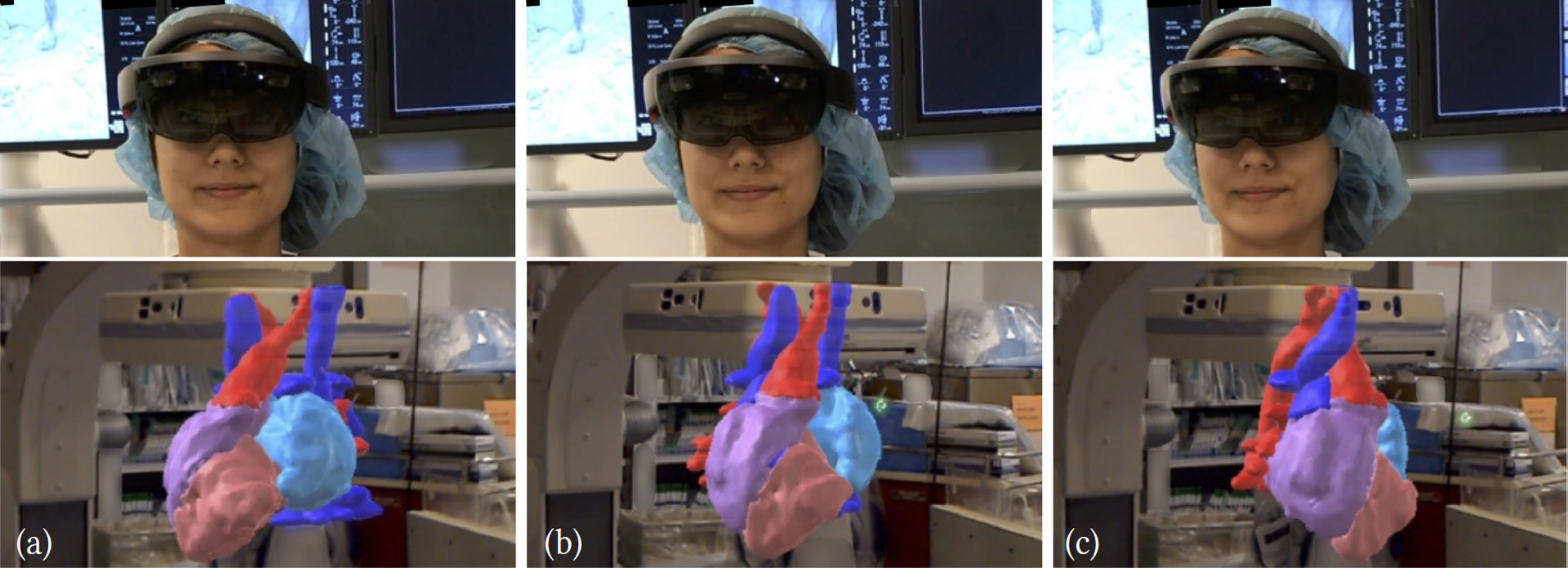

During a vascular intervention (a type of minimally invasive surgical procedure), physicians maneuver catheters and wires through a patient’s blood vessels to reach a desired location in the body. Since the relevant anatomy is typically not directly visible in these procedures, virtual reality and augmented reality systems have been developed to assist in 3D navigation. Because both of a physician’s hands may already be occupied, we developed an augmented reality system supporting hands-free interaction techniques that use voice and head tracking to enable the physician to interact with 3D virtual content on a head-worn display while leaving both hands available intraoperatively. We demonstrate how a virtual 3D anatomical model can be rotated and scaled using small head rotations through first-order (rate) control, and can be rigidly coupled to the head for combined translation and rotation through zero-order control. This enables easy manipulation of a model while it stays close to the center of the physician’s field of view.
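The difference between the two control modes described above can be illustrated with a minimal sketch. This is not the authors' implementation: it is simplified to a 2D plane with a single yaw angle, and the names `rate_control_step`, `couple`, and `coupled_pose`, as well as the gain and dead-zone values, are assumptions chosen only for illustration. In first-order (rate) control, the head's angular offset sets the model's rotation speed; in zero-order control, the model's pose is fixed in the head's frame, so it translates and rotates with the head.

```python
import math


def rate_control_step(model_angle, head_offset, dt,
                      gain=2.0, dead_zone=math.radians(2.0)):
    """First-order (rate) control: head offset sets the model's angular velocity.

    gain and dead_zone are hypothetical values, not taken from the source.
    Angles are in radians, dt in seconds.
    """
    if abs(head_offset) <= dead_zone:
        # Small head movements inside the dead zone leave the model still.
        return model_angle
    # Velocity is proportional to the offset beyond the dead zone.
    effective = head_offset - math.copysign(dead_zone, head_offset)
    return model_angle + gain * effective * dt


def couple(head_pos, head_yaw, model_pos, model_yaw):
    """Record the model's pose in the head's frame when coupling begins."""
    dx = model_pos[0] - head_pos[0]
    dy = model_pos[1] - head_pos[1]
    c, s = math.cos(-head_yaw), math.sin(-head_yaw)
    local_offset = (c * dx - s * dy, s * dx + c * dy)
    return local_offset, model_yaw - head_yaw


def coupled_pose(head_pos, head_yaw, local_offset, local_yaw):
    """Zero-order control: the model follows the head rigidly (1:1)."""
    c, s = math.cos(head_yaw), math.sin(head_yaw)
    x = head_pos[0] + c * local_offset[0] - s * local_offset[1]
    y = head_pos[1] + s * local_offset[0] + c * local_offset[1]
    return (x, y), head_yaw + local_yaw


if __name__ == "__main__":
    # Rate control: holding the head 12 degrees off-center keeps the model turning.
    angle = 0.0
    for _ in range(10):
        angle = rate_control_step(angle, math.radians(12.0), dt=0.05)
    print("rate-controlled angle (deg):", math.degrees(angle))

    # Zero-order control: the model stays at a fixed offset in front of the head.
    offset, rel_yaw = couple((0.0, 0.0), 0.0, (1.0, 0.0), 0.0)
    print("coupled pose:", coupled_pose((0.0, 0.0), math.pi / 2, offset, rel_yaw))
```

Under these assumptions, rate control lets a small sustained head rotation produce an arbitrarily large model rotation, while rigid coupling keeps the model at a constant position relative to the head, which is consistent with the model staying near the center of the field of view.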

References:

A. Grinshpoon, S. Sadri, G. Loeb, C. Elvezio, and S. Feiner. 2018. Hands-free interaction for augmented reality in vascular interventions. In Proc. IEEE Virtual Reality.

M. Hasan and H. Yu. 2017. Innovative developments in HCI and future trends. Int. J. of Automat. and Comp. 14, 1 (Feb 2017), 10–20. https://doi.org/10.1007/s11633-016-1039-6

S. Jalaliniya, J. Smith, M. Sousa, L. Büthe, and T. Pederson. 2013. Touch-less interaction with medical images using hand & foot gestures. In Proc. UbiComp 2013 Adjunct. ACM Press, NY, NY, 1265–1274. https://doi.org/10.1145/2494091.2497332

S. M. LaValle, A. Yershova, M. Katsev, and M. Antonov. 2014. Head tracking for the Oculus Rift. In Proc. ICRA 2014. 187–194. https://doi.org/10.1109/ICRA.2014.6906608

G. Loeb, S. Sadri, A. Grinshpoon, J. Carroll, C. Cooper, C. Elvezio, S. Mutasa, G. Mandigo, S. Lavine, J. Weintraub, A. Einstein, S. Feiner, and P. Meyers. 2018. 3:54 PM Abstract No. 29 Augmented reality guidance for cerebral angiography. JVIR 29, 4 (Apr 2018), S17. https://doi.org/10.1016/j.jvir.2018.01.036

A. Mewes, B. Hensen, F. Wacker, and C. Hansen. 2017. Touchless interaction with software in interventional radiology and surgery: A systematic literature review. Int. J. Comput. Assist. Radiol. Surg. 12, 2 (Feb 2017), 291–305. https://doi.org/10.1007/s11548-016-1480-6

A. Nishikawa, T. Hosoi, K. Koara, D. Negoro, A. Hikita, S. Asano, H. Kakutani, F. Miyazaki, M. Sekimoto, M. Yasui, Y. Miyake, S. Takiguchi, and M. Monden. 2003. FAce MOUSe: A novel human–machine interface for controlling the position of a laparoscope. IEEE Transactions on Robotics and Automation 19, 5 (Oct 2003), 825–841. https://doi.org/10.1109/TRA.2003.817093

R. Reilink, G. de Bruin, M. Franken, M. A. Mariani, S. Misra, and S. Stramigioli. 2010. Endoscopic camera control by head movements for thoracic surgery. In Proc. IEEE RAS & EMBS Int. Conf. on Biomed. Robotics and Biomechatronics. IEEE, 510–515. https://doi.org/10.1109/BIOROB.2010.5627043

J. P. Wachs, K. Vujjeni, E. T. Matson, and S. Adams. 2010. “A window on tissue”—Using facial orientation to control endoscopic views of tissue depth. Proc. IEEE EMBC 2010 2010 (2010), 935–938. https://doi.org/10.1109/iembs.2010.5627538

Keyword(s):

- Hands-free interaction

- Augmented reality

- vascular interventions

- head tracking

- head-worn display

Acknowledgements:

This material is based on work supported in part by the National Science Foundation under Grant IIS-1514429 (S. Feiner, PI), the National Institutes of Health under Grant NHLBI: 5T35HL007616-37 (R. Leibel, PI), and Columbia University Vagelos College of Physicians and Surgeons under Dean’s Research Fellowships to G. Loeb and S. Sadri. We thank the physicians at NewYork-Presbyterian/Columbia University Medical Center who contributed to this research.