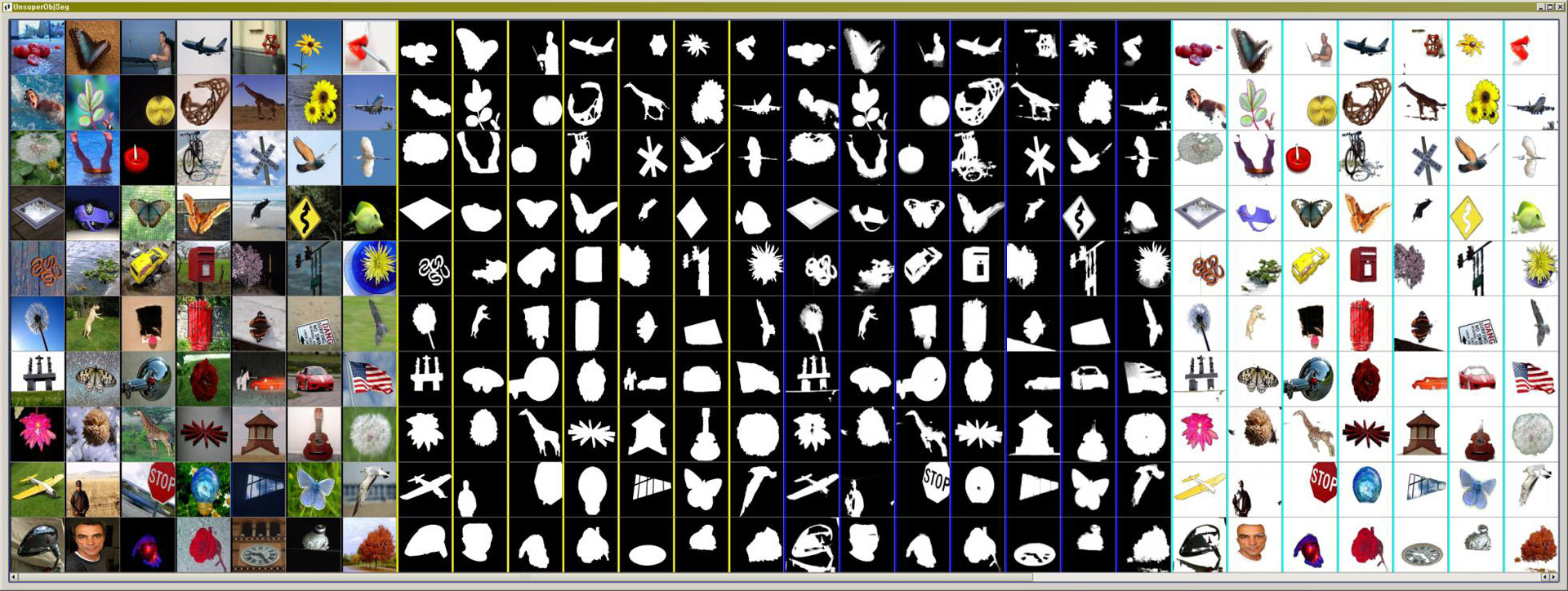

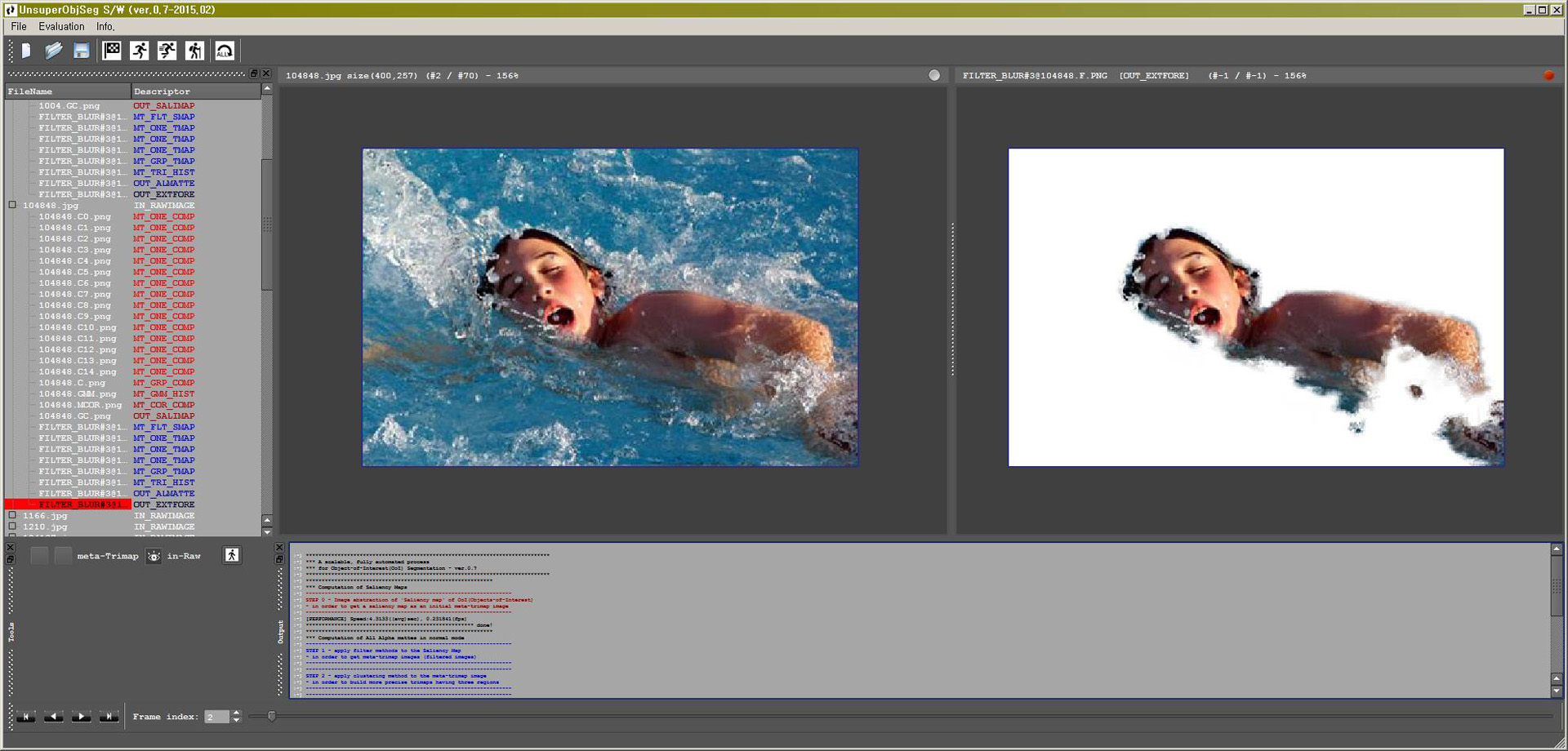

“UnAMT: Unsupervised Adaptive Matting Tool for Large-Scale Object Collections” by Kim, Park and Park

Conference:

Type(s):

Entry Number: 56

Title:

- UnAMT: Unsupervised Adaptive Matting Tool for Large-Scale Object Collections

Presenter(s)/Author(s):

Abstract:

Unsupervised matting, whose goal is to extract foreground components of interest from arbitrary natural backgrounds without any additional information about the scene contents, plays an important role in many computer vision and graphics applications. In particular, the precisely extracted object images produced by matting can be used to automatically generate large-scale annotated training sets with higher accuracy, and to improve the performance of a variety of applications, including content-based image retrieval. However, the unsupervised matting problem is intrinsically ill-posed, so it is hard to generate a perfectly segmented object matte from a given image without any prior knowledge. This additional information is usually supplied as a trimap, a rough pre-segmentation of the image into three subregions: foreground, background and unknown. When matting is applied to object collections in a large-scale image set, the requirement to manually specify a trimap for every independent input image is a serious drawback. Recently, automatic detection of salient object regions in images has been widely studied for computer vision tasks such as image segmentation and object recognition. Although many different saliency measures have been proposed under the common perceptual assumption that a salient region stands out from its surrounding neighbors and captures the attention of a human observer, most of the resulting saliency maps are noisy and are therefore insufficient for subsequent computational processes that require a highly accurate low-level representation of images.
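
To make the trimap idea concrete, the following is a minimal sketch of one way a trimap could be derived from a saliency map without manual input. It is not the method described in the abstract: the function name `trimap_from_saliency`, the two thresholds `low` and `high`, and the label values 0, 128 and 255 are all assumptions chosen for illustration. Pixels with high saliency are marked foreground, pixels with low saliency are marked background, and everything in between is left unknown for a matting step to resolve.

```python
from typing import List

# Hypothetical label values for the three trimap subregions (an assumption,
# following a common 8-bit convention; the abstract does not specify any).
BACKGROUND, UNKNOWN, FOREGROUND = 0, 128, 255


def trimap_from_saliency(
    saliency: List[List[float]], low: float = 0.3, high: float = 0.7
) -> List[List[int]]:
    """Label each pixel of a saliency map (values in [0, 1]) as
    foreground, background or unknown using two illustrative thresholds."""
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= low < high <= 1")

    trimap = []
    for row in saliency:
        out_row = []
        for s in row:
            if s >= high:
                out_row.append(FOREGROUND)   # confidently salient
            elif s <= low:
                out_row.append(BACKGROUND)   # confidently not salient
            else:
                out_row.append(UNKNOWN)      # left for the matting step
        trimap.append(out_row)
    return trimap


# Tiny hand-made saliency map used only to exercise the function.
example_saliency = [
    [0.05, 0.50, 0.95],
    [0.30, 0.70, 0.69],
]
example_trimap = trimap_from_saliency(example_saliency)
```

A noisy saliency map would produce a ragged or speckled unknown band under such fixed thresholds, which illustrates why raw saliency maps alone are described above as insufficient for accurate matting.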