“SketchFaceNeRF: Sketch-based Facial Generation and Editing in Neural Radiance Fields” by Gao, Liu, Chen, Jiang, Li, et al. …

Title:

- SketchFaceNeRF: Sketch-based Facial Generation and Editing in Neural Radiance Fields

Session/Category Title: NeRFs for Avatars

Abstract:

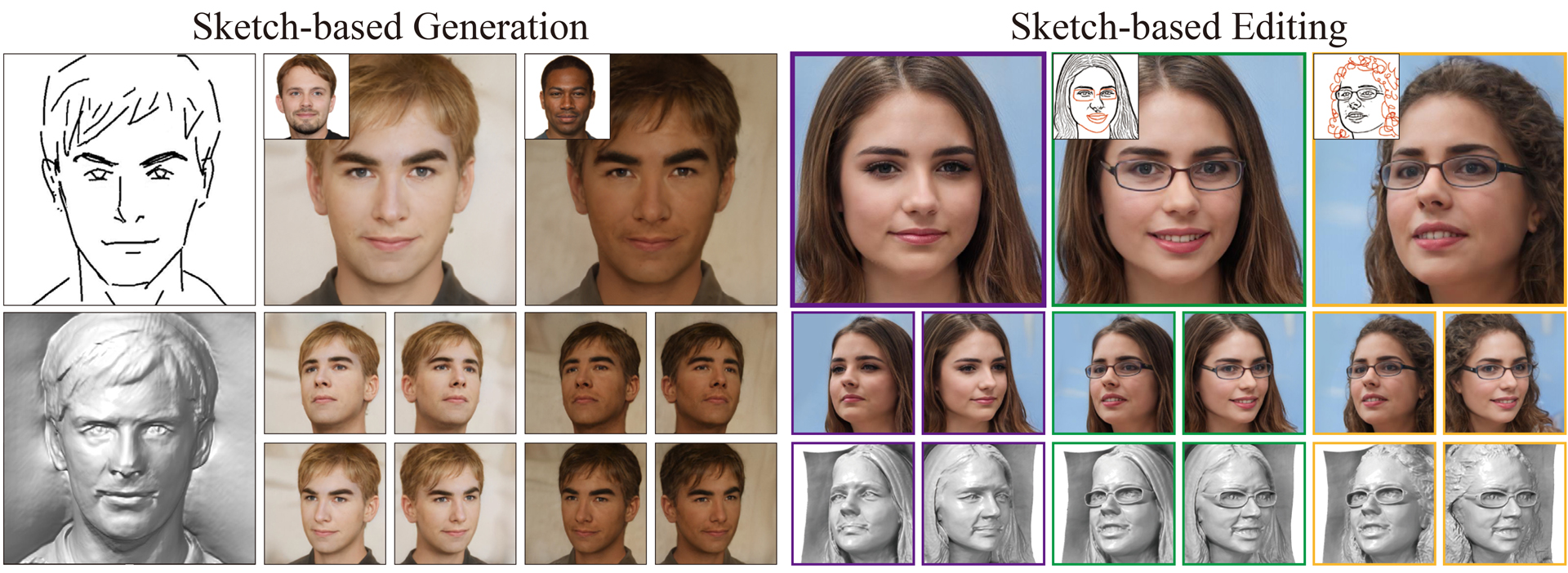

Realistic 3D facial generation from 2D sketches based on Neural Radiance Fields (NeRFs) benefits various applications. Despite the high realism of free-view NeRF renderings, it is tedious and difficult for artists to achieve detailed 3D control and manipulation. Meanwhile, because it is concise and expressive, sketching has been widely used for 2D facial image generation and editing. Applying sketching to NeRFs is challenging for three reasons: the inherent uncertainty of 3D generation under 2D constraints, the significant gap in content richness when generating faces from sparse sketches, and potential inconsistencies in sequential multi-view editing given only 2D sketch inputs. To address these challenges, we present SketchFaceNeRF, a novel sketch-based 3D facial NeRF generation and editing method that produces free-view photo-realistic images. To address sketch sparsity, we introduce a Sketch Tri-plane Prediction net that first injects appearance into sketches, generating features based on reference images to allow color and texture control. These features are then lifted into compact 3D tri-planes to supplement the missing 3D information, which is important for improving robustness and faithfulness. However, during editing, consistency in unseen or unedited 3D regions is difficult to maintain because sketches provide limited spatial hints. We therefore adopt a Mask Fusion module that transforms free-view 2D masks (inferred from sketch editing operations) into the tri-plane space as 3D masks, which guide the fusion of the original and sketch-based generated faces to synthesize edited faces. We further design an optimization approach with a novel space loss to improve identity retention and editing faithfulness. Our pipeline enables users to flexibly manipulate faces from different viewpoints in 3D space and easily design desirable facial models.
Extensive experiments validate that our approach is superior to the state-of-the-art 2D sketch-based image generation and editing approaches in realism and faithfulness.
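
The abstract relies on two tri-plane operations: querying features from compact 3D tri-planes, and using 3D masks to fuse the original face with the sketch-generated one. The minimal sketch below illustrates both under stated assumptions. It follows the EG3D-style tri-plane convention (three axis-aligned feature planes whose bilinearly sampled features are summed) and models mask fusion as a per-plane linear blend with soft masks in [0, 1]. The functions `bilinear_sample`, `sample_triplane`, and `fuse_triplanes`, the plane ordering (xy, xz, yz), and the blend rule are hypothetical illustrations; they are not the paper's Sketch Tri-plane Prediction net or Mask Fusion module, whose internals the abstract does not specify.

```python
import numpy as np


def bilinear_sample(plane: np.ndarray, u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Bilinearly sample a (C, H, W) plane at u (width axis) and v (height axis) in [-1, 1].

    Returns an (N, C) array. Coordinates outside [-1, 1] are clamped to the border.
    """
    _, H, W = plane.shape
    u = np.clip(np.asarray(u, dtype=np.float64), -1.0, 1.0)
    v = np.clip(np.asarray(v, dtype=np.float64), -1.0, 1.0)
    # Map [-1, 1] to continuous pixel coordinates (corner-aligned convention).
    x = (u + 1.0) * 0.5 * (W - 1)
    y = (v + 1.0) * 0.5 * (H - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, W - 1)
    y1 = np.minimum(y0 + 1, H - 1)
    wx = x - x0  # fractional offsets, shape (N,)
    wy = y - y0
    # Gather the four neighbouring texels; each has shape (C, N).
    f00 = plane[:, y0, x0]
    f01 = plane[:, y0, x1]
    f10 = plane[:, y1, x0]
    f11 = plane[:, y1, x1]
    out = ((1 - wx) * (1 - wy) * f00 + wx * (1 - wy) * f01
           + (1 - wx) * wy * f10 + wx * wy * f11)
    return out.T


def sample_triplane(planes, points: np.ndarray) -> np.ndarray:
    """Query tri-plane features for (N, 3) points in [-1, 1]^3.

    planes: tuple (P_xy, P_xz, P_yz), each (C, H, W). Each point is projected onto
    the three axis-aligned planes and the sampled features are summed (assumed, EG3D-style).
    """
    p_xy, p_xz, p_yz = planes
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    return (bilinear_sample(p_xy, x, y)
            + bilinear_sample(p_xz, x, z)
            + bilinear_sample(p_yz, y, z))


def fuse_triplanes(original, edited, masks):
    """Blend two tri-planes plane by plane with soft masks in [0, 1].

    masks: tuple of three (1, H, W) arrays; 1 keeps the sketch-edited features and
    0 keeps the original ones. This linear blend is a hypothetical stand-in for mask fusion.
    """
    return tuple(m * e + (1.0 - m) * o for o, e, m in zip(original, edited, masks))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    C, H, W = 8, 64, 64
    original = tuple(rng.standard_normal((C, H, W)) for _ in range(3))
    edited = tuple(rng.standard_normal((C, H, W)) for _ in range(3))
    # Hypothetical soft mask: take edited features only in the lower half of every plane.
    mask = np.zeros((1, H, W))
    mask[:, H // 2:, :] = 1.0
    fused = fuse_triplanes(original, edited, (mask, mask, mask))
    pts = rng.uniform(-1.0, 1.0, size=(5, 3))
    print(sample_triplane(fused, pts).shape)  # (5, 8)
```

Keeping the blend in tri-plane space means the fused result has the same format as either input, so the same decoder and renderer can consume it; how the paper's Mask Fusion module actually combines features is not stated in the abstract.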
