“RigNet: Neural Rigging for Articulated Characters” by Xu, Zhou, Kalogerakis, Landreth and Singh

Title:

- RigNet: Neural Rigging for Articulated Characters

Session/Category Title: Face and Full Body Motion

Abstract:

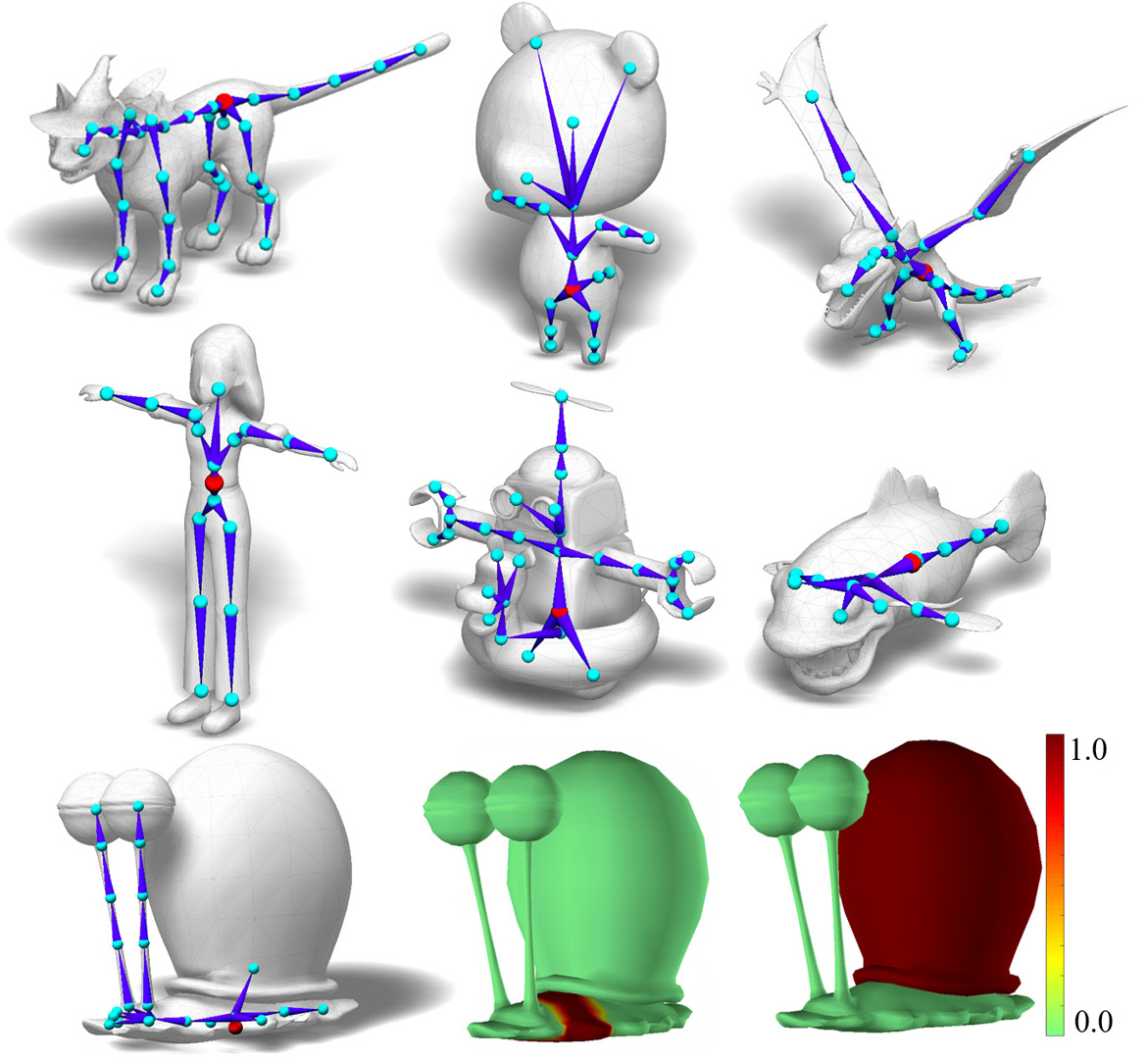

We present RigNet, an end-to-end automated method for producing animation rigs from input character models. Given an input 3D model representing an articulated character, RigNet predicts a skeleton that matches animator expectations in joint placement and topology. It also estimates surface skin weights based on the predicted skeleton. Our method is based on a deep architecture that operates directly on the mesh representation without making assumptions about shape class or structure. The architecture is trained on a large and diverse collection of rigged models, including their meshes, skeletons and corresponding skin weights. Our evaluation is threefold: quantitatively, we show better results than prior art when compared against animator-created rigs; qualitatively, we show that our rigs can be expressively posed and animated at multiple levels of detail; and finally, we evaluate the impact of various algorithmic choices on our output rigs.
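
To make the terms in the abstract concrete, the following is a minimal sketch of what a rig contains (joint positions, a parent-index topology, and per-vertex skin weights) and of how such weights are commonly used to pose a mesh via linear blend skinning. It is not RigNet's code and says nothing about how the network predicts these quantities. The names `Rig`, `check_rig` and `skin` are hypothetical, and the conventions used here (one root joint marked by `-1`, non-negative weights that sum to 1 per vertex, one 3x4 rest-to-posed transform per joint) are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3x4 = Sequence[Sequence[float]]  # 3 rows of [rotation | translation]


@dataclass
class Rig:
    """Hypothetical container for the rig components named in the abstract."""
    joints: List[Vec3]          # joint positions (joint placement)
    parents: List[int]          # parent index per joint, -1 for the root (topology)
    weights: List[List[float]]  # weights[v][j]: influence of joint j on vertex v


def check_rig(rig: Rig, tol: float = 1e-6) -> None:
    """Raise ValueError if the rig violates the conventions assumed here."""
    n = len(rig.joints)
    if len(rig.parents) != n or rig.parents.count(-1) != 1:
        raise ValueError("expected one parent entry per joint and exactly one root")
    for row in rig.weights:
        if len(row) != n or min(row) < 0.0 or abs(sum(row) - 1.0) > tol:
            raise ValueError("each vertex needs non-negative weights summing to 1")


def apply_transform(m: Mat3x4, p: Vec3) -> Vec3:
    """Apply a 3x4 affine transform to a point."""
    return tuple(
        m[r][0] * p[0] + m[r][1] * p[1] + m[r][2] * p[2] + m[r][3]
        for r in range(3)
    )


def skin(vertices: List[Vec3], weights: List[List[float]],
         transforms: List[Mat3x4]) -> List[Vec3]:
    """Linear blend skinning: each posed vertex is the weighted sum of the
    rest-pose vertex moved by every joint's rest-to-posed transform."""
    posed = []
    for v, row in zip(vertices, weights):
        acc = [0.0, 0.0, 0.0]
        for w, m in zip(row, transforms):
            if w == 0.0:
                continue  # joints with zero weight do not affect this vertex
            q = apply_transform(m, v)
            for k in range(3):
                acc[k] += w * q[k]
        posed.append(tuple(acc))
    return posed


# Two-joint toy rig: the root stays fixed, the child joint moves up by 1 unit.
rig = Rig(
    joints=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    parents=[-1, 0],
    weights=[[1.0, 0.0], [0.5, 0.5]],
)
check_rig(rig)

IDENTITY = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
LIFT = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 1.0], [0.0, 0.0, 1.0, 0.0]]

vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
posed = skin(vertices, rig.weights, [IDENTITY, LIFT])
print(posed)  # [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0)]
```

In this toy case the second vertex is influenced equally by both joints, so it moves half as far as the child joint does. This blending is why the quality of the skin weights matters for how naturally a rig deforms.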