“Dart-It: Interacting with a Remote Display by Throwing Your Finger Touch” by Huang, Liang, Chan and Chen

Conference:

Type(s):

Entry Number: 33

Title:

- Dart-It: Interacting with a Remote Display by Throwing Your Finger Touch

Presenter(s)/Author(s):

Abstract:

Hand-tracking technologies allow users to control a remote display freehand. The most prominent freehand remote-control method is the body-centric cursor, as used with, e.g., Kinect. With this method, a user first places the cursor at a rough position on the remote display, moves it to the exact position, and then commits the selection with a gesture. Although controlling a body-centric cursor is intuitive, it is inefficient for novice users who are not yet familiar with their proprioception. Inaccurate initial cursor placement results in long dragging movements, which in turn cause arm fatigue.

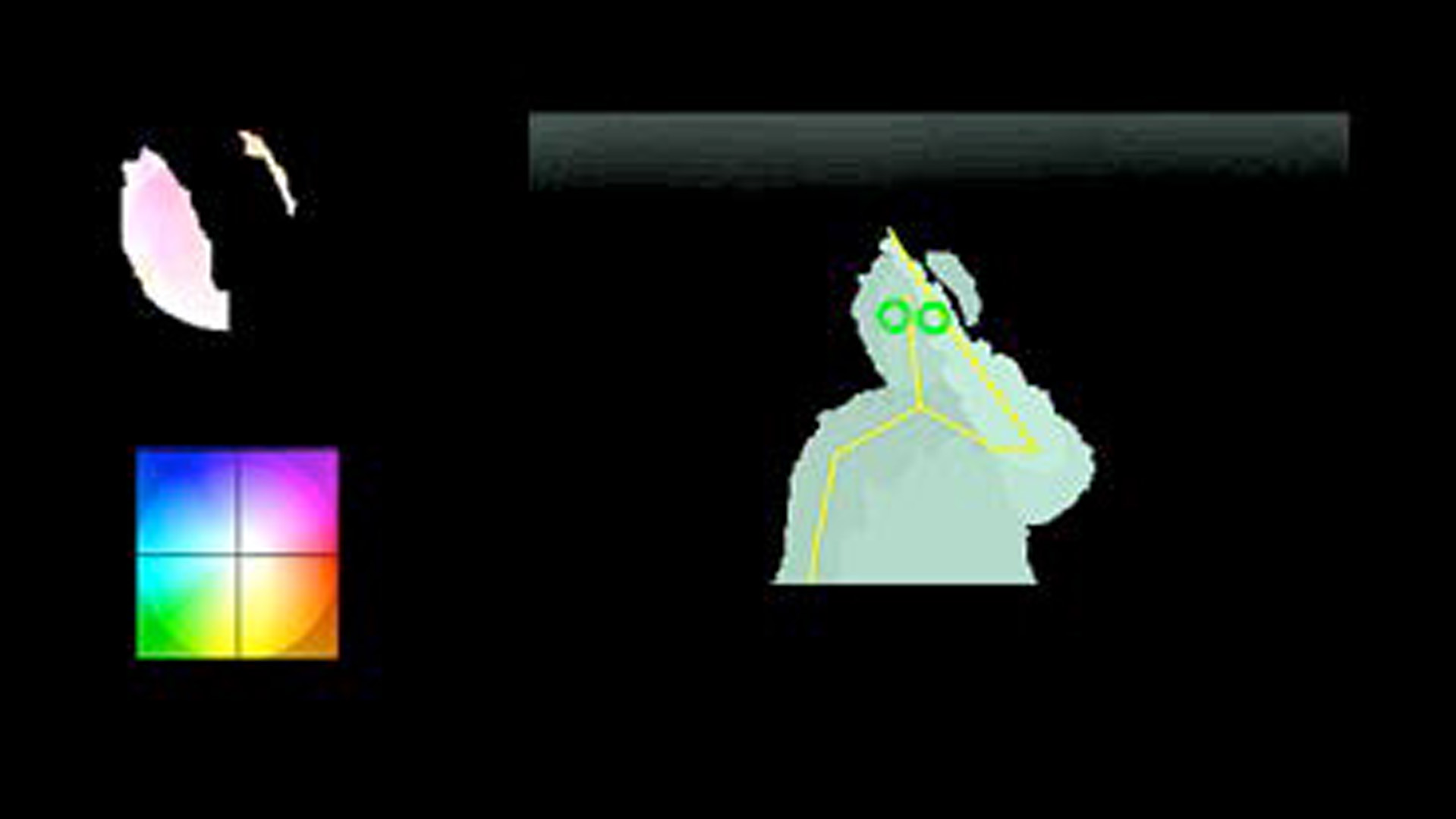

Perspective-based pointing [Pierce et al. 1997] is another freehand remote pointing method; it allows users to select a target on a remote display by pointing directly at what they see. When a user points at an on-screen target, the remote pointing position is defined by the ray cast from the user’s dominant eye through his/her fingertip. Perspective-based pointing is efficient because users can aim at the target accurately with their eyes. Nonetheless, because it requires reliable face and fingertip tracking, it usually needs extra cameras and motion trackers in real-world deployments [Banerjee et al. 2012], which is too heavyweight for general use. Hence, we present Dart-It (Figure 1), a lightweight system that uses only one RGBD camera to enable perspective-based remote direct pointing on any deployed remote display.
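The eye-to-fingertip ray described above can be illustrated with a short geometric sketch. This is not the Dart-It implementation, which the abstract does not detail. It assumes that the eye and fingertip positions and the display plane are already expressed in one shared 3D coordinate frame (for example, the camera's). The function name `pointing_position` and its parameters are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pointing_position(
    eye: Vec3,
    fingertip: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-9,
) -> Optional[Vec3]:
    """Intersect the ray from the eye through the fingertip with the display plane.

    All inputs are assumed to share one coordinate frame. Returns the 3D hit
    point, or None if the ray is parallel to the plane or points away from it.
    """
    direction = _sub(fingertip, eye)
    denom = _dot(plane_normal, direction)
    if abs(denom) < eps:
        return None  # ray runs parallel to the display plane
    t = _dot(plane_normal, _sub(plane_point, eye)) / denom
    if t <= 0:
        return None  # display plane lies behind the user
    return (
        eye[0] + t * direction[0],
        eye[1] + t * direction[1],
        eye[2] + t * direction[2],
    )


# Illustrative values (metres): display in the plane z = 0, user about 2 m away.
hit = pointing_position(
    eye=(0.0, 1.6, 2.0),
    fingertip=(0.1, 1.5, 1.5),
    plane_point=(0.0, 0.0, 0.0),
    plane_normal=(0.0, 0.0, 1.0),
)
print(hit)
```

Mapping the returned 3D point to pixel coordinates would additionally require the display's position, size, and resolution, which are left out here.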

References:

- Banerjee, A., Burstyn, J., Girouard, A., and Vertegaal, R. 2012. MultiPoint: Comparing laser and manual pointing as remote input in large display interactions. IJHCS 70, 10, 690–702.

- Pierce, J. S., Forsberg, A. S., Conway, M. J., Hong, S., Zeleznik, R. C., and Mine, M. R. 1997. Image plane interaction techniques in 3D immersive environments. In Proc. I3D ’97, 39–43.

Acknowledgements:

This research was supported in part by the National Science Council of Taiwan under grant NSC101-2219-E-002-026.