“Compressive epsilon photography for post-capture control in digital imaging” by Ito, Tambe, Mitra, Sankaranarayanan and Veeraraghavan

Title:

- Compressive epsilon photography for post-capture control in digital imaging

Session/Category Title:

- Computational Sensing & Display

Abstract:

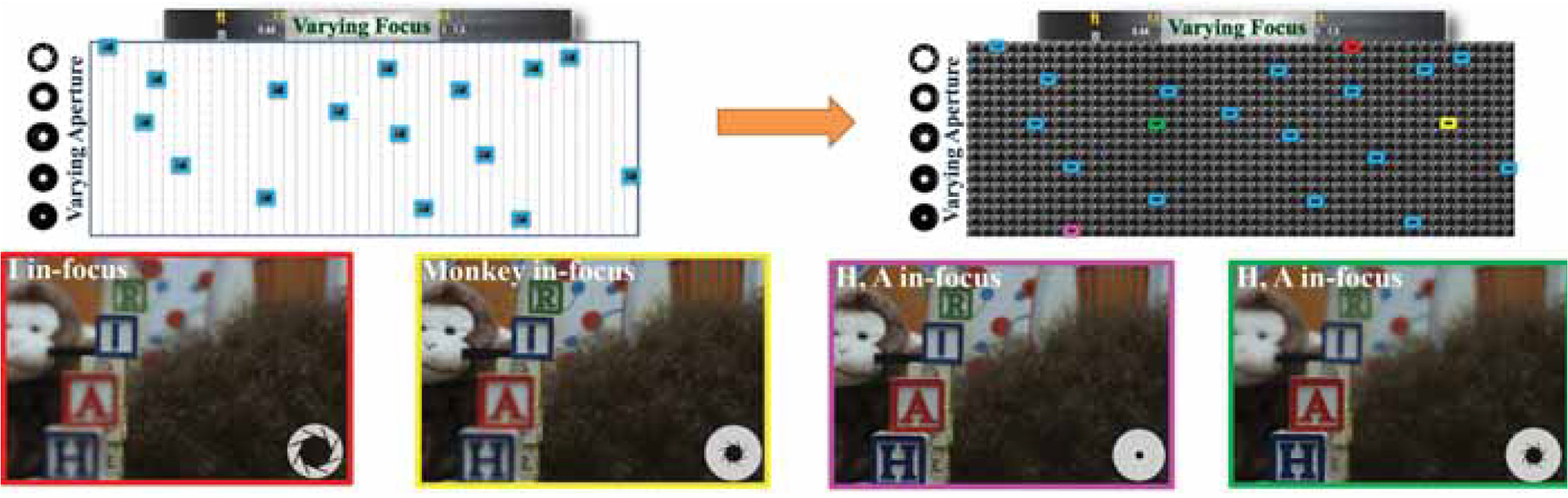

A traditional camera requires the photographer to select many parameters at capture time. While advances in light field photography have enabled post-capture control of focus and perspective, they suffer from several limitations, including lower spatial resolution, the need for hardware modifications, and a restrictive choice of aperture and focus settings. In this paper, we propose “compressive epsilon photography,” a technique for achieving complete post-capture control of focus and aperture in a traditional camera by acquiring a carefully selected set of 8 to 16 images and computationally reconstructing images corresponding to all other focus-aperture settings. We make the following contributions: first, we learn the statistical redundancies in focal-aperture stacks using a Gaussian Mixture Model; second, we derive a greedy sampling strategy for selecting the best focus-aperture settings; and third, we develop an algorithm for reconstructing the entire focal-aperture stack from a few captured images. As a consequence, only a burst of images with carefully selected camera settings is acquired. Post-capture, the user can then select any focal-aperture setting of choice, and the corresponding image can be rendered using our algorithm. We show extensive results on several real data sets.
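The three contributions listed in the abstract (a Gaussian Mixture Model prior, greedy selection of settings, and reconstruction from a few samples) can be illustrated with a toy sketch. This is not the paper's implementation. It assumes the signal is a short vector with one entry per focus-aperture setting, that the GMM parameters (`weights`, `means`, `covs`) are already learned, and that each capture observes one entry plus Gaussian noise. The function names and the error proxy used for the greedy step are hypothetical choices made for illustration.

```python
import numpy as np

def gmm_mmse_reconstruct(y, idx, weights, means, covs, noise_var=1e-4):
    """Estimate a full vector x from noisy samples y = x[idx] + n under a GMM prior.

    weights: (K,), means: (K, D), covs: (K, D, D). All names are illustrative.
    """
    idx = np.asarray(idx)
    K, D = means.shape
    log_post = np.empty(K)
    cond_means = np.empty((K, D))
    for k, (w, mu, cov) in enumerate(zip(weights, means, covs)):
        # Covariance of the observed entries plus measurement noise.
        S = cov[np.ix_(idx, idx)] + noise_var * np.eye(len(idx))
        r = y - mu[idx]
        sol = np.linalg.solve(S, r)
        _, logdet = np.linalg.slogdet(S)
        # Log of w * N(y; mu[idx], S), up to a constant shared by all components.
        log_post[k] = np.log(w) - 0.5 * (logdet + r @ sol)
        # Gaussian conditional mean of the full vector given the observed entries.
        cond_means[k] = mu + cov[:, idx] @ sol
    # Normalize posterior component weights in a numerically stable way.
    post = np.exp(log_post - log_post.max())
    post /= post.sum()
    return post @ cond_means

def expected_error_proxy(idx, weights, covs, noise_var=1e-4):
    """Prior-weighted trace of per-component posterior covariances.

    Assumption: this is a simple proxy, not the exact MMSE of a GMM.
    """
    idx = np.asarray(idx)
    total = 0.0
    for w, cov in zip(weights, covs):
        S = cov[np.ix_(idx, idx)] + noise_var * np.eye(len(idx))
        C = cov[:, idx]
        total += w * (np.trace(cov) - np.trace(C @ np.linalg.solve(S, C.T)))
    return total

def greedy_select(n_pick, weights, covs, noise_var=1e-4):
    """Greedily add the entry that most reduces the error proxy."""
    chosen = []
    dim = covs[0].shape[0]
    for _ in range(n_pick):
        candidates = [i for i in range(dim) if i not in chosen]
        best = min(
            candidates,
            key=lambda i: expected_error_proxy(chosen + [i], weights, covs, noise_var),
        )
        chosen.append(best)
    return chosen

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    D, K = 8, 2
    # Toy prior: each component is nearly rank one, so entries are highly redundant.
    dirs = rng.normal(size=(K, D))
    covs = np.stack([np.outer(d, d) + 1e-3 * np.eye(D) for d in dirs])
    means = np.zeros((K, D))
    weights = np.full(K, 1.0 / K)
    picks = greedy_select(3, weights, covs)
    x = rng.normal() * dirs[0]  # a signal drawn along component 0
    x_hat = gmm_mmse_reconstruct(x[picks], picks, weights, means, covs)
    print("selected entries:", picks)
    print("max abs error:", np.abs(x_hat - x).max())
```

In this toy setting the redundancy lives in the near-rank-one covariances, which is why a few well-chosen entries can predict the rest. A real focal-aperture stack would more plausibly be modeled on image patches, with a prior learned from training stacks.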
