“Blendshapes from Commodity RGB-D Sensors”

Conference:

Type(s):

Entry Number: 33

Title:

- Blendshapes from Commodity RGB-D Sensors

Presenter(s)/Author(s):

Abstract:

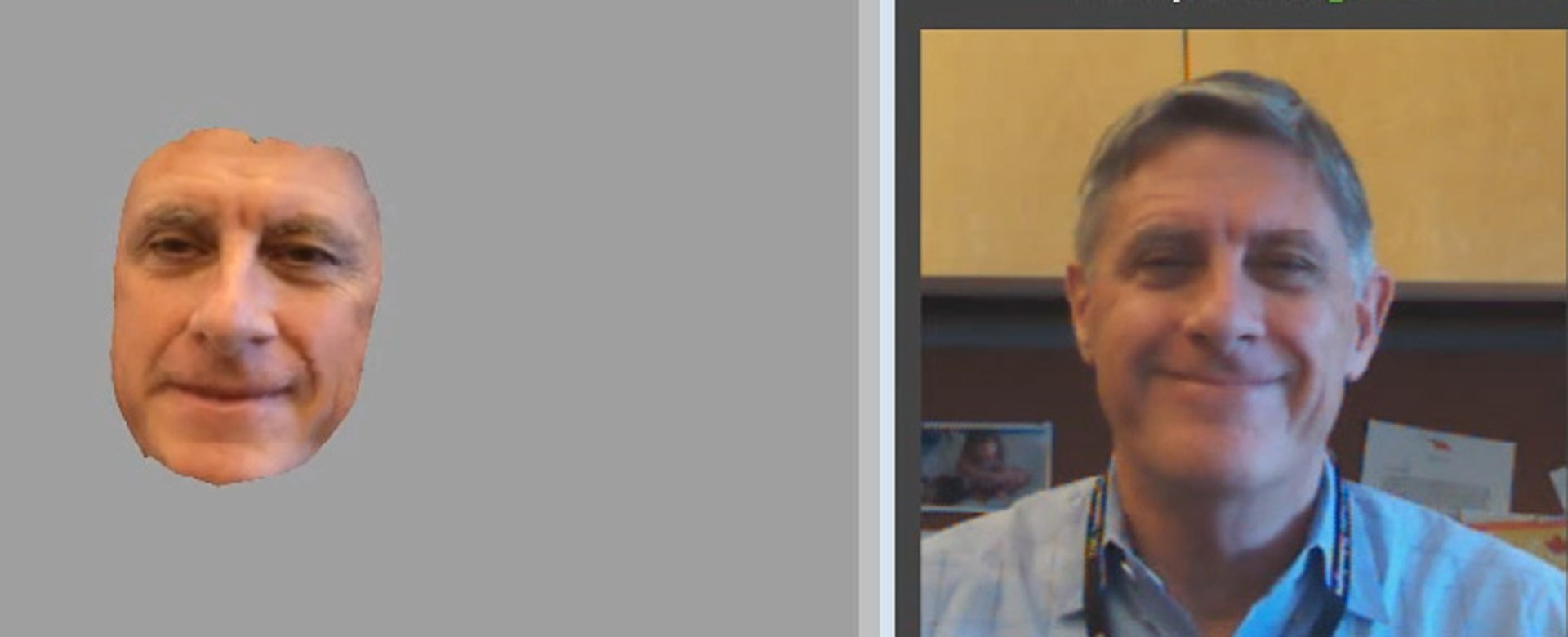

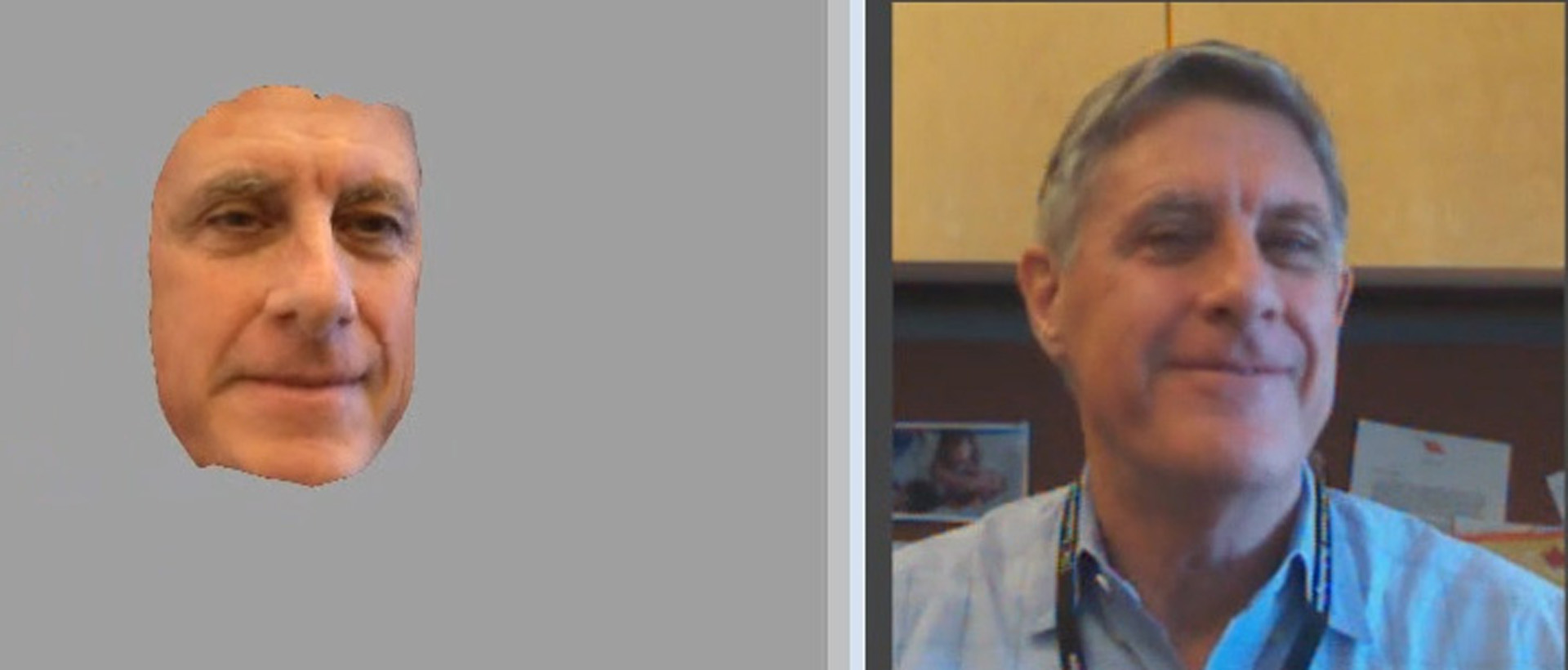

Creating and animating a realistic 3D human face is an important task in computer graphics. The ability to capture the 3D face of a human subject and reanimate it quickly has many applications in games, training simulations, and interactive 3D graphics. We demonstrate a system that captures photorealistic 3D faces and generates blendshape models automatically using only a single commodity RGB-D sensor. Our method rapidly generates a set of expressive facial poses from a single depth sensor, such as a Microsoft Kinect version 1, and requires no artistic expertise to process those scans. The system takes only seconds to capture and produce a 3D facial pose, and only a few minutes of processing time to transform it into a blendshape-compatible model. Our main contributions are an end-to-end pipeline for capturing and generating face blendshape models automatically, and a registration method that solves for dense correspondences between two face scans using facial landmark detection and optical flow. We demonstrate the effectiveness of the proposed method by capturing different human subjects and puppeteering their 3D faces in an animation system with real-time facial performance retargeting.
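To illustrate why dense correspondence between scans matters, the sketch below evaluates a blendshape model in the common delta form, where each posed vertex is the neutral vertex plus a weighted sum of per-target offsets. This is a minimal sketch under assumptions, not the authors' implementation: the abstract does not state which blendshape formulation the system uses, and the function name `blend`, the target names, and the toy two-vertex meshes are all hypothetical. The formula only makes sense when every target shares the neutral mesh's vertex count and ordering, which is the kind of correspondence a registration step has to provide.

```python
from typing import Dict, List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def blend(neutral: Sequence[Vertex],
          targets: Dict[str, Sequence[Vertex]],
          weights: Dict[str, float]) -> List[Vertex]:
    """Evaluate a delta blendshape model: v_i = n_i + sum_k w_k * (t_k,i - n_i).

    Hypothetical helper for illustration; assumes every target mesh has the
    same vertex count and vertex order as the neutral mesh.
    """
    posed = [list(v) for v in neutral]
    for name, weight in weights.items():
        target = targets[name]
        if len(target) != len(neutral):
            raise ValueError(f"target '{name}' is not in correspondence with the neutral mesh")
        for i, (n, t) in enumerate(zip(neutral, target)):
            for axis in range(3):
                # Add this target's weighted offset from the neutral pose.
                posed[i][axis] += weight * (t[axis] - n[axis])
    return [(v[0], v[1], v[2]) for v in posed]


# Toy data (made up): a two-vertex "face" with two expression targets.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "smile": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)],
    "jaw_open": [(0.0, 0.0, 0.0), (1.0, -2.0, 0.0)],
}
posed = blend(neutral, targets, {"smile": 0.5, "jaw_open": 0.25})
```

In a retargeting setting, the weights would be supplied per frame by a facial performance tracker and applied to the captured subject's targets in the same way.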