“SemanticPaint: Interactive Segmentation and Learning of 3D Worlds” by Golodetz, Sapienza, Valentin, Vineet, Cheng, et al. …

Conference:

Experience Type(s):

Entry Number: 22

Title:

- SemanticPaint: Interactive Segmentation and Learning of 3D Worlds

Organizer(s)/Presenter(s):

Description:

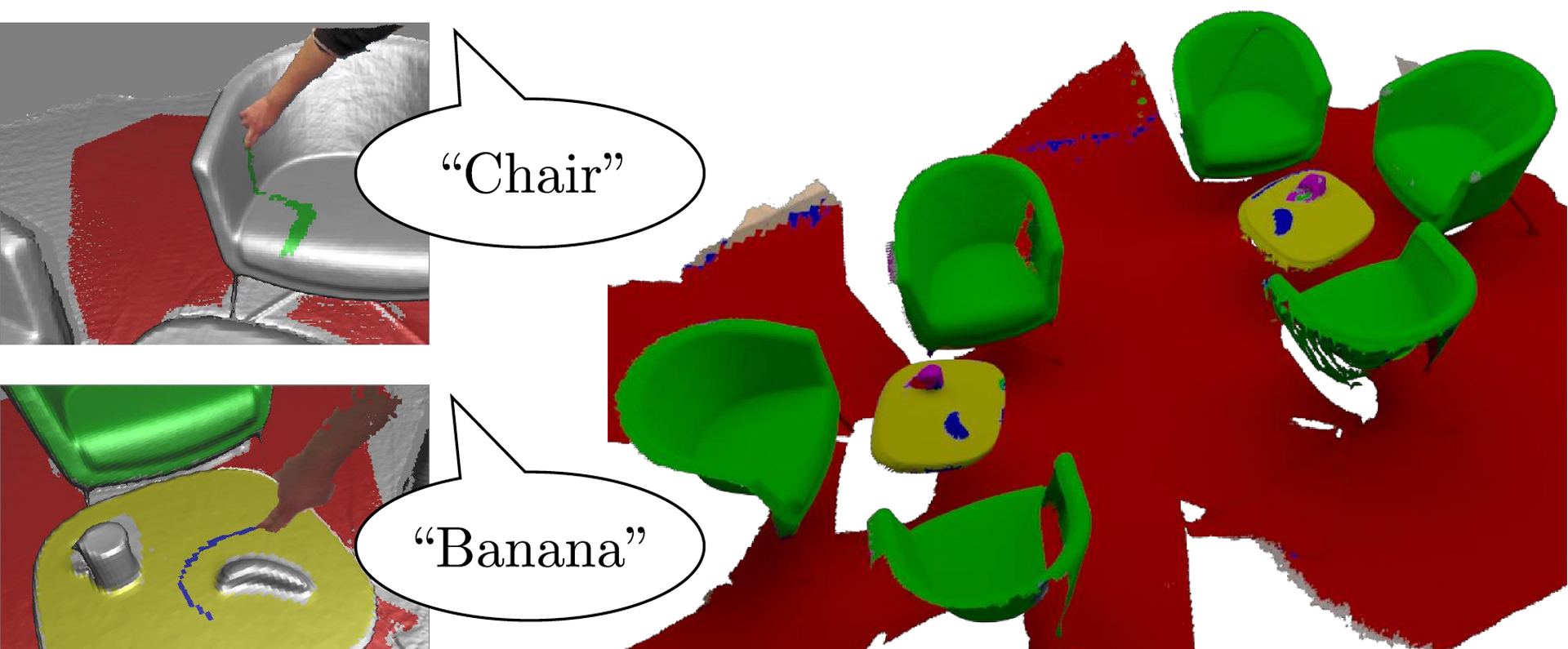

We present a real-time, interactive system for the geometric reconstruction, object-class segmentation and learning of 3D scenes [Valentin et al. 2015]. Using our system, a user can walk into a room wearing a depth camera and a virtual reality headset, and both densely reconstruct the 3D scene [Newcombe et al. 2011; Nießner et al. 2013; Prisacariu et al. 2014] and interactively segment the environment into object classes such as ‘chair’, ‘floor’ and ‘table’. The user interacts physically with the real-world scene, touching objects and using voice commands to assign them appropriate labels. These user-generated labels are leveraged by an online random forest-based machine learning algorithm, which is used to predict labels for previously unseen parts of the scene. The predicted labels, together with those provided directly by the user, are incorporated into a dense 3D conditional random field model, over which we perform mean-field inference to filter out label inconsistencies. The entire pipeline runs in real time, and the user stays ‘in the loop’ throughout the process, receiving immediate feedback about the progress of the labelling and interacting with the scene as necessary to refine the predicted segmentation.
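The label-filtering step described above can be illustrated with a small sketch of mean-field inference. This is not the system's implementation: it assumes a simple Potts pairwise term on a sparse one-dimensional chain of five nodes in place of the dense 3D conditional random field, and the function names, costs and weights (`mean_field_potts`, `labels_from_marginals`, `pairwise_weight`) are hypothetical choices made for illustration.

```python
import math


def mean_field_potts(unary, neighbours, pairwise_weight=1.0, iterations=10):
    """Approximate per-node label distributions for a Potts-model CRF.

    unary[i][l]   : cost (negative log-probability) of giving node i label l,
                    e.g. derived from a classifier's prediction (assumption)
    neighbours[i] : indices of the nodes adjacent to node i
    """
    num_labels = len(unary[0])

    def softmax_neg(costs):
        # Turn costs into a distribution with q(l) proportional to exp(-cost).
        lowest = min(costs)
        exps = [math.exp(-(c - lowest)) for c in costs]
        total = sum(exps)
        return [e / total for e in exps]

    # Start from the unary costs alone, ignoring the neighbours.
    q = [softmax_neg(u) for u in unary]

    for _ in range(iterations):
        new_q = []
        for i, u in enumerate(unary):
            costs = []
            for label in range(num_labels):
                # Potts term: expected number of neighbours that disagree.
                disagreement = sum(1.0 - q[j][label] for j in neighbours[i])
                costs.append(u[label] + pairwise_weight * disagreement)
            new_q.append(softmax_neg(costs))
        q = new_q  # synchronous update of every node
    return q


def labels_from_marginals(q):
    # Pick the most probable label for each node.
    return [max(range(len(dist)), key=dist.__getitem__) for dist in q]


# Toy example: label 0 = 'floor', label 1 = 'chair' (illustrative only).
# Node 2 has a weak, inconsistent preference for 'chair'.
unary = [[0.1, 2.0], [0.1, 2.0], [1.0, 0.6], [0.1, 2.0], [0.1, 2.0]]
neighbours = [[1], [0, 2], [1, 3], [2, 4], [3]]

q = mean_field_potts(unary, neighbours, pairwise_weight=2.0)
print(labels_from_marginals(q))
```

In this toy setting, taking the cheapest label per node independently would leave node 2 labelled differently from its neighbours, whereas the mean-field update pulls it towards the label that its neighbours agree on. A real-time system would need a far more efficient formulation than this nested-loop version.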

References:

NEWCOMBE, R. A. et al. 2011. KinectFusion: Real-Time Dense Surface Mapping and Tracking. In ISMAR, IEEE.

NIESSNER, M. et al. 2013. Real-time 3D Reconstruction at Scale using Voxel Hashing. ACM TOG 32, 6, 169.

PRISACARIU, V. A., KÄHLER, O. et al. 2014. A Framework for the Volumetric Integration of Depth Images. ArXiv e-prints.

VALENTIN, J. P. C. et al. 2015. SemanticPaint: Interactive 3D Labeling and Learning at your Fingertips. To appear in ACM TOG.