“Interacting With Virtual Actors” by Perlin

Conference:

- SIGGRAPH 1995

Type(s):

Entry Number: 05

Title:

- Interacting With Virtual Actors

Program Title:

- Interactive Entertainment

Presenter(s):

Collaborator(s):

- Athomas Goldberg

- Maria Augusta

- Leo Cadaval

- Troy Downing

- Mehmet Karaul

- Kouchen Lin

- Dan Moss

- Ruggero Ruschioni

- Eduardo Toledo

- Daniel Wey

- Marcelo Knorich-Zuffo

Project Affiliation:

- NYU Media Research Lab

Description:

- Creating a sense of social space and interaction.

- Making embodied characters believable and responsive to users in real time.

- Effective use of a distributed network for computing the behavior of actors.

- Generating a “suspension of disbelief” among the participants, so they feel the experience is really happening.

The New York University Media Research Laboratory (NYU-MRL) aims to create a sense of social space and interaction, to create virtual actors that respond believably in social ways in real time, without the intervention of human puppeteers, and to effectively use a distributed network for computing the behavior of the virtual actors. These are enabling technologies for interactive television and embodied metaverses.

History

In 1985, NYU-MRL used pseudo-random functions to create natural surface textures of surprisingly realistic appearance without modeling the underlying physics. This work led, for example, to a popular texture “noise function,” the skin on the “Jurassic Park” dinosaurs, and the atmospheric effects in “The Lion King.”

Last year, the lab applied the same approach to the problem of building real-time graphic puppets that appear to be emotionally responsive. Instead of trying to model the correct physics of human movement, they applied noise functions, together with rhythmic motions. The results, which were surprisingly lifelike, can be used to convey very subtle expressions of personality and body language. They were demonstrated in the short film “Danse Interactif” in the SIGGRAPH 94 Electronic Theatre.
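The combination of noise and rhythm can be illustrated with a minimal sketch. It assumes a one-dimensional gradient noise in the spirit of the lab's noise function and drives a single hypothetical joint angle as a sine-wave swing plus slowly drifting noise. The permutation table, the fade curve, and the `period`, `amplitude`, and `jitter` values are illustrative choices, not the lab's actual code.

```python
import math
import random

# Hypothetical 1D gradient noise in the style of the lab's noise function.
# A fixed, seeded permutation gives each integer lattice point a pseudo-random gradient.
_rng = random.Random(1995)
_PERM = list(range(256))
_rng.shuffle(_PERM)
_GRAD = [_rng.uniform(-1.0, 1.0) for _ in range(256)]


def _fade(t: float) -> float:
    # Smooth interpolation curve 6t^5 - 15t^4 + 10t^3.
    return t * t * t * (t * (t * 6 - 15) + 10)


def noise1d(x: float) -> float:
    """Smooth pseudo-random value in [-1, 1] that is exactly 0 at integer x."""
    i0 = math.floor(x)
    t = x - i0
    g0 = _GRAD[_PERM[i0 & 255]]
    g1 = _GRAD[_PERM[(i0 + 1) & 255]]
    # Contributions of the gradients at the two neighbouring lattice points.
    v0 = g0 * t
    v1 = g1 * (t - 1.0)
    return v0 + (v1 - v0) * _fade(t)


def joint_angle(time_s: float, period: float = 1.2, amplitude: float = 20.0,
                jitter: float = 8.0) -> float:
    """Angle in degrees: a rhythmic swing plus noise (all parameters hypothetical)."""
    rhythm = amplitude * math.sin(2.0 * math.pi * time_s / period)
    # Sample the noise slowly so the variation drifts rather than flickers.
    variation = jitter * noise1d(time_s * 0.7)
    return rhythm + variation


if __name__ == "__main__":
    for frame in range(5):
        t = frame / 30.0  # assume 30 frames per second
        print(f"frame {frame}: {joint_angle(t):+.2f} deg")
```

Because the noise is smooth, the added variation reads as small shifts of weight or attention rather than jitter; the ratio of `jitter` to `amplitude` is one plausible knob for making a character seem calmer or more restless.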

NYU-MRL shares an interest in real-time parallel computation with the Laboratorio de Sistemas Integraveis at the University of São Paulo. Together, the two labs have now successfully extended these techniques to real-time social interaction between groups of puppets.

Basic Functionalities

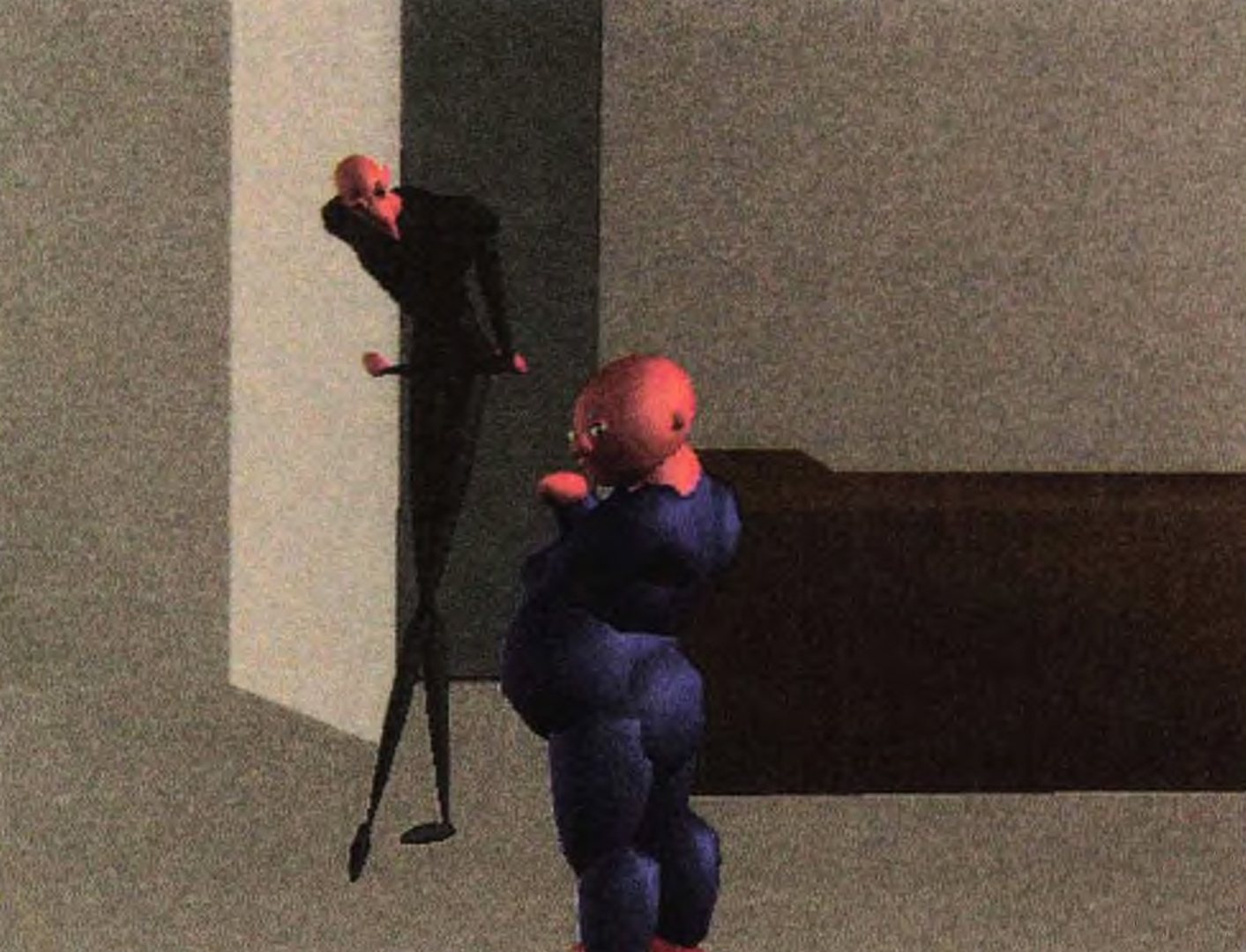

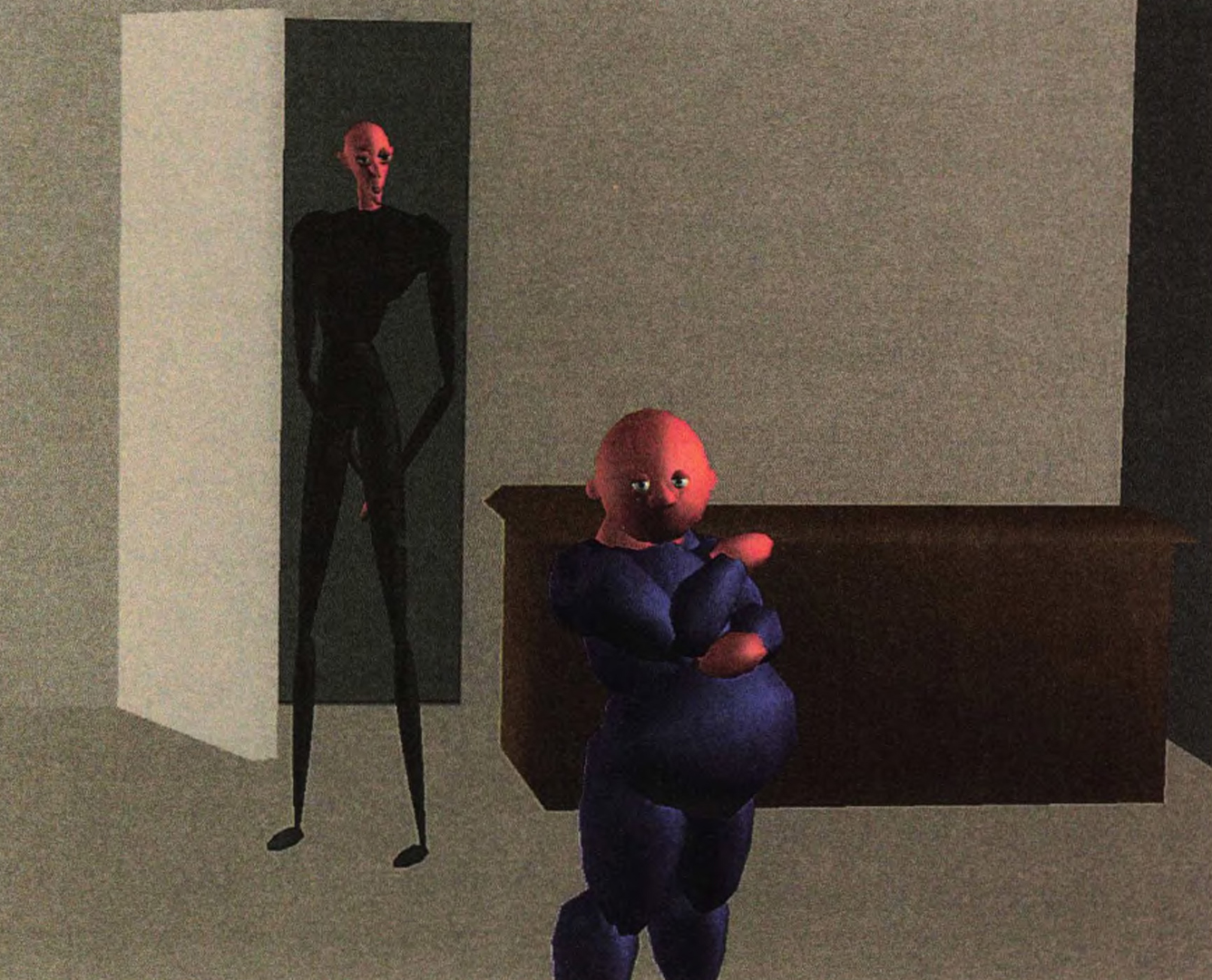

In this project, participants enter a darkened corridor and see a virtual room through a “picture window.” A few virtual people in the virtual room are interacting socially with each other. The virtual room is rendered on a Silicon Graphics workstation and rear-projected via a high-resolution projector. A ceiling-mounted video tracker tracks each participant’s position. There is no trained operator intervening in the activities. Naive participants interact on their own, in complex ways, with the virtual actors.

The virtual people in the virtual room interact with each other. They move about, perhaps give each other things, look out the window, or shake hands. It’s a typical social gathering. To the participant, their “talking” is heard as indistinct murmuring. It is clear that they are talking (even their tone of voice can be discerned), but it is not clear what language they are speaking.

The participants are interesting to these virtual people. One or two of them might glance over to see what a participant is up to. Another might walk over to the “window” and peer at the participant. As the participant moves about, virtual people follow the movements with their eyes. One might point, or wave. If the participant knocks on the window, one of them might knock back, and the sound is audible. If the participant gets too close, the virtual person near the “window” steps back, to maintain a safe distance. Two or more people might argue about the participant’s presence, pointing and waving or raising their voices. Participants try to get the attention of the virtual people in the virtual room. They try to be noticed, and to provoke a reaction.
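The gaze-following and step-back responses amount to simple per-frame rules driven by the tracker's position estimate. This is a minimal illustration under stated assumptions: the floor-plane coordinates with the window along y = 0, the `comfort_distance` and `step` values, and the `Actor` record are all hypothetical, since the source does not describe how these behaviors were encoded.

```python
import math
from dataclasses import dataclass


@dataclass
class Actor:
    x: float              # floor-plane position in metres (hypothetical units)
    y: float              # distance behind the window; the window lies along y = 0
    heading: float = 0.0  # gaze direction in radians


def update_actor(actor: Actor, participant_x: float, participant_y: float,
                 comfort_distance: float = 1.0, step: float = 0.05) -> Actor:
    """One frame of hypothetical gaze-following and step-back behavior.

    The participant stands in front of the window (y < 0); the actor is
    inside the virtual room (y > 0).
    """
    dx = participant_x - actor.x
    dy = participant_y - actor.y
    dist = math.hypot(dx, dy)
    # Gaze: turn to face the tracked participant.
    heading = math.atan2(dy, dx)
    x, y = actor.x, actor.y
    if 0.0 < dist < comfort_distance:
        # Too close: take a small step directly away from the participant.
        x -= step * dx / dist
        y -= step * dy / dist
    return Actor(x, y, heading)


if __name__ == "__main__":
    actor = Actor(x=0.0, y=0.5)
    # The participant walks up to 0.3 m in front of the window.
    actor = update_actor(actor, participant_x=0.0, participant_y=-0.3)
    print(actor)
```

Calling `update_actor` once per frame with the latest tracker reading makes the gaze follow the participant continuously, while the step-back only fires inside the comfort radius.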

To the participant, the virtual people seem to be from another country or even another planet. They do not speak the same language, but there is an attempt to communicate, mostly through body language.

Technical Information

Each actor is computed on a different computer in a local area network (LAN). They communicate with each other over the LAN in real time at interactive frame rates. The actors actually pass entire behavior procedures to each other over the network at each animation frame, giving each other complex instructions and hints on how to respond to each other and allowing them to coordinate their responses to the participant.
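A minimal, single-process sketch of frame-synchronous exchange can make this coordination scheme concrete. Here an in-memory inbox stands in for the LAN, and each "procedure" is a JSON list of `[verb, argument]` steps. The verbs `look_at` and `nod` and the `ActorNode` class are hypothetical; the real system shipped procedures written in its own behavior language rather than JSON.

```python
import json
from collections import defaultdict


class ActorNode:
    """One actor; in the installation each would run on its own machine."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.log: list[str] = []

    def outgoing(self, peers: list[str]) -> list[tuple[str, str]]:
        """Compose (recipient, serialized procedure) pairs for this frame."""
        # Hypothetical hint: "look at the participant, then acknowledge me".
        procedure = [["look_at", "participant"], ["nod", self.name]]
        payload = json.dumps(procedure)
        return [(peer, payload) for peer in peers if peer != self.name]

    def run(self, payload: str) -> None:
        """Execute a received procedure (in this sketch it is only logged)."""
        for verb, arg in json.loads(payload):
            self.log.append(f"{self.name}:{verb}({arg})")


def simulate(names: list[str], frames: int) -> dict[str, ActorNode]:
    nodes = {name: ActorNode(name) for name in names}
    for _ in range(frames):
        # Phase 1: every actor composes its messages from the same frame state.
        inbox: dict[str, list[str]] = defaultdict(list)
        for node in nodes.values():
            for recipient, payload in node.outgoing(names):
                inbox[recipient].append(payload)
        # Phase 2: deliver and execute, so all actors advance in lockstep.
        for recipient, payloads in inbox.items():
            for payload in payloads:
                nodes[recipient].run(payload)
    return nodes


if __name__ == "__main__":
    actors = simulate(["ana", "ben", "caio"], frames=1)
    print(actors["ana"].log)
```

Separating composition from delivery means no actor reacts to a message from the current frame before every actor has sent its own, which is one simple way to keep coordinated responses consistent across machines.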

The behavior description language that is passed around the network is the same language that was originally developed for NYU-MRL’s interactive texture work in 1984. It has special features not usually found in programming languages (including very lightweight procedure definitions and a powerful method for fast dynamic scoping) that are necessary to realize this sort of performance.
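Dynamic scoping means a procedure's free variables are resolved in the bindings active when it is called, not where it was defined. This sketch uses shallow binding, a standard technique that keeps one table of current values (so lookup is a single dictionary access) and saves and restores old values on entry and exit. The source does not say which method the lab's language used; `ShallowEnv`, `bind`, `greet`, and the `mood` variable are hypothetical.

```python
from contextlib import contextmanager

_MISSING = object()  # marks names that had no binding before a bind()


class ShallowEnv:
    """Dynamic scoping with shallow binding: O(1) lookup, save/restore on bind."""

    def __init__(self, **initial):
        self._current = dict(initial)  # the single table of current values
        self._saved = []               # stack of (name, previous value) pairs

    def lookup(self, name):
        return self._current[name]

    @contextmanager
    def bind(self, **bindings):
        # Save old values and install new ones for the dynamic extent of the block.
        for name, value in bindings.items():
            self._saved.append((name, self._current.get(name, _MISSING)))
            self._current[name] = value
        try:
            yield self
        finally:
            # Restore in reverse order so nested bindings unwind correctly.
            for _ in bindings:
                name, old = self._saved.pop()
                if old is _MISSING:
                    del self._current[name]
                else:
                    self._current[name] = old


env = ShallowEnv(mood="calm")


def greet():
    # A lightweight procedure: 'mood' is resolved in the caller's dynamic
    # environment at call time, not where greet was defined.
    return "wave" if env.lookup("mood") == "calm" else "point"


if __name__ == "__main__":
    print(greet())          # wave
    with env.bind(mood="agitated"):
        print(greet())      # point
    print(greet())          # wave
```

The trade-off of shallow binding is that entering and leaving a binding costs a little extra work, in exchange for variable lookup that does not depend on how deeply procedures are nested.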

Research Agenda

This research focuses on situations in which people communicate richly through body language, such as parties, bar scenes, and meetings.

In particular, NYU-MRL is exploring how to convey peripheral awareness, approach/avoidance, “paying attention,” “listening,” etc. The lab also studies immersive scenarios involving two or more walls, to determine how simulated body language will help to convey the impression of various competing social or attention-getting activities.

Ultimately, NYU-MRL’s goal is to determine to what extent the mere rhythm of interpersonal interaction can be encoded, in order to convey the impression of social complexity. For example, could one structure entire narratives in this manner?

Assessment of Potential Future Impact

Consider this possible scenario: you send your “agent” to the Metaverse library to get some information. Along the way, the agent encounters your neighbor strolling the Metaverse. You’ve already told your agent that if she encounters this person, she must remind him of your lunch date tomorrow.

Alternatively, for networked video games, this NYU-MRL work demonstrates that it is feasible to generate fully 3D, interactive characters. This allows networked video games to break away from the current restrictive reliance on canned CD-ROM-based video footage and animation clips.

This project also shows that it is feasible, using current technology, to apply these techniques non-invasively. People can interact in their own homes with real-time personal agents who have convincing and interesting personalities and live behind wall-size screens. Such agents can be used for entertainment, information access, or simply for company.
