“ILMxLAB AUGMENTED REALITY EXPERIENCE” by Rasmussen, Wooley, Santos and Rose

Conference:

- SIGGRAPH 2016

E-Tech Type(s):

- Gaming and Entertainment

Title:

- ILMxLAB AUGMENTED REALITY EXPERIENCE

Description:

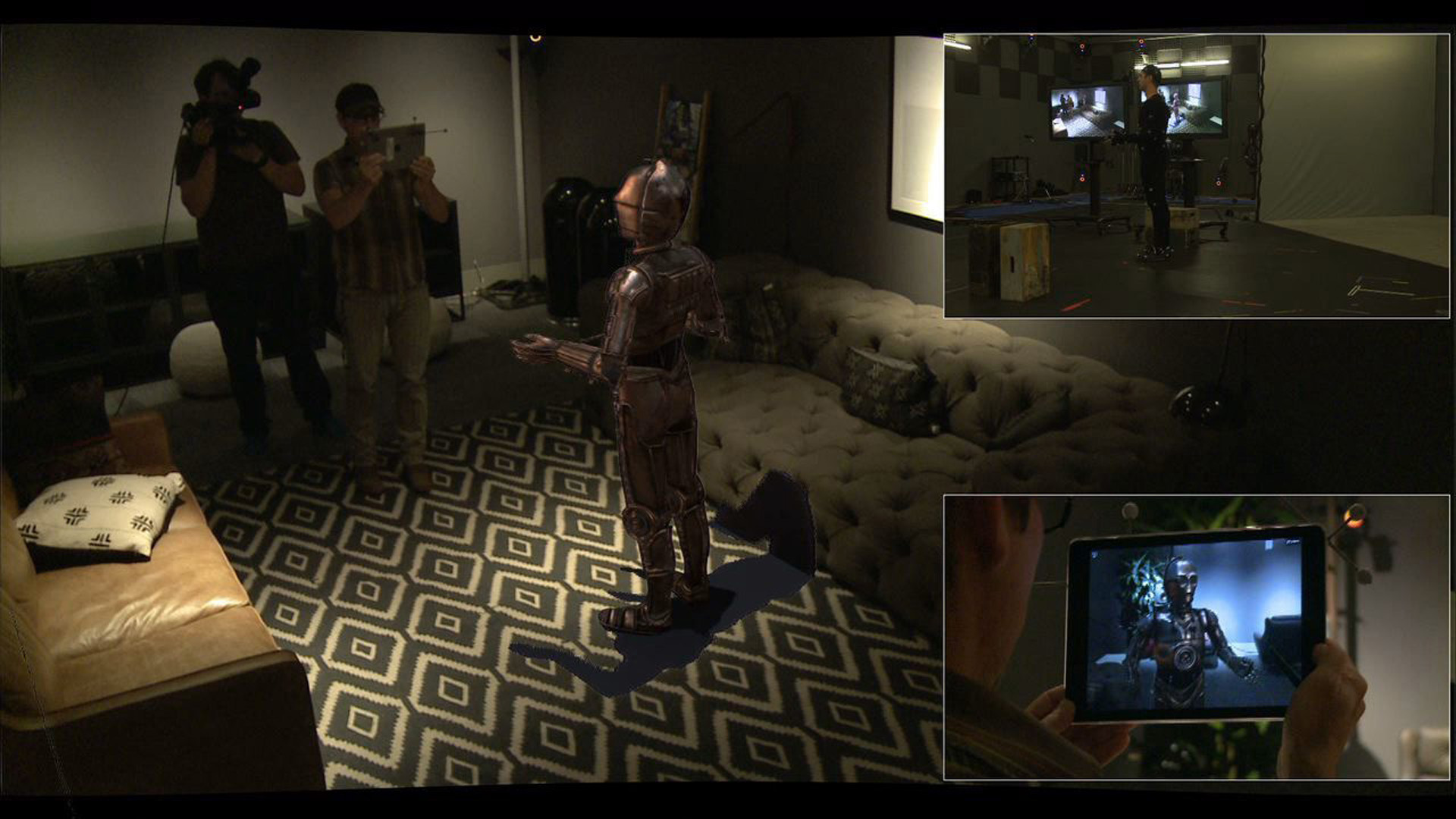

Industrial Light & Magic has developed an iPad app that composites real-time computer-generated imagery with live video from the iPad’s on-board camera. Using a handheld iPad instead of a VR headset, the system places digital characters in the user’s field of view and shows them within the real-world environment. The iPad’s position and orientation are tracked with the HTC Vive’s Valve Lighthouse system, and this pose supplies the camera perspective to the 3D engine. Other tracking systems, such as a Vicon motion-capture system, can also be used.
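
Turning a tracked pose into a “camera perspective” for a 3D engine usually means inverting the device’s camera-to-world transform to get a view matrix. The sketch below shows that step under stated assumptions: the tracker reports the iPad camera’s position and a unit quaternion (w, x, y, z) in a shared world frame, the camera looks down −Z (OpenGL-style), and any fixed offset between the tracked device and the iPad’s lens has already been applied. The function names are hypothetical and are not part of Zeno or the Lighthouse/OpenVR APIs.

```python
import math

def quat_to_rotation(w, x, y, z):
    """Convert a quaternion (w, x, y, z) to a row-major 3x3 rotation matrix."""
    n = math.sqrt(w * w + x * x + y * y + z * z)  # normalize defensively
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def view_matrix(position, orientation):
    """Build a 4x4 world-to-camera matrix from a tracked camera pose.

    position: camera position (px, py, pz) in world space (assumed input format).
    orientation: quaternion (w, x, y, z) for the camera-to-world rotation R.
    The view matrix inverts camera-to-world: rotation R^T, translation -R^T p.
    """
    r = quat_to_rotation(*orientation)
    rt = [[r[j][i] for j in range(3)] for i in range(3)]  # transpose == inverse for rotations
    t = [-sum(rt[i][k] * position[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [t[0]], rt[1] + [t[1]], rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]

def transform_point(m, p):
    """Apply a 4x4 affine matrix to a 3D point and return the 3D result."""
    return [sum(m[i][k] * p[k] for k in range(3)) + m[i][3] for i in range(3)]
```

For example, with the iPad at (0, 0, 5) and no rotation, the world origin lands at (0, 0, −5) in camera space, five units in front of the lens. A production system would also apply a projection matrix matched to the iPad camera’s field of view so the rendered characters line up with the video.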

ILM’s Zeno application framework generates the digital imagery. It supports high-quality animation and real-time rendering, and because it is integrated into ILM’s asset pipeline, it can import any character asset from that pipeline. The characters are rendered from the point of view of the tracked iPad so that they line up with the live camera image. Optionally, a live motion-capture performer can drive the digital character through a proprietary retargeting system.
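
The description does not say how or where the rendered characters are combined with the camera image. One common approach is the Porter-Duff “over” operator, sketched below for a single frame. It assumes each rendered pixel carries a premultiplied alpha (coverage) value and that the CG frame and camera frame have the same resolution; how such a matte would reach the iPad is not specified in the description, and the function names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]

def over(fg: RGBA, bg: RGB) -> RGB:
    """Porter-Duff 'over' for one pixel; fg colour is premultiplied by its alpha."""
    r, g, b, a = fg
    k = 1.0 - a  # how much of the camera pixel shows through
    return (r + k * bg[0], g + k * bg[1], b + k * bg[2])

def composite_frame(render: List[List[RGBA]], camera: List[List[RGB]]) -> List[List[RGB]]:
    """Layer a rendered CG frame (rows of RGBA) over a live camera frame (rows of RGB)."""
    if len(render) != len(camera) or any(len(a) != len(b) for a, b in zip(render, camera)):
        raise ValueError("render and camera frames must have the same dimensions")
    return [[over(f, c) for f, c in zip(frow, crow)] for frow, crow in zip(render, camera)]
```

Pixels the character fully covers (alpha 1) show only the render, empty pixels (alpha 0) show only the camera, and antialiased edges blend the two. Premultiplied alpha keeps the blend to one multiply-add per channel.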

The rendered image is encoded to H.264 using NVIDIA’s FBC technology, which takes advantage of the high-performance hardware video encoder on Kepler- and Maxwell-class NVIDIA GPUs. This dedicated hardware is faster than CUDA-based or CPU-based encoders and leaves the CUDA cores and CPU free for other Zeno operations. The encoded video is then streamed over Wi-Fi to the iPad.
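
Some rough arithmetic shows why the frames are compressed before going over Wi-Fi. The resolution, frame rate, pixel format, and target bitrate below are illustrative assumptions (1080p at 60 fps, 8-bit RGB, a 20 Mbit/s stream); the description does not state the values the system actually uses.

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bytes_per_pixel: int = 3) -> float:
    """Bitrate of uncompressed video in bits per second."""
    return width * height * bytes_per_pixel * 8 * fps

def compression_ratio(raw_bps: float, encoded_bps: float) -> float:
    """How many times smaller the encoded stream is than the raw frames."""
    return raw_bps / encoded_bps

# Assumed, illustrative parameters (not from the description).
raw = raw_bitrate_bps(1920, 1080, 60)
print(f"raw: {raw / 1e9:.2f} Gbit/s")                             # raw: 2.99 Gbit/s
print(f"ratio at 20 Mbit/s: {compression_ratio(raw, 20e6):.0f}:1")  # ratio at 20 Mbit/s: 149:1
```

At roughly 3 Gbit/s, uncompressed frames are far more than a Wi-Fi link can reliably sustain, so a compression ratio on the order of 100:1 or more is needed. Doing that encode in dedicated hardware, as the description notes, avoids spending CUDA cores or CPU time on it.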