“An Interactive Exploration of Computer Music Research” by Waxman


Conference:

- SIGGRAPH 1993

Entry Number: 33

Title:

- An Interactive Exploration of Computer Music Research

Program Title:

- Tomorrow's Realities

Collaborator(s):

- Jean-Baptiste Barrière

- Arnauld Soulard

- Atau Tanaka

- Institut de Recherche et Coordination Acoustique/Musique (IRCAM), Centre Georges Pompidou

Project Affiliation:

- IRCAM

Description:

The understanding of sound and music, essential aspects of the human experience, has progressed considerably in the last 20 years. This is due in part to the growth of computer music as a field of musical as well as scientific experimentation. With computers, composers can explore and manipulate sound and musical structure at both the micro and macro levels, and psychoacousticians can build models that help us understand cognitive and perceptual processes.

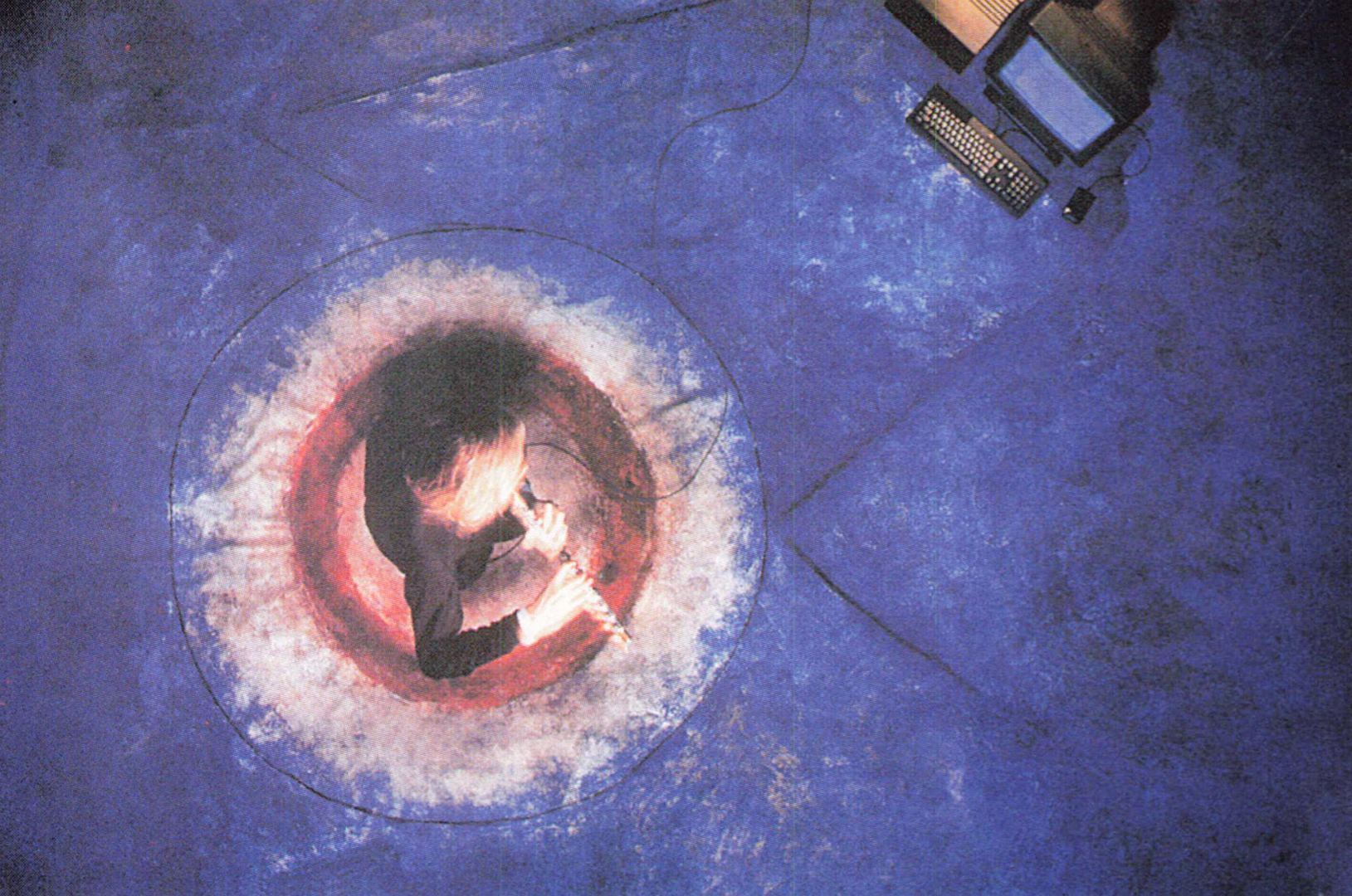

In our presentation, the visitor participates in a series of interactive situations based on work realized at the Institut de Recherche et Coordination Acoustique/Musique (IRCAM) of the Centre Georges Pompidou in Paris, a leading international research and production center for new music. The aim of these musical examples is to illustrate, in an inviting and game-like fashion, how the computer makes it possible to pursue three areas of ongoing research at IRCAM: psychoacoustics and the perception of musical pitch, rhythm, nuance, timbre and space; digital signal processing (DSP) as a means of sound synthesis and transformation; and compositional concepts and structural transformations.

The interactive setup serves the pedagogical aim of the examples. At the same time, the emphasis on real-time realization reflects the basic methodology of research at IRCAM, so the visitor discovers and learns in the same ways as our researchers and composers. The exhibit comprises a series of interactive “situations” that invite the visitor to explore the categories outlined above through a common interface scenario.

The Exhibit

The visitor is briefly introduced to the theme of each situation, is then presented with some pre-recorded examples to use as models, and is finally invited to explore on his or her own. S/he interacts with the system mainly by moving the screen cursor, by playing a real piano keyboard, or by speaking or singing into a microphone. The computer responds in real time by transforming the sound of the piano or the visitor’s voice and extending it with synthetic sounds. In some situations, the computer also controls the piano itself.

The front end, which handles user input, is the Max real-time environment on the Macintosh (originally developed at IRCAM and published by Opcode Systems). The back end, where sounds are transformed and synthesized in real time, is the IRCAM Signal Processing Workstation (ISPW), a hardware/software package (developed at IRCAM and distributed by Ariel) consisting of a series of dual Intel i860/Motorola DSP56000 cards and a real-time scheduling kernel.

The Musical Situations

■ Psychoacoustics and room acoustics:

In the first situation, the visitor explores audio techniques applicable to virtual reality by traveling through virtual spaces, moving the mouse around an architectural plan. S/he can choose among the sounds of footsteps, a looping melodic pattern, or his/her own voice, and hears a real-time simulation of the acoustical changes as s/he navigates through the space.
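The text does not say how the room simulation is computed. As a rough illustration only, the sketch below models two cues that change most obviously as a listener moves: the direct sound gets quieter and arrives later with distance, while a diffuse reverberant field stays roughly constant. The inverse-distance law, the 343 m/s speed of sound, and the hypothetical `critical_distance` parameter are assumptions of this sketch, not details of the IRCAM system.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed value)


def direct_path(source, listener, ref_distance=1.0):
    """Gain and delay (seconds) of the direct sound in a free-field model.

    Gain follows a 1/distance law, clamped to 1.0 inside ref_distance.
    """
    distance = math.hypot(source[0] - listener[0], source[1] - listener[1])
    gain = ref_distance / max(distance, ref_distance)
    delay = distance / SPEED_OF_SOUND
    return gain, delay


def wet_fraction(direct_gain, critical_distance=3.0):
    """Hypothetical reverberant share of the mix.

    The reverberant level is held constant at 1/critical_distance, so direct
    and reverberant levels are equal when the listener is critical_distance
    metres from the source (with the default ref_distance of 1.0).
    """
    reverb_gain = 1.0 / critical_distance
    return reverb_gain / (direct_gain + reverb_gain)


# Walk the listener away from a source at the origin.
for x in (1.0, 3.0, 9.0):
    g, d = direct_path((0.0, 0.0), (x, 0.0))
    print(f"x={x:4.1f} m  gain={g:.3f}  delay={d * 1000:.1f} ms  wet={wet_fraction(g):.2f}")
```

As the listener walks away, the direct gain drops and the reverberant share rises, which is the kind of change a visitor would hear while moving across the plan.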

The psychoacoustics situations help the visitor learn about auditory streaming and spectral fusion/fission. In streaming, the perception of a loop of notes with equal durations and a single pitch can be altered by changing the nuance (amplitude) of selected notes. Human perceptual processes group the notes according to their nuances, so the perceived rhythm changes even though the physical rhythm remains one of equal spacing and duration. In the situation on fusion and fission of sound spectra, a single sonic object splits into two discrete sounds when different vibrato is applied to its even and odd partials.
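The fusion/fission effect can be approximated with plain additive synthesis. The sketch below builds a harmonic tone and gives the odd and even harmonics independent vibrato. With identical settings the partials move together and tend to fuse; with different settings the even harmonics tend to separate out as a second sound, heard an octave up, since the even harmonics of a fundamental form a harmonic series on twice that fundamental. The sample rate, the 1/k amplitude rolloff, and the vibrato values are assumptions chosen for illustration.

```python
import math


def odd_even_vibrato_tone(f0=220.0, n_partials=10, duration=1.0, sr=8000,
                          odd_vibrato=(5.0, 0.0), even_vibrato=(6.0, 0.02)):
    """Additive harmonic tone with separate vibrato on odd and even harmonics.

    Each vibrato is (rate_hz, depth), where depth is the peak fractional
    frequency deviation. The fundamental (harmonic 1) counts as odd.
    Returns a list of samples normalized to stay within [-1, 1].
    """
    n_samples = int(duration * sr)
    phases = [0.0] * n_partials
    norm = sum(1.0 / k for k in range(1, n_partials + 1))
    out = []
    for i in range(n_samples):
        t = i / sr
        sample = 0.0
        for idx in range(n_partials):
            k = idx + 1
            rate, depth = odd_vibrato if k % 2 else even_vibrato
            # Instantaneous frequency of harmonic k under its group's vibrato.
            freq = k * f0 * (1.0 + depth * math.sin(2.0 * math.pi * rate * t))
            sample += math.sin(phases[idx]) / k  # 1/k amplitude rolloff
            # Accumulate phase so the frequency modulation stays continuous.
            phases[idx] += 2.0 * math.pi * freq / sr
        out.append(sample / norm)
    return out


fused = odd_even_vibrato_tone(even_vibrato=(5.0, 0.0))  # no vibrato on any partial
split = odd_even_vibrato_tone()                         # only even partials wobble
```

Accumulating phase sample by sample, rather than computing `sin(2*pi*freq*t)` directly, avoids the phase jumps that a time-varying frequency would otherwise cause.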

■ Signal processing, sound synthesis, and transformation:

The DSP situations give examples of real-time sonic transformations and draw parallels with similar effects in the graphics domain. One situation demonstrates time stretching and compression, applied either to prerecorded sounds or to the visitor’s voice. The source material can be transposed without altering its timbre, or shortened or lengthened in time without changing its pitch. Layering several of these transformations produces textural effects based on the visitor’s voice.
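The text does not specify the algorithm used on the ISPW. As a simpler stand-in, the sketch below is a naive overlap-add (OLA) time stretch: short windowed grains are read from the input at one hop size and written to the output at another, so the duration changes while each grain keeps its original pitch. Plain OLA introduces audible phase artifacts on tonal material; refinements such as SOLA or the phase vocoder address that. The frame and hop sizes are assumptions.

```python
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def ola_time_stretch(x, stretch, frame=256, hop_out=64):
    """Naive overlap-add time stretch of a list of samples.

    stretch > 1 lengthens the sound, stretch < 1 shortens it. Grains are
    read every hop_out / stretch input samples and written every hop_out
    output samples, so the pitch within each grain is unchanged.
    """
    window = hann(frame)
    hop_in = hop_out / stretch
    n_frames = max(1, int((len(x) - frame) / hop_in) + 1)
    out_len = (n_frames - 1) * hop_out + frame
    out = [0.0] * out_len
    weight = [0.0] * out_len
    for m in range(n_frames):
        start_in = int(round(m * hop_in))
        start_out = m * hop_out
        for i in range(frame):
            j = start_in + i
            if j >= len(x):
                break
            out[start_out + i] += x[j] * window[i]
            weight[start_out + i] += window[i]
    # Divide by the summed window so overlapping grains do not change the level.
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(out, weight)]


sr = 8000
tone = [math.sin(2.0 * math.pi * 440.0 * n / sr) for n in range(sr)]  # 1 s at 440 Hz
longer = ola_time_stretch(tone, 2.0)   # roughly 2 s, still 440 Hz
shorter = ola_time_stretch(tone, 0.5)  # roughly 0.5 s, still 440 Hz
```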

A dramatic audio process with a close parallel in computer graphics is timbral interpolation, the computer music equivalent of morphing. Gradual or rapid shifts of spectral content from one sound to another can be realized, all under the visitor’s interactive control. For example, the visitor can record his/her own voice and have the computer interpolate between it and a trumpet sound. The visitor can also select two sounds from a menu and hear timbral interpolations between them.
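One simple way to think about timbral interpolation is in terms of partial lists: each sound is reduced to a set of (frequency, amplitude) pairs, and intermediate timbres come from blending the lists. The sketch below matches partials by index, interpolates amplitudes linearly and frequencies on a log scale, and pads the shorter list with silent partials. These choices and the example spectra are assumptions for illustration; the text does not describe IRCAM's analysis or matching method. Resynthesizing each blended list with a bank of oscillators would produce the audible morph.

```python
import math
from typing import List, Tuple

Partial = Tuple[float, float]  # (frequency in Hz, linear amplitude)


def interpolate_partials(a: List[Partial], b: List[Partial],
                         alpha: float) -> List[Partial]:
    """Blend two partial lists; alpha=0 gives a, alpha=1 gives b."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    result = []
    for i in range(max(len(a), len(b))):
        # A partial missing from one sound is treated as silent at the
        # other sound's frequency, so it fades in or out without gliding.
        fa, amp_a = a[i] if i < len(a) else (b[i][0], 0.0)
        fb, amp_b = b[i] if i < len(b) else (a[i][0], 0.0)
        freq = math.exp((1.0 - alpha) * math.log(fa) + alpha * math.log(fb))
        amp = (1.0 - alpha) * amp_a + alpha * amp_b
        result.append((freq, amp))
    return result


# Hypothetical spectra: a voice-like vowel and a brighter, trumpet-like tone.
voice = [(220.0, 1.0), (440.0, 0.5), (660.0, 0.1)]
trumpet = [(220.0, 0.4), (440.0, 0.8), (660.0, 0.7), (880.0, 0.6)]

for alpha in (0.0, 0.5, 1.0):
    blended = interpolate_partials(voice, trumpet, alpha)
    print(alpha, [(round(f), round(amp, 2)) for f, amp in blended])
```

Sweeping `alpha` slowly gives a gradual morph; jumping it gives the rapid shifts mentioned above.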

■ Composition and musical structure manipulation:

Finally, a compositional situation shows the visitor how these computer processes can be applied at the macro-structural level. S/he can input a theme from which the computer generates an automatic improvisation, and exercises parametric control over the thematic development. Possible transformations include orchestration, rhythmic changes, and melodic variation. The user can also apply the micro-level audio effects from the earlier situations here to create his/her own “piece.”

Concepts introduced above can also be applied at this level. The visitor can apply the notion of interpolation to rhythm or melody: one rhythmic pattern is given as the point of departure and another as the destination, and the computer repeats the first pattern while gradually transforming it into the second. Finally, all interpolations, micro and macro, can be combined into a super-interpolation across multiple dimensions.
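Rhythmic interpolation of this kind can be sketched as a per-onset blend between two patterns. The sketch assumes both patterns have the same number of onsets, expressed in beats within one bar, and moves each onset linearly from its starting to its ending position over a chosen number of repetitions. How the exhibit handled patterns with different onset counts, or whether it used nonlinear curves, is not stated. The same function also works on a list of pitches to interpolate a melody (the results would need rounding to the nearest semitone to be playable on the piano).

```python
from typing import List


def interpolate_pattern(start: List[float], end: List[float],
                        repetitions: int) -> List[List[float]]:
    """Return `repetitions` patterns morphing linearly from start to end.

    The first pattern equals start and the last equals end. Values can be
    onset times (in beats) or, for melodic interpolation, pitches.
    """
    if len(start) != len(end):
        raise ValueError("patterns must have the same number of events")
    if repetitions < 2:
        raise ValueError("need at least two repetitions")
    patterns = []
    for r in range(repetitions):
        alpha = r / (repetitions - 1)
        patterns.append([(1.0 - alpha) * s + alpha * e for s, e in zip(start, end)])
    return patterns


straight = [0.0, 1.0, 2.0, 3.0]     # four even beats
syncopated = [0.0, 1.5, 2.0, 3.5]   # hypothetical off-beat destination
for bar in interpolate_pattern(straight, syncopated, 5):
    print(bar)
```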

Interactive Tour of IRCAM

A second computer in the exhibit runs a multimedia tour that introduces visitors to IRCAM. The visitor tours the building and, by entering each department, learns about the research projects under way at IRCAM. Finally, on entering the Espace de Projection, our concert hall, s/he can audition excerpts from various musical pieces composed at IRCAM.

Conclusion

This exhibit demonstrates interactivity and virtual reality in the audio domain through examples that are themselves interactive. By exploring areas such as psychoacoustics interactively, the visitor learns by actually applying his/her own perceptual processes. We feel that this kind of involvement stimulates learning and helps the visitor grasp, in a humanistic way, subjects that are otherwise complex and technical.

The interactive form of presentation is particularly apt for presenting the work of IRCAM, because the research itself is carried out with the same techniques used to create the presentation. The visitor, then, shares in the exploration process: instead of just reading about it, s/he actively participates and makes discoveries using the same tools we use at IRCAM.

Finally, by presenting signal processing techniques that have interesting parallels in computer graphics, we hope to give the visitor a familiar handle with which to explore musical applications of computer technology. By presenting our work at SIGGRAPH, we hope to introduce the computer graphics community to new developments in computer music and to provoke discussion about how our respective disciplines might come closer together.

Hardware

■ Apple Macintosh Quadra 950

■ 16″ RGB display, RasterOps and Digidesign cards

■ Apple Macintosh IIci w/16″ RGB display

■ NeXTcube 040 computer

■ Ariel IRCAM Signal Processing Workstation (ISPW)

Software

■ IRCAM NeXT Max

■ Opcode/IRCAM Macintosh Max

■ MacroMind Director