“ALIVE: An Artificial Life Interactive Video Environment” by Maes

Conference:

- SIGGRAPH 1993

Entry Number: 26

Title:

- ALIVE: An Artificial Life Interactive Video Environment

Program Title:

- Tomorrow's Realities

Project Affiliation:

- MIT Media Lab

Description:

The ALIVE interactive installation brings together the latest technological breakthroughs in vision-based gesture recognition, physical modeling, and behavior-based computer animation. The user experiences a physically based computer graphics environment and can interact with the artificial creatures that inhabit this world using simple, natural gestures.

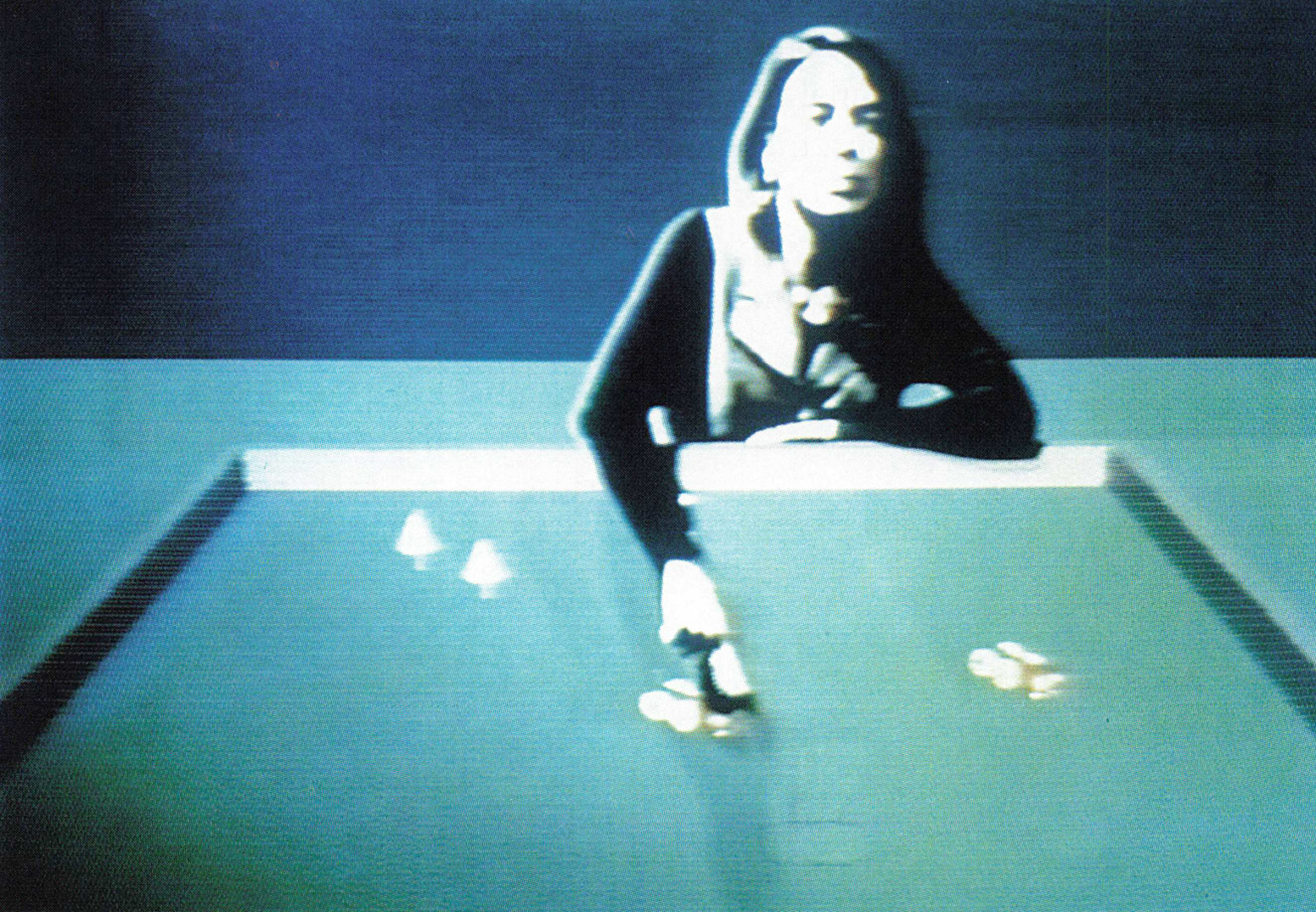

More specifically, chroma-keying technology is used to overlay the image of the user on top of a real-time interactive computer animation. The composited image is displayed on a large screen (10 feet x 10 feet) that faces the user, so the effect is that of looking into a “magical mirror.” Using natural gestures interpreted by a vision-based pattern recognition system, the user can interact and communicate with the animated creatures in the mirror and thereby affect their behavior.
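
ALIVE performed this compositing with a dedicated chroma-keying system (see the hardware list below). As a minimal software sketch of the same per-pixel rule, assuming a saturated blue backdrop behind the user and a simple per-channel tolerance (both hypothetical parameters, as are all names here), the compositor keeps every camera pixel that does not match the backdrop color and shows the rendered animation everywhere else:

```python
# Hypothetical sketch of a per-pixel chroma key; the blue key color and the
# tolerance are assumptions, not parameters of the ALIVE hardware keyer.
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), 0-255 per channel
Image = List[List[Pixel]]      # rows of pixels


def is_key_color(pixel: Pixel, key: Pixel, tolerance: int) -> bool:
    """True if every channel of `pixel` lies within `tolerance` of the key color."""
    return all(abs(p - k) <= tolerance for p, k in zip(pixel, key))


def chroma_key(camera: Image, graphics: Image,
               key: Pixel = (0, 0, 255), tolerance: int = 40) -> Image:
    """Composite the user over the animation.

    Camera pixels that match the key (backdrop) color are replaced by the
    rendered graphics; all other camera pixels (the user) stay on top.
    """
    if len(camera) != len(graphics) or any(
            len(c) != len(g) for c, g in zip(camera, graphics)):
        raise ValueError("camera and graphics frames must have the same size")
    return [
        [gfx if is_key_color(cam, key, tolerance) else cam
         for cam, gfx in zip(cam_row, gfx_row)]
        for cam_row, gfx_row in zip(camera, graphics)
    ]
```

A production keyer would typically work in a chrominance space and soften the matte edges; the hard threshold here only illustrates the decision made at each pixel.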

The goal of the ALIVE system is to demonstrate what recent research achievements in vision-based gesture recognition, the modeling of autonomous agents or “artificial creatures,” and physically based modeling have made possible. The ALIVE system represents one of the first (if not the first) artificial reality systems in which users can interact and communicate with semi-intelligent autonomous agents using natural gestures.

One of the component technologies demonstrated in the ALIVE system is a vision-based algorithm that tracks hands and faces and can recognize the spatiotemporal patterns or gestures a user may perform (Darrell and Pentland, 1993). During a short training phase, several examples of the pattern or gesture are presented. The system automatically builds a view-based representation of the object being tracked (for example, a hand) and uses the resulting search scores over time to store and match gestures. The system relies on correlation search hardware for real-time performance.
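
The description leaves the matching step abstract, and the original system ran its correlation search on dedicated hardware. A minimal software sketch, under stated assumptions: each stored view is a flattened grayscale patch of the tracked hand; every incoming frame is scored against all views with normalized cross-correlation, giving one score vector per frame; and each training example of a gesture is stored as the resulting sequence of score vectors. New input is matched with dynamic time warping (DTW), which tolerates gestures performed faster or slower than in training. DTW is our choice of matcher here and should not be read as the exact procedure of Darrell and Pentland (1993); all function names are hypothetical.

```python
# Hypothetical sketch: view-based correlation scores per frame, with gestures
# matched by dynamic time warping (an assumed matcher, not the published one).
import math
from typing import Dict, List, Sequence

Patch = Sequence[float]          # flattened grayscale image patch
Trajectory = List[List[float]]   # one score vector per video frame


def ncc(a: Patch, b: Patch) -> float:
    """Normalized cross-correlation of two equal-length patches, in [-1, 1]."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0


def score_trajectory(frames: List[Patch], views: List[Patch]) -> Trajectory:
    """Score every frame against every stored view of the tracked object."""
    return [[ncc(frame, view) for view in views] for frame in frames]


def dtw(s: Trajectory, t: Trajectory) -> float:
    """Dynamic time warping distance between two score trajectories."""
    inf = float("inf")
    cost = [[inf] * (len(t) + 1) for _ in range(len(s) + 1)]
    cost[0][0] = 0.0
    for i in range(1, len(s) + 1):
        for j in range(1, len(t) + 1):
            d = math.dist(s[i - 1], t[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[len(s)][len(t)]


def classify(frames: List[Patch], views: List[Patch],
             gestures: Dict[str, List[Trajectory]]) -> str:
    """Return the gesture whose closest training example best matches the input."""
    observed = score_trajectory(frames, views)
    return min(gestures,
               key=lambda name: min(dtw(observed, ex) for ex in gestures[name]))
```

For instance, with two hypothetical views, a pattern and its inverse standing in for an "open" and a "closed" hand, an input that lingers on the first view for an extra frame before switching still matches the "open" gesture, because DTW absorbs the repeated frame.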

The second component technology is a toolkit and set of algorithms that make it possible to create “autonomous goal-seeking agents” by specifying their sensors, motivations, and repertoire of behaviors (Maes, 1991; 1991b; 1993). For the ALIVE creatures, for example, the sensor data include the gestures made by the user and the positions of the user’s hands, as well as the position and behavior of other creatures in the world. Motivations (or goals) include the desire for creatures to stay close to one another, fear of unknown things or people, and curiosity. Examples of behaviors include moving toward the user, moving away from the user, and tracking the user’s hand. Given this information, the toolkit produces an agent that autonomously decides what action to take next based on its current sensor data and its motivational state. The model also incorporates a learning algorithm that lets the agent learn from experience and improve its goal-seeking behavior over time.
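
The action-selection step can be illustrated with a deliberately simplified sketch. It is not the bottom-up behavior-selection mechanism of Maes (1991), which spreads activation between behaviors, and it omits the learning algorithm; it only shows the idea of combining sensor data with motivational state. Each hypothetical behavior serves one motivation and has a releaser function that rates how relevant the current sensor readings make it; the creature performs the behavior with the highest product of the two, or nothing if no activation clears a threshold. The sensor names, motivation names, normalization to the range 0..1, and threshold are all assumptions for illustration.

```python
# Hypothetical sketch: simplified winner-take-all action selection,
# not the spreading-activation network described in Maes (1991).
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]      # assumed normalized readings, 0..1
Motivations = Dict[str, float]  # assumed motivation levels, 0..1


@dataclass
class Behavior:
    name: str
    motivation: str                       # the motivation this behavior serves
    releaser: Callable[[Sensors], float]  # relevance of current sensor data, 0..1


def select_action(behaviors: List[Behavior], sensors: Sensors,
                  motivations: Motivations, threshold: float = 0.1) -> Optional[str]:
    """Return the behavior with the highest motivation x relevance, or None."""
    best_name: Optional[str] = None
    best_value = threshold
    for b in behaviors:
        value = motivations.get(b.motivation, 0.0) * b.releaser(sensors)
        if value > best_value:
            best_name, best_value = b.name, value
    return best_name


# Hypothetical repertoire loosely following the examples in the text.
EXAMPLE_BEHAVIORS = [
    Behavior("move_towards_user", "curiosity",
             lambda s: max(0.0, 1.0 - s.get("user_distance", 1.0))),
    Behavior("move_away_from_user", "fear",
             lambda s: s.get("hand_raised", 0.0)),
]
```

With a nearby user (`user_distance` 0.2), curiosity 0.5, and fear 0.9, a raised hand makes the creature back away (0.9 beats 0.4); lower the hand and the same creature approaches the user instead.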

The third component technology is ThingWorld, a simulation system that uses modal dynamics for high-performance simulation of non-rigid multibody interactions (Pentland and Williams, 1989; Pentland et al., 1990; Sclaroff and Pentland, 1991). The version demonstrated here is Distributed ThingWorld (Friedmann and Pentland, 1992), which uses several novel strategies for allocating processing among networked computers to achieve a nearly linear increase in efficiency as a function of the number of processors.
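
Modal dynamics rests on a change of basis. If the nodal equations of motion M u'' + C u' + K u = f are rewritten in terms of the object's vibration modes, u = Φ q, and the damping is assumed to be diagonalized by the same modes, the system splits into independent scalar equations m_i q_i'' + c_i q_i' + k_i q_i = f_i, with modal forces f_i = φ_iᵀ f. Each mode can then be integrated on its own, and only a few low-frequency modes need be kept. The sketch below assumes the mode shapes and modal parameters are already available (how ThingWorld computes them is not described here) and uses semi-implicit Euler as a stand-in integrator; all names are hypothetical.

```python
# Hypothetical sketch of modal-dynamics time stepping; mode shapes and modal
# parameters are assumed precomputed, and the integrator is our own choice.
from dataclasses import dataclass
from typing import List


@dataclass
class Mode:
    mass: float       # generalized (modal) mass m_i
    damping: float    # modal damping c_i (assumed diagonalized by the modes)
    stiffness: float  # modal stiffness k_i
    q: float = 0.0    # modal amplitude
    v: float = 0.0    # modal velocity


def modal_forces(shapes: List[List[float]], nodal_forces: List[float]) -> List[float]:
    """Project nodal forces onto each mode shape: f_i = phi_i^T f."""
    return [sum(s * f for s, f in zip(shape, nodal_forces)) for shape in shapes]


def step_modes(modes: List[Mode], forces: List[float], dt: float) -> None:
    """Advance each decoupled equation m q'' + c q' + k q = f by one semi-implicit Euler step."""
    for mode, f in zip(modes, forces):
        accel = (f - mode.damping * mode.v - mode.stiffness * mode.q) / mode.mass
        mode.v += dt * accel   # update velocity first...
        mode.q += dt * mode.v  # ...then position with the new velocity


def displacement(shapes: List[List[float]], modes: List[Mode]) -> List[float]:
    """Reconstruct nodal displacements u = Phi q (one shape list per mode)."""
    n_nodes = len(shapes[0])
    return [sum(shape[n] * m.q for shape, m in zip(shapes, modes)) for n in range(n_nodes)]
```

Because the modes never interact, updating them is embarrassingly parallel and dropping high-frequency modes simply removes terms from the sums; under a constant load each damped mode settles at q_i = f_i / k_i.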

The relation of the ALIVE installation to the theme of the Tomorrow's Realities show is indirect. The installation makes users and viewers aware of what the latest techniques make possible, and it does so through an entertaining and evocative interactive demonstration. In addition to the demonstration, informational posters describe the technology used and reflect on its potential impact on education, the workplace, training, and entertainment.

Hardware

■ Sun IPX workstation w/ Cognex VisionProcessor

■ Silicon Graphics Indigo w/ Elan board

■ 10 feet x 10 feet backlit screen

■ Color camera (for chroma-keying and gesture recognition)

■ Light valve

■ Chroma-keying system

Software

■ All software is written by the contributors in C and C++. We employ SGI’s Inventor and GL software packages.

References:

■ Darrell, T., and Pentland, A. P., 1993. Space-Time Gestures, IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, June 1993.

■ Friedmann, M., and Pentland, A., 1992. Distributed Physical Simulation, Eurographics Workshop on Physically Based Modeling, Cambridge, England, August 1992.

■ Maes, P., 1991. A Bottom-Up Mechanism for Behavior Selection in an Artificial Creature. In: From Animals to Animats, J.A. Meyer and S. Wilson (editors). MIT Press.

■ Maes, P., 1991b. Designing Autonomous Agents, MIT Press.

■ Maes, P., 1993. Modeling Artificial Creatures, Proceedings of the IMAGINA conference ’93, February 1993.

■ Pentland, A., and Williams, J., 1989. Good Vibrations: Modal Dynamics for Graphics and Animation, ACM Computer Graphics, Vol. 23, No. 4, pp. 215-222, August 1989.

■ Pentland, A., Essa, I., Friedmann, M., Horowitz, B., and Sclaroff, S., 1990. The ThingWorld Modeling System: Virtual Sculpting by Modal Forces, ACM Computer Graphics, Vol. 24, No. 2, pp. 143-144, June 1990.

■ Sclaroff, S., and Pentland, A., 1991. Generalized Implicit Functions for Computer Graphics, ACM Computer Graphics, Vol. 25, No. 2, pp. 247-250.