Qinyuan Liu: Let’s Chat Like This


Artist(s):

- Qinyuan Liu

Title:

- Let's Chat Like This

Exhibition:

- SIGGRAPH Asia 2020: Untitled & Untied




Category:

Artist Statement:

Summary

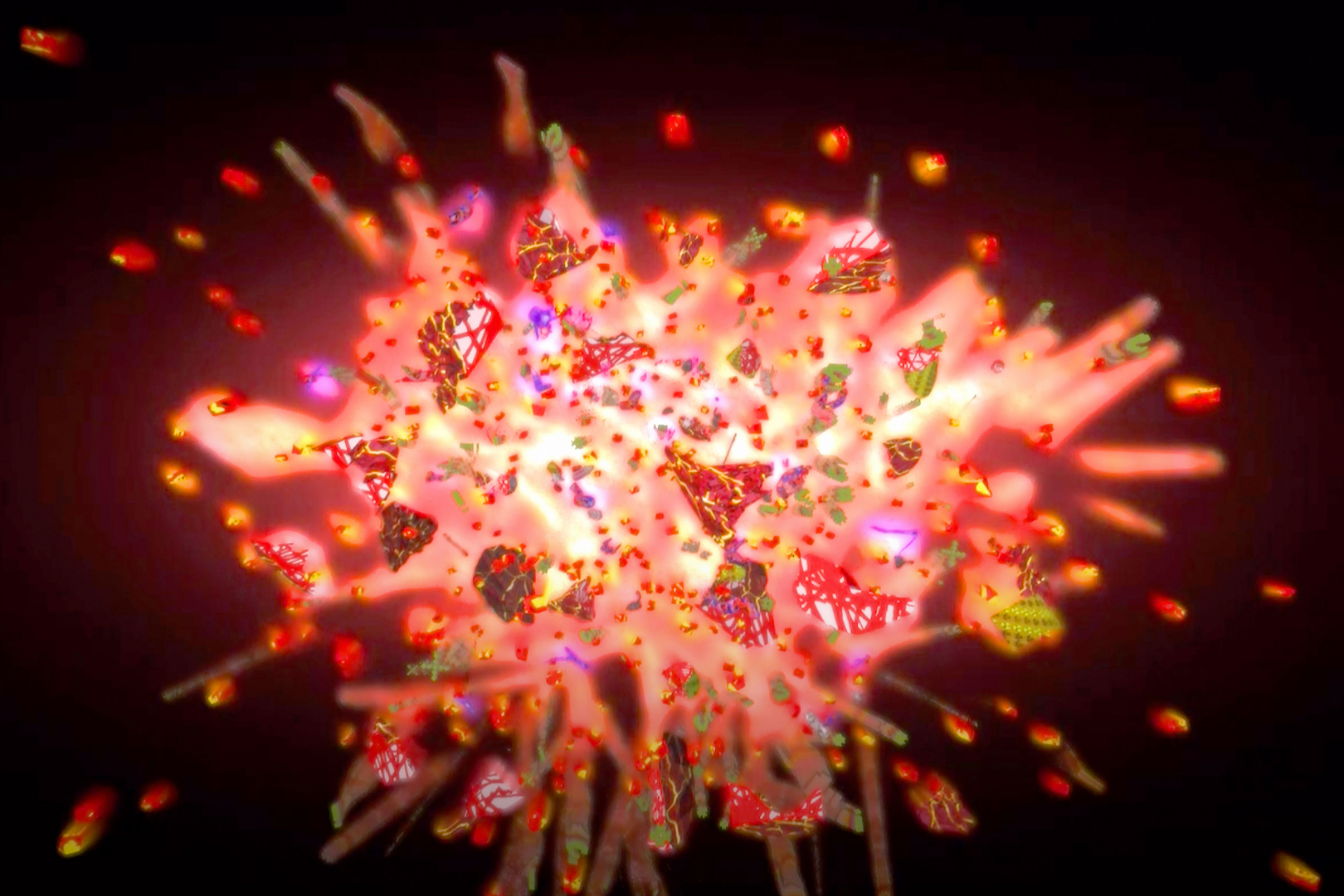

Let’s Chat Like This is an interactive system that lets two people observe each other’s moods by interacting with a shared, interactively generated image. The moving image changes according to both people’s facial expressions.

Abstract

Let’s Chat Like This is an interactive system that lets two people observe each other’s moods by interacting with a shared, interactively generated image. The moving image changes according to both people’s facial expressions. Unlike traditional forms of communication, Let’s Chat Like This focuses on the emotional side of communication. It visualizes the complexity of human emotion and encourages emotional communication in a creative, nonverbal way. When experiencing this work, the two people’s emotions are bound together in the same moving image, which changes depending on their moods. As each person becomes aware of their own current mood as well as the other’s, the intimacy and empathy between them increase.

This is not only a “social distancing” art installation that helps us connect emotionally during the COVID-19 pandemic, but also my hypothesis of what future emotional communication will be like. I hope this artwork can evoke deep thinking and maybe cheer people up in this challenging time.

Technical Information:

I built the 3D visual elements in Maya and ZBrush, then imported them into Unity and connected them to face data captured from Zoom meetings. The face data is analyzed by a facial expression recognition program in Processing.
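
As a rough illustration of the idea of driving one shared image from two faces, the sketch below blends two participants' expression scores into a single set of visual parameters. It is not taken from the artwork: the emotion labels, the score format, the `VisualParams` fields, and the per-emotion target values are all hypothetical, and Python stands in here for the Processing and Unity code the installation actually uses.

```python
from dataclasses import dataclass

# Hypothetical emotion labels; the artwork's real categories are not documented.
EMOTIONS = ("happy", "sad", "angry", "surprised", "neutral")


@dataclass
class VisualParams:
    """Hypothetical controls for the shared moving image, each in 0..1."""
    warmth: float
    speed: float
    turbulence: float


# Hypothetical look for each emotion (illustrative values only).
TARGETS = {
    "happy": VisualParams(warmth=0.9, speed=0.7, turbulence=0.3),
    "sad": VisualParams(warmth=0.2, speed=0.2, turbulence=0.1),
    "angry": VisualParams(warmth=1.0, speed=0.9, turbulence=0.9),
    "surprised": VisualParams(warmth=0.6, speed=1.0, turbulence=0.6),
    "neutral": VisualParams(warmth=0.5, speed=0.4, turbulence=0.2),
}


def normalize(scores: dict) -> dict:
    """Turn raw non-negative scores into weights that sum to 1."""
    clipped = {e: max(scores.get(e, 0.0), 0.0) for e in EMOTIONS}
    total = sum(clipped.values())
    if total == 0:
        # No detectable expression: fall back to fully neutral.
        return {e: (1.0 if e == "neutral" else 0.0) for e in EMOTIONS}
    return {e: v / total for e, v in clipped.items()}


def blend(person_a: dict, person_b: dict) -> VisualParams:
    """Average both people's emotion weights, then mix the target looks."""
    wa, wb = normalize(person_a), normalize(person_b)
    mix = {e: 0.5 * (wa[e] + wb[e]) for e in EMOTIONS}
    return VisualParams(
        warmth=sum(mix[e] * TARGETS[e].warmth for e in EMOTIONS),
        speed=sum(mix[e] * TARGETS[e].speed for e in EMOTIONS),
        turbulence=sum(mix[e] * TARGETS[e].turbulence for e in EMOTIONS),
    )


if __name__ == "__main__":
    # One participant looks mostly happy, the other mostly sad.
    print(blend({"happy": 0.8, "neutral": 0.2}, {"sad": 0.7, "neutral": 0.3}))
```

Averaging the two weight vectors gives both participants equal influence over the image, which is one simple way to read "bound together"; the installation may combine the two moods quite differently.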