Kyung Chul Lee, Eun Young Lee, Joon Seok Moon, Ji Hun Jung, Hye Yun Park, Hyun Jean Lee, Seung Ah Lee: PLANTEXT

Artist(s):

Title:

- PLANTEXT

Exhibition:

- SIGGRAPH Asia 2020: Untitled & Untied

Category:

Artist Statement:

Summary

What would happen if plants could talk, see, and sense as humans do? Based on this imagination, we create an artistic interpretation of the humanization of plants with modern technology to encourage people to think of plants as dynamic living beings.

Abstract

We create an artistic interpretation of the humanization of plants with modern technology to encourage people to think of plants as living beings. We imagine what would happen if plants could talk, see, and sense as humans do. Based on this imagination, we give each plant a character and exaggerate its senses by adding electronic devices with text-to-speech (TTS) synthesis and physics-based visual processing.

The cultural and historical background of each plant is told in a different synthesized human voice. Since all plants have different backgrounds, we use five different people’s voices to create artificial voices with a deep learning network. Each voice narrates the history that links the plant to human culture, so people can understand the story the plants share with human beings. Additionally, we embody the plants’ vision by applying image processing to images captured by a miniaturized camera affixed to a leaf. Since human vision is a reaction to light, we imagined that plants can see their surroundings through their leaves, where most of the light-plant interaction of photosynthesis occurs. Based on physics, we modeled light propagation from surrounding objects to the leaf surface and on to the granum, which is responsible for photosynthesis in the plant. By capturing images of the leaf surface with a microscope and incorporating them into the image processing, we visualize the sight of plants. At the same time, the plant’s electrical signal is measured through an electrode connected to a miniaturized computer (Raspberry Pi), and the audio-visual output is distorted according to the electric potential when the plant is touched. Our audio-visual output may enable users to think of plants as dynamic living beings and thus to understand the plants’ context more deeply.

Nowadays, people grow plants anywhere, even inside buildings, and take comfort in their presence. People usually think of plants as static objects, but plants do move and respond to their surrounding environment in real time; they are just too slow for us to notice. Plants have also existed alongside human beings for thousands of years. As a result, plant ecology and even plants’ biological evolution are closely related to human culture. However, since their reactions and ways of communicating differ greatly from ours, it is hard for people to understand their biological and ecological backgrounds from their appearance alone.

Our work is a bio-interactive installation project built on the idea that what we cannot see is not necessarily nonexistent; we exaggerate the plants’ senses with modern technology so that users can think of the plant as a living being. By combining speech synthesis and physics-based image processing on a single-board computer, researchers from different backgrounds (electronic engineering, computer science, media art, and visual art) designed a biology-computer hybrid installation.

Interestingly, from the tone and pitch of the synthetic voice to the dynamic range of the measured electric potential to the visual output, the responses of the five plants are all different. From these differences, users may sense the diversity of plant species. Furthermore, with the TTS synthesis system, users can hear a synthetic human voice, but if they listen carefully, they can recognize that the voices are not human. Through this error, our work may let users feel the homogeneous yet disordered nature of living beings. Given this diversity and the errors that arise from modern technology, we think our work suits the SIGGRAPH Asia 2020 theme of “post-algorithm”.

Technical Information:

Text to Speech (TTS) Synthesis

– In this project, we used Google’s Tacotron 1, an end-to-end TTS synthesis system based on a sequence-to-sequence model with an attention mechanism. We implemented the TTS system on top of a single-speaker model pre-trained on speech data from a Korean female speaker. The training data is a corpus of 691 Korean sentences of 7 to 10 words each, together with WAV recordings of those sentences. Adobe Audition was used to join the synthesized voice clips produced by the trained model into a single audio file.

Physics-based Visual Processing

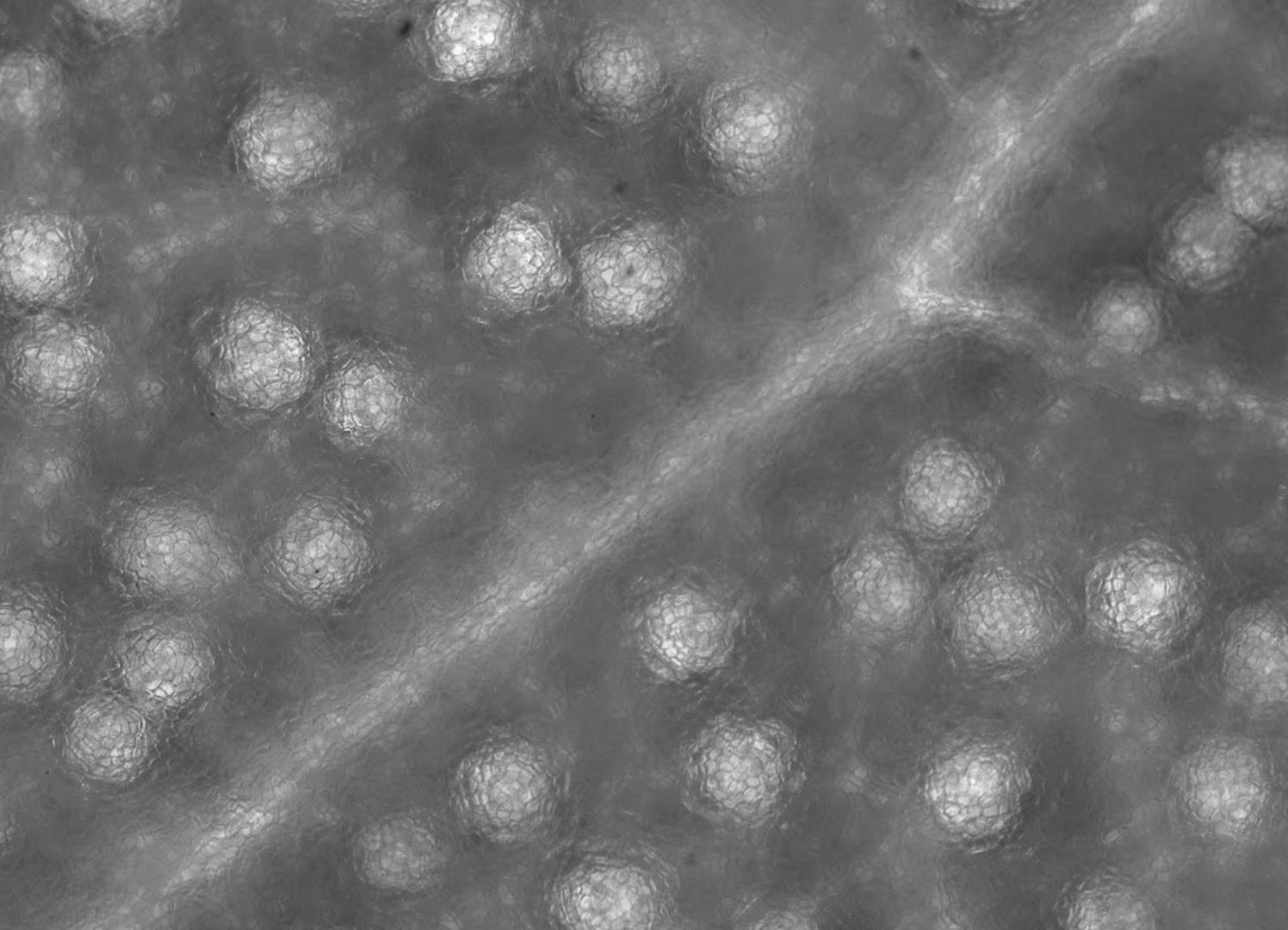

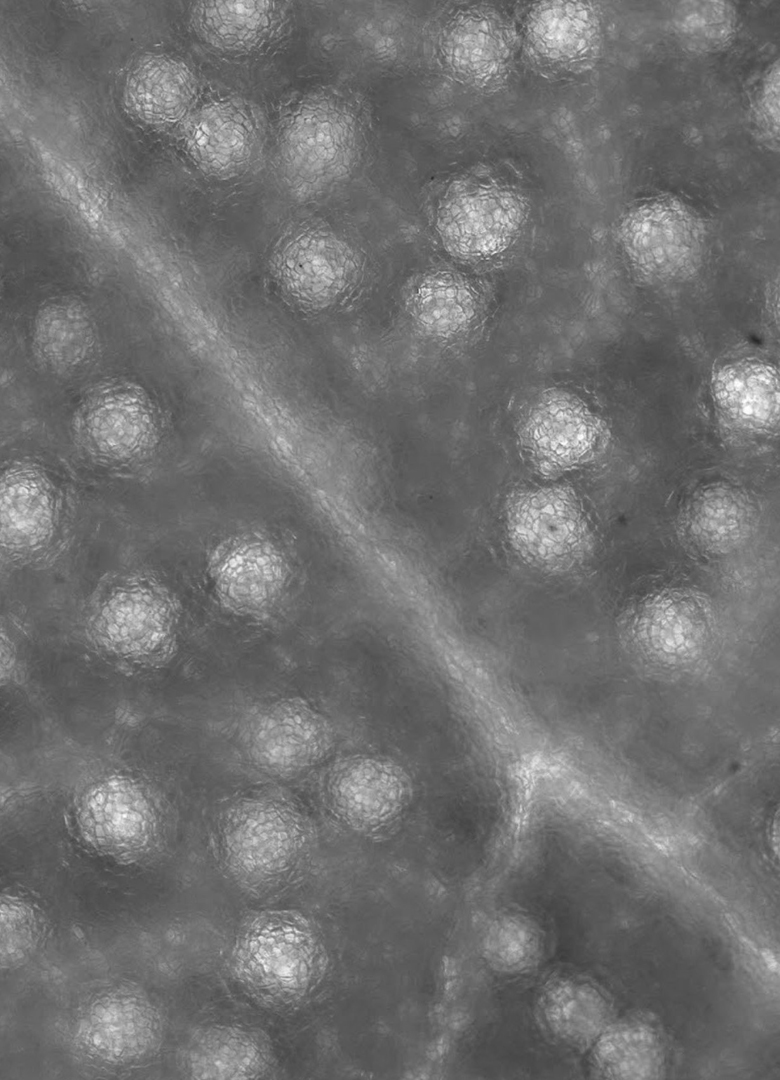

– Based on wave optics, we modeled light propagation from surrounding objects to the leaf surface and on to the granum, which is responsible for photosynthesis in the plant’s leaf. Much research has shown that the surface structures of leaves resemble microlens arrays that effectively gather light energy onto the granum, and that every plant has a different surface structure. By capturing images of the plants’ surface structures with a microscope and incorporating them into the image processing, we visualize the sight of plants. Using the shift invariance of the imaging system, the light field incident on the granum can be modeled as a convolution between the object scene and the impulse response (point spread function, PSF) of the leaf’s microlens array structures. By applying this physics in visual processing computed on a single-board computer, multiple blurred images are formed in every frame and displayed on a monitor near the plant.
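
The convolution model described above can be illustrated with a minimal sketch. It is not the installation's actual code: the function names, the NumPy FFT approach, and the synthetic PSF (a grid of Gaussian spots standing in for a microscope-derived microlens pattern) are all assumptions made for illustration. It treats the scene as a grayscale frame and uses circular convolution, which wraps around at the image borders.

```python
import numpy as np

def normalize_psf(psf):
    """Clip negatives and scale the PSF so it sums to 1 (preserves total brightness)."""
    psf = np.clip(np.asarray(psf, dtype=np.float64), 0.0, None)
    total = psf.sum()
    if total == 0:
        raise ValueError("PSF must contain some positive energy")
    return psf / total

def microlens_psf(size=15, pitch=5, sigma=1.0):
    """Hypothetical stand-in PSF: a regular grid of Gaussian spots.

    In the artwork the PSF is derived from microscope images of the leaf
    surface; this synthetic pattern only mimics a microlens array.
    """
    yy, xx = np.mgrid[:size, :size]
    psf = np.zeros((size, size))
    for cy in range(pitch // 2, size, pitch):
        for cx in range(pitch // 2, size, pitch):
            psf += np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * sigma ** 2))
    return psf

def simulate_leaf_view(scene, psf):
    """Circularly convolve a grayscale scene with the PSF using FFTs.

    The PSF must be no larger than the scene in either dimension.
    """
    scene = np.asarray(scene, dtype=np.float64)
    psf = normalize_psf(psf)
    ph, pw = psf.shape
    # Zero-pad the PSF to the scene size and move its center to index (0, 0)
    padded = np.zeros_like(scene)
    padded[:ph, :pw] = psf
    padded = np.roll(padded, (-(ph // 2), -(pw // 2)), axis=(0, 1))
    spectrum = np.fft.rfft2(scene) * np.fft.rfft2(padded)
    return np.fft.irfft2(spectrum, s=scene.shape)

# A single bright point in the scene reproduces the PSF itself:
# several blurred copies of the point, one per simulated microlens.
scene = np.zeros((32, 32))
scene[16, 16] = 1.0
view = simulate_leaf_view(scene, microlens_psf())
```

Because each spot in the PSF produces its own shifted copy of the scene, a grid-like PSF yields the kind of multiple blurred images the text mentions. A real-time version would apply the same step to each camera frame.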

Electric Potential Measurements of Plants

– We measure the electric potential of each plant by attaching an electrode to it. The electric potential is transmitted to the Raspberry Pi. Using these signals, we modulate and distort the audio-visual outputs depending on the electric potential.
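
One way such a mapping from electric potential to distortion might look is sketched below. The source does not describe the actual mapping, so everything here is an assumption: the function names, the millivolt units, the 50 mV full-scale value, and the idea of tracking a slowly drifting resting potential so that only sudden changes (such as a touch) drive the distortion. Reading the electrode hardware is left out; the samples are made-up numbers.

```python
def update_baseline(baseline_mv, sample_mv, alpha=0.01):
    """Track the resting potential with an exponential moving average."""
    return baseline_mv + alpha * (sample_mv - baseline_mv)

def distortion_amount(sample_mv, baseline_mv, full_scale_mv=50.0):
    """Map the deviation from the resting potential to a level in [0, 1]."""
    deviation = abs(sample_mv - baseline_mv)
    return min(deviation / full_scale_mv, 1.0)

def modulate(value, amount, depth):
    """Scale an output parameter (e.g. blur strength or playback rate)."""
    return value * (1.0 + depth * amount)

# Illustrative samples in mV (invented): resting, then a touch, then recovery.
samples = [10.0, 10.5, 9.8, 42.0, 38.0, 12.0, 10.2]
baseline = samples[0]
for sample in samples:
    amount = distortion_amount(sample, baseline)
    blur_strength = modulate(1.0, amount, depth=2.0)  # hypothetical visual parameter
    playback_rate = modulate(1.0, amount, depth=0.3)  # hypothetical audio parameter
    baseline = update_baseline(baseline, sample)
```

Keeping the baseline update slow (small `alpha`) means a brief touch barely shifts the resting level, so the outputs return to normal once the plant's potential settles.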