“Twech: A Mobile Platform to Search and Share Visuo-tactile Experiences” by Hanamitsu, Nakamura, Saraiji, Minamizawa and Tachi

Conference:

Type(s):

Entry Number: 40

Title:

- Twech: A Mobile Platform to Search and Share Visuo-tactile Experiences

Presenter(s)/Author(s):

- Hanamitsu
- Nakamura
- Saraiji
- Minamizawa
- Tachi

Abstract:

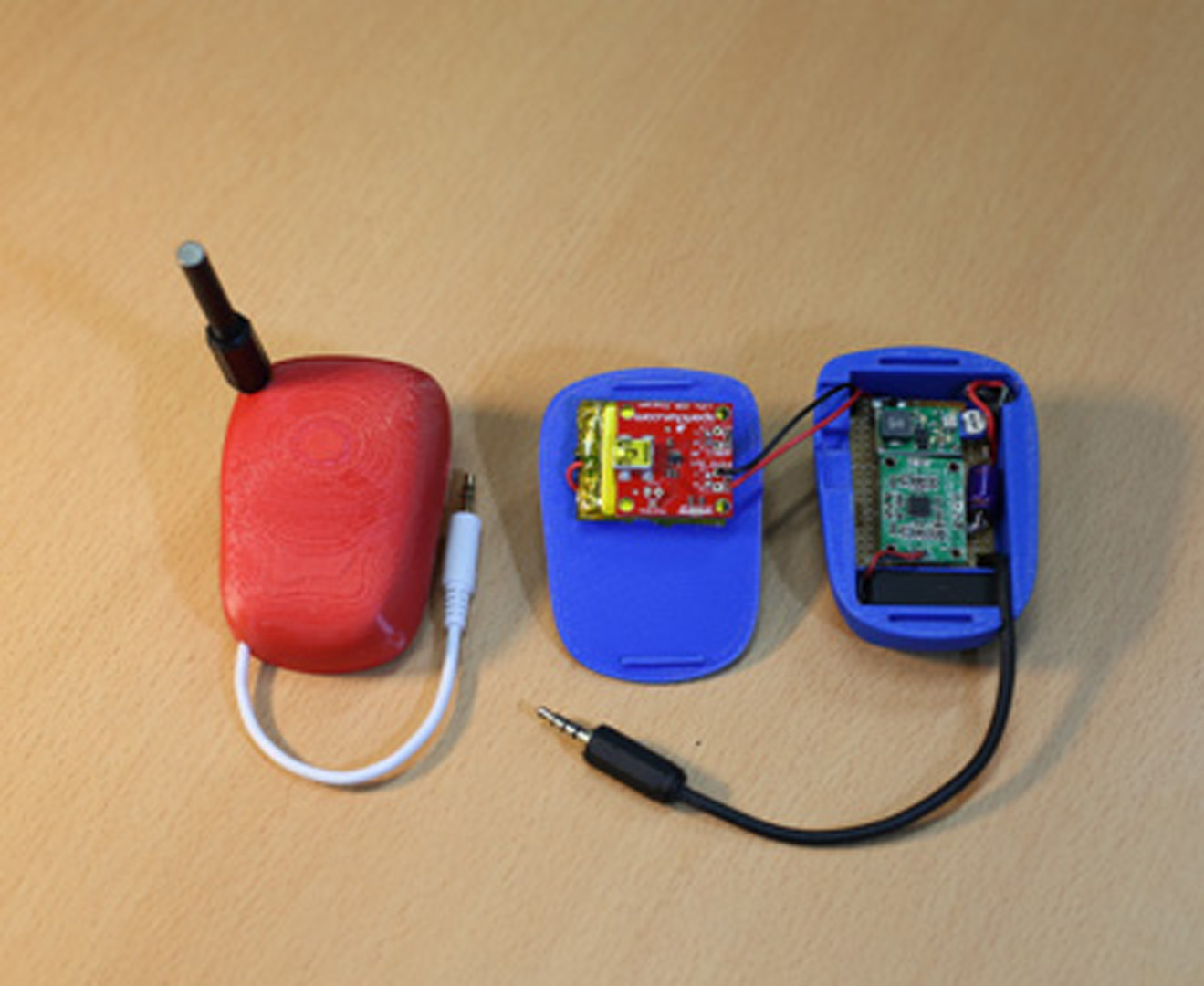

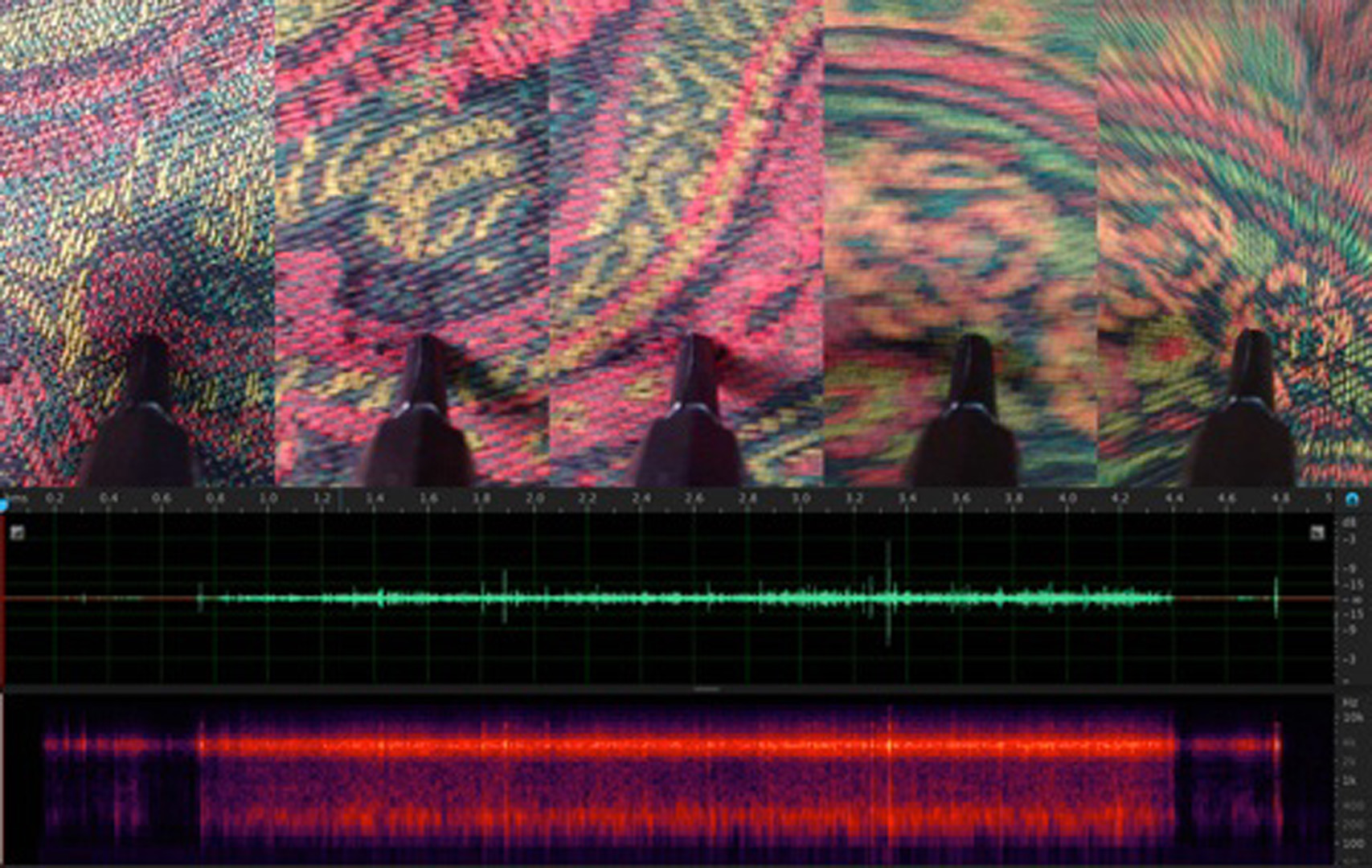

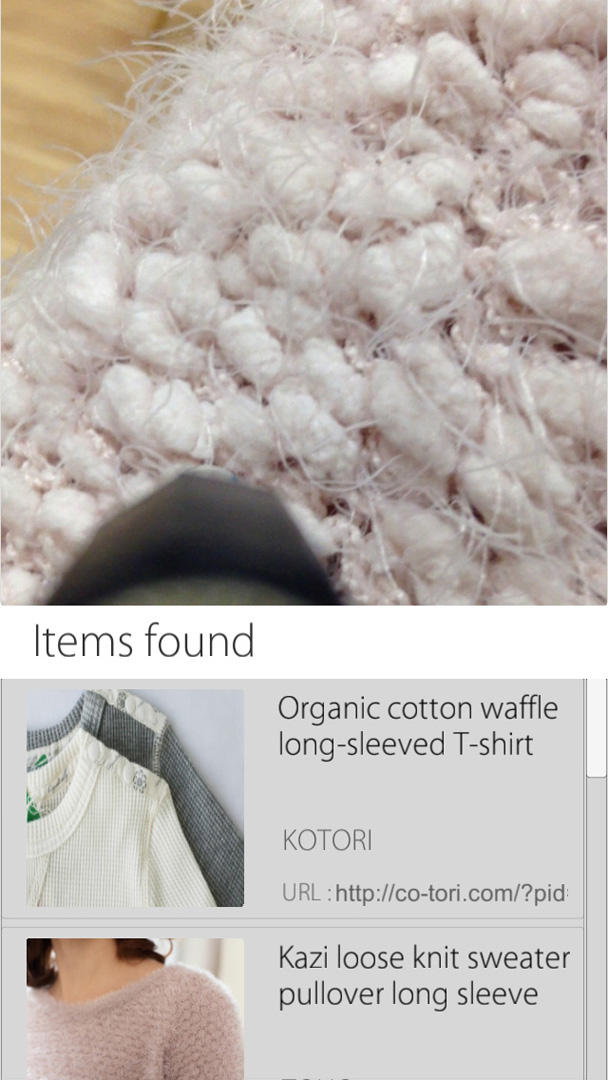

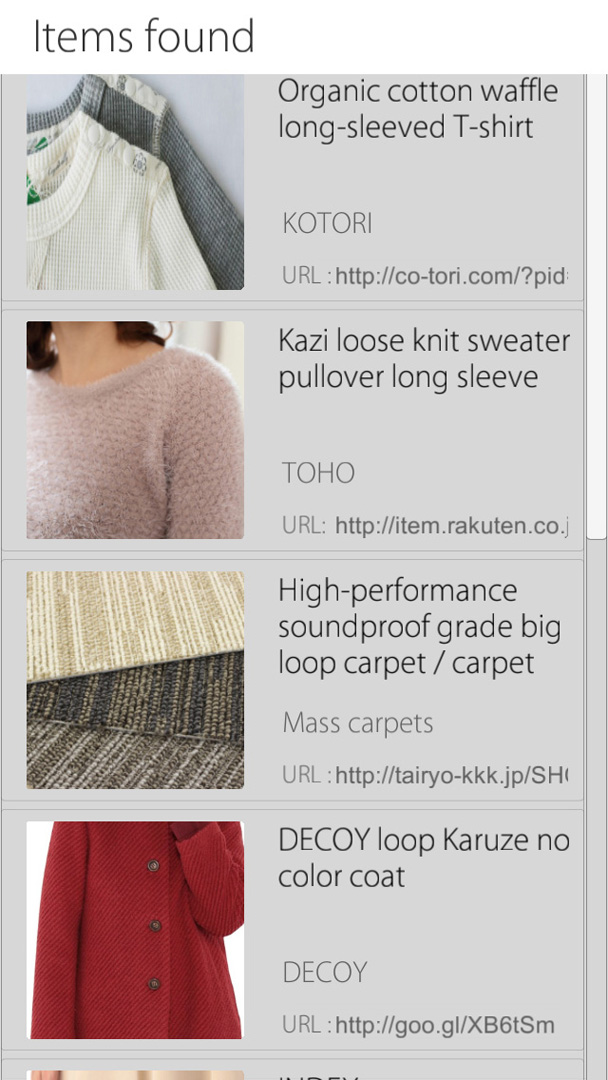

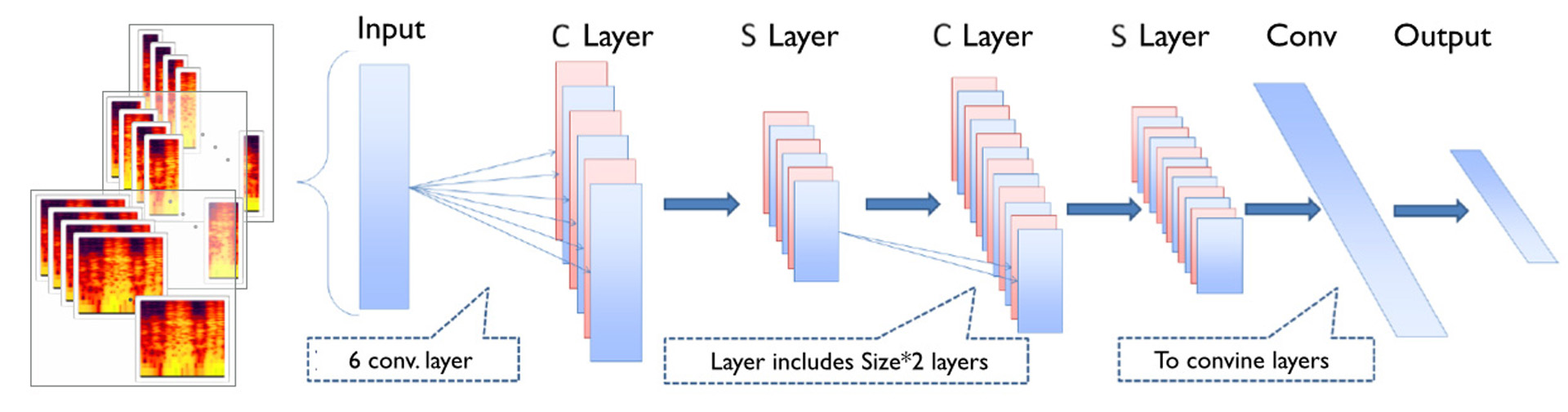

Twech is a mobile platform that enables users to share visuo-tactile experiences and to search for other experiences using tactile data. Users record and share visuo-tactile experiences with a recording and display attachment for smartphones, which lets them post an experience instantly, much like a tweet, and re-experience shared data with visuo-motor coupling. Further, Twech’s search engine finds other similar experiences for uploaded tactile data, such as scratching material surfaces or interacting with animals. The search engine is based on deep learning that was extended to recognize tactile materials. Twech thus provides a way to share and find haptic experiences, and users can re-experience uploaded visuo-tactile data from a cloud server.
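The abstract does not say how the search engine compares tactile recordings. As a minimal sketch of the general idea, assume each recording has already been reduced to a fixed-length feature vector (for example, by a learned model) and that similar experiences are ranked by cosine similarity. The function names, labels, and vector values below are hypothetical and are not taken from Twech.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def find_similar(query, library, top_k=2):
    """Return the labels of the top_k library entries most similar to query."""
    scored = [(cosine_similarity(query, vec), label)
              for label, vec in library.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [label for _, label in scored[:top_k]]


# Hypothetical feature vectors for previously shared experiences.
library = {
    "scratching denim": [0.9, 0.1, 0.0],
    "petting a cat": [0.1, 0.8, 0.3],
    "scratching carpet": [0.8, 0.2, 0.1],
}

# Hypothetical feature vector for a newly uploaded tactile recording.
results = find_similar([0.85, 0.15, 0.05], library)
print(results)
```

In this toy data the two scratching recordings rank above the animal interaction, because their vectors point in nearly the same direction as the query.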

Additional Images:

Acknowledgements:

This work is supported by JST-ACCEL Embodied Media project and JSPS KAKENHI Grant #26700018.