“Spectral style transfer for human motion between independent actions”

Title:

- Spectral style transfer for human motion between independent actions

Session/Category Title: PLANTS & HUMANS

Abstract:

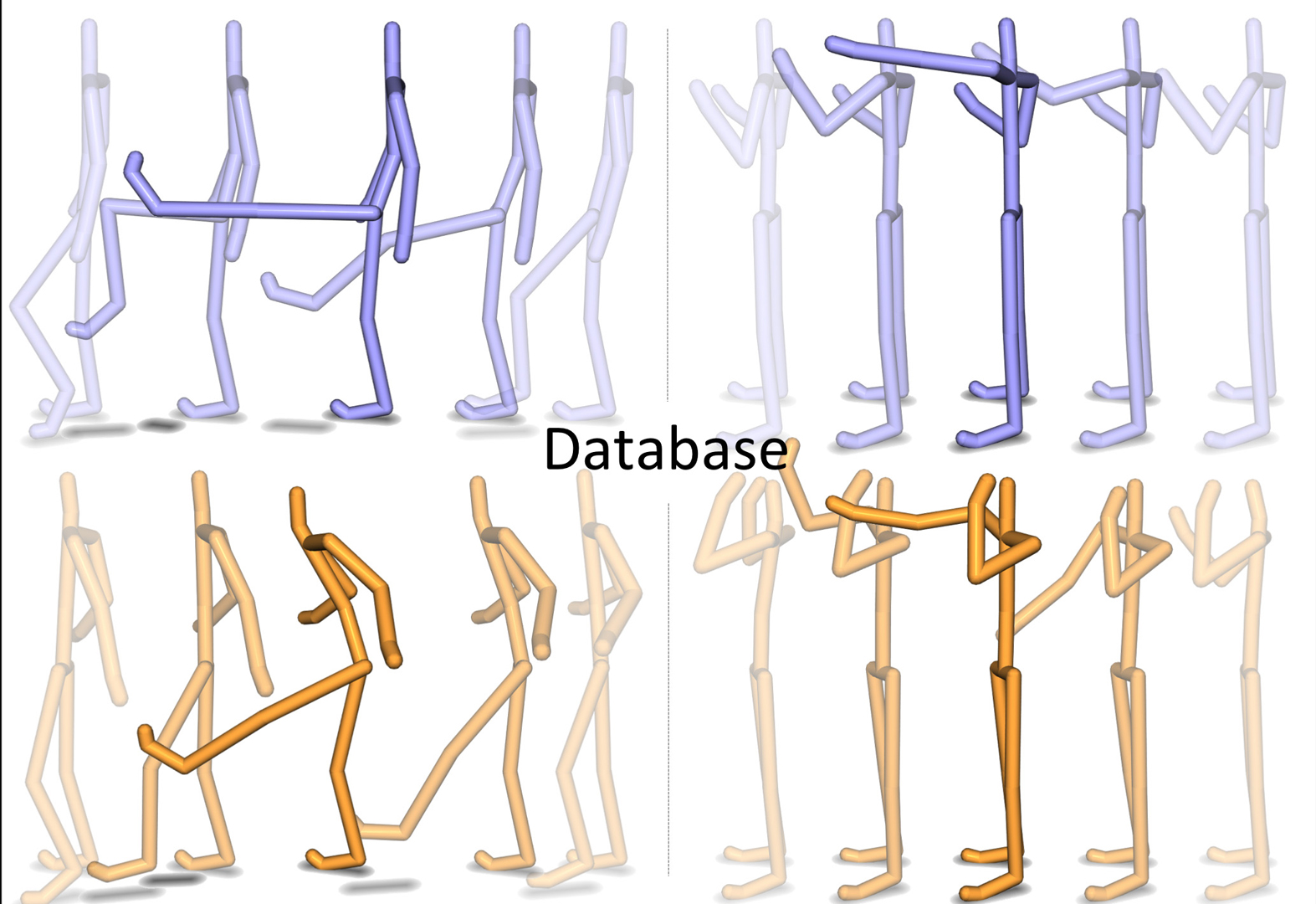

Human motion is complex and difficult to synthesize realistically. Automatic style transfer, which transforms the mood or identity of a character’s motion, is a key technology for increasing the value of already synthesized or captured motion data. Typically, state-of-the-art methods require every independent action observed in the input to be present in a given style database in order to perform realistic style transfer. We introduce a spectral style transfer method for human motion between independent actions, thereby greatly reducing the effort and cost required to create such databases. We leverage a spectral-domain representation of human motion to formulate an approach that is free of spatial correspondences. We extract spectral intensity representations of the reference and source styles for an arbitrary action, and transfer their difference to a novel motion, which may contain previously unseen actions. Building on this core method, we introduce a temporally sliding window filter that performs the same analysis locally in time, enabling the processing of heterogeneous motion. This immediately allows our approach to serve as a style database enhancement technique that fills in missing actions, improving the performance of previous style transfer methods. We evaluate our method through both quantitative experiments and controlled user studies against previous work, and observe significant improvement with our approach.
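The core idea in the abstract (take the difference between the spectral intensities of a reference style and a source style, and add it to a novel motion) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes that motion is stored as an array of shape (frames, channels), for example joint angles over time, that "spectral intensity" means the magnitude of a per-channel discrete Fourier transform, that the phase of the novel motion is kept unchanged, and that all clips passed to the core function have the same number of frames. The function names, the Hann window, and the `win` and `hop` parameters are hypothetical choices made for illustration.

```python
import numpy as np


def spectral_style_transfer(target, source_style, reference_style):
    """Shift the spectral magnitudes of `target` by (reference - source).

    All inputs are arrays of shape (frames, channels) with the same
    number of frames (an assumption of this sketch).
    """
    n_frames = target.shape[0]
    target_spec = np.fft.rfft(target, axis=0)
    source_mag = np.abs(np.fft.rfft(source_style, axis=0))
    reference_mag = np.abs(np.fft.rfft(reference_style, axis=0))

    # Add the style difference to the target magnitudes; clamp at zero
    # because a magnitude cannot be negative.
    new_mag = np.maximum(np.abs(target_spec) + (reference_mag - source_mag), 0.0)

    # Keep the target's phase so the timing of the motion is preserved.
    new_spec = new_mag * np.exp(1j * np.angle(target_spec))
    return np.fft.irfft(new_spec, n=n_frames, axis=0)


def sliding_window_transfer(target, source_style, reference_style, win=64, hop=32):
    """Apply the transfer locally in time and blend windows by overlap-add.

    The two style clips must contain exactly `win` frames (an assumption
    of this sketch). Frames not covered by any window keep their
    original values.
    """
    n_frames = target.shape[0]
    window = np.hanning(win)[:, None]
    blended = np.zeros(target.shape, dtype=float)
    weight = np.zeros((n_frames, 1))

    for start in range(0, n_frames - win + 1, hop):
        segment = target[start:start + win]
        styled = spectral_style_transfer(segment, source_style, reference_style)
        blended[start:start + win] += window * styled
        weight[start:start + win] += window

    covered = weight > 1e-8
    return np.where(covered, blended / np.maximum(weight, 1e-8), target)


if __name__ == "__main__":
    t = np.arange(64)
    novel = np.sin(2 * np.pi * t / 16)[:, None]        # novel action
    neutral = np.sin(2 * np.pi * t / 32)[:, None]      # source style
    styled = neutral + 0.5 * np.sin(2 * np.pi * t / 4)[:, None]  # reference style
    result = spectral_style_transfer(novel, neutral, styled)
    print(result.shape)
```

In this toy setup the reference style differs from the source style only by an extra high-frequency component, so the transfer injects energy at that frequency into the novel motion even though the novel motion has a different base frequency from either style clip. When the source and reference styles are identical, both functions return the input motion unchanged up to floating-point error.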
