“Spacetime Expression Cloning for Blendshapes” by Seol, Lewis, Seo, Choi, Anjyo, et al. …

Title:

- Spacetime Expression Cloning for Blendshapes

Abstract:

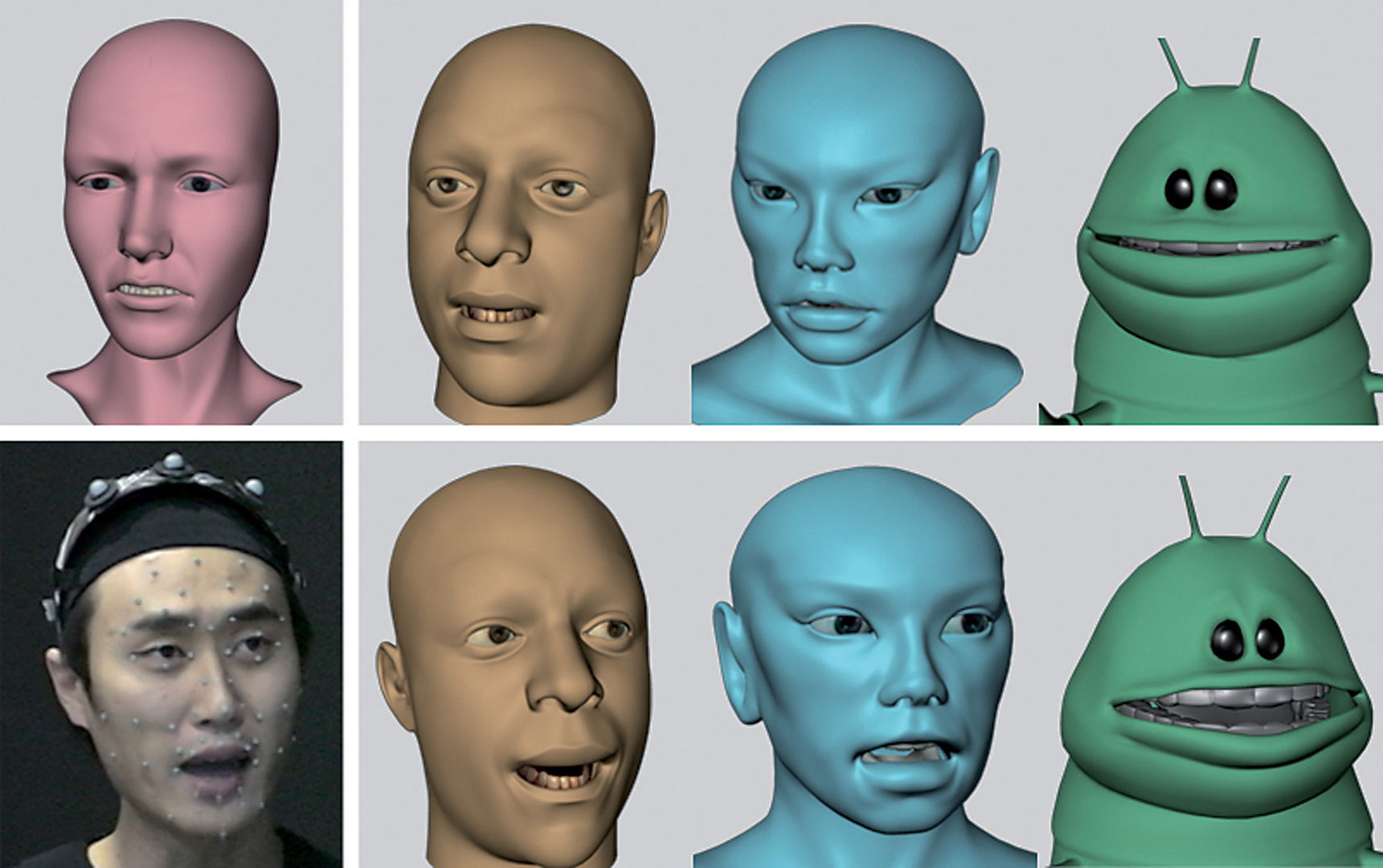

The goal of a practical facial animation retargeting system is to reproduce the character of a source animation on a target face while providing room for additional creative control by the animator. This article presents a novel spacetime facial animation retargeting method for blendshape face models. Our approach starts from the basic principle that the source and target movements should be similar. By interpreting movement as the derivative of position with respect to time, and adding suitable boundary conditions, we formulate the retargeting problem as a Poisson equation. Specified (e.g., neutral) expressions at the beginning and end of the animation, as well as any user-specified constraints in the middle of the animation, serve as boundary conditions. In addition, a model-specific prior is constructed to represent the plausible expression space of the target face during retargeting. A Bayesian formulation is then employed to produce a target animation that is consistent with the source movements while satisfying the prior constraints. Since the preservation of temporal derivatives is the primary goal of the optimization, the retargeted motion preserves the rhythm and character of the source movement and is free of temporal jitter. More importantly, our approach provides spacetime editing for the popular blendshape representation of facial models, exhibiting smooth and controlled propagation of user edits across surrounding frames.
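The derivative-matching idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it handles a single scalar curve (for instance one blendshape weight over time), the function name `retarget_curve` and its parameters are hypothetical, and the model-specific prior is replaced by an assumed isotropic Gaussian pull toward `prior_mean`. The sketch finds a curve whose frame-to-frame differences match those of the source, with selected frames pinned to given values as boundary conditions.

```python
import numpy as np

def retarget_curve(source, constraints, prior_mean=None, prior_weight=0.0):
    """Least-squares derivative matching for one curve (illustrative only).

    source       : (T,) source values over T frames
    constraints  : dict {frame_index: value}, hard constraints (boundary
                   frames plus any user edits); must not be empty
    prior_mean   : optional (T,) curve the result is softly pulled toward
                   (assumed stand-in for a learned prior)
    prior_weight : strength of that pull; 0 disables it
    """
    source = np.asarray(source, dtype=float)
    T = source.shape[0]
    if prior_mean is None:
        prior_mean = np.zeros(T)

    # Forward-difference operator D, shape (T-1, T): (D x)[t] = x[t+1] - x[t]
    D = np.zeros((T - 1, T))
    idx = np.arange(T - 1)
    D[idx, idx] = -1.0
    D[idx, idx + 1] = 1.0

    # Minimize ||D x - D s||^2 + w ||x - mu||^2.
    # Normal equations: (D^T D + w I) x = D^T D s + w mu,
    # where D^T D is a discrete 1D Laplacian (a Poisson-type system).
    A = D.T @ D + prior_weight * np.eye(T)
    b = D.T @ (D @ source) + prior_weight * np.asarray(prior_mean, dtype=float)

    fixed = sorted(constraints)
    free = [t for t in range(T) if t not in constraints]

    x = np.empty(T)
    x[fixed] = [constraints[t] for t in fixed]

    # Move the known (constrained) values to the right-hand side and
    # solve only for the unconstrained frames.
    rhs = b[free] - A[np.ix_(free, fixed)] @ x[fixed]
    x[free] = np.linalg.solve(A[np.ix_(free, free)], rhs)
    return x

# Usage: source curve starts and ends near 0; the target is pinned to 1.0
# at both ends, and then additionally edited at a middle frame.
source = np.sin(np.linspace(0.0, np.pi, 20))
shifted = retarget_curve(source, {0: 1.0, 19: 1.0})
edited = retarget_curve(source, {0: 0.0, 10: 0.5, 19: 0.0})
```

In this toy setting, pinning both ends to a shifted value reproduces the source shape at the new offset, and a mid-sequence constraint is satisfied exactly while the change spreads to neighboring frames through the difference term.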
