“RSMT: Real-time Stylized Motion Transition for Characters” by Tang, Wu, Wang, Hu, Gong, et al. …

Title:

- RSMT: Real-time Stylized Motion Transition for Characters

Session/Category Title:

- Character Animation

Abstract:

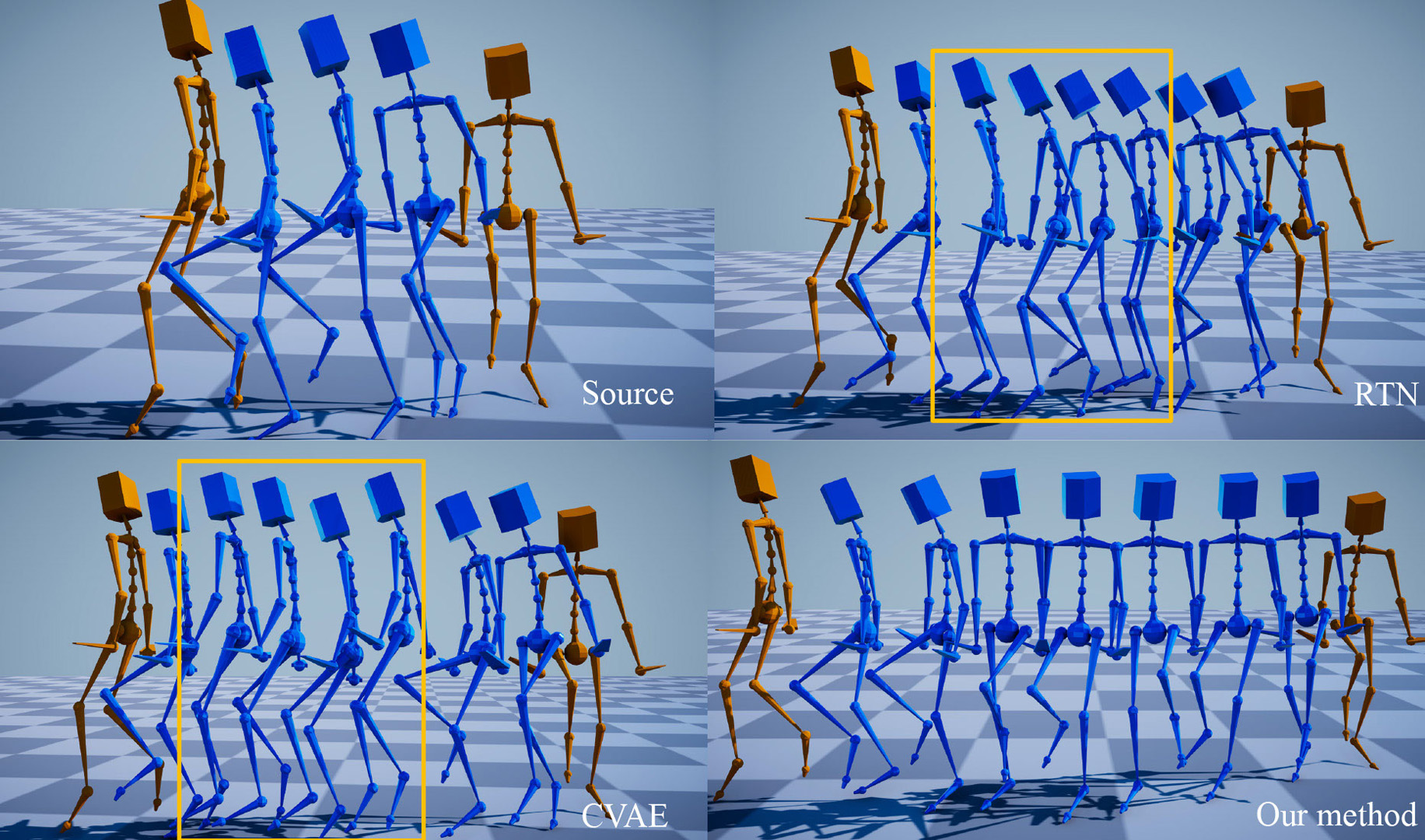

Styled online in-between motion generation has important application scenarios in computer animation and games. Its core challenge lies in the need to satisfy four critical requirements simultaneously: generation speed, motion quality, style diversity, and synthesis controllability. While the first two challenges demand a delicate balance between simple fast models and learning capacity for generation quality, the latter two are rarely investigated together in existing methods, which largely focus on either control without style or uncontrolled stylized motions. To this end, we propose a Real-time Stylized Motion Transition method (RSMT) to achieve all aforementioned goals. Our method consists of two critical, independent components: a general motion manifold model and a style motion sampler. The former acts as a high-quality motion source and the latter synthesizes styled motions on the fly under control signals. Since both components can be trained separately on different datasets, our method provides great flexibility, requires less data, and generalizes well when no/few samples are available for unseen styles. Through exhaustive evaluation, our method proves to be fast, high-quality, versatile, and controllable. The code and data are available at https://github.com/yuyujunjun/RSMT-Realtime-Stylized-Motion-Transition.
