“Rigid stabilization of facial expressions” by Beeler and Bradley

Title:

- Rigid stabilization of facial expressions

Session/Category Title: Faces

Abstract:

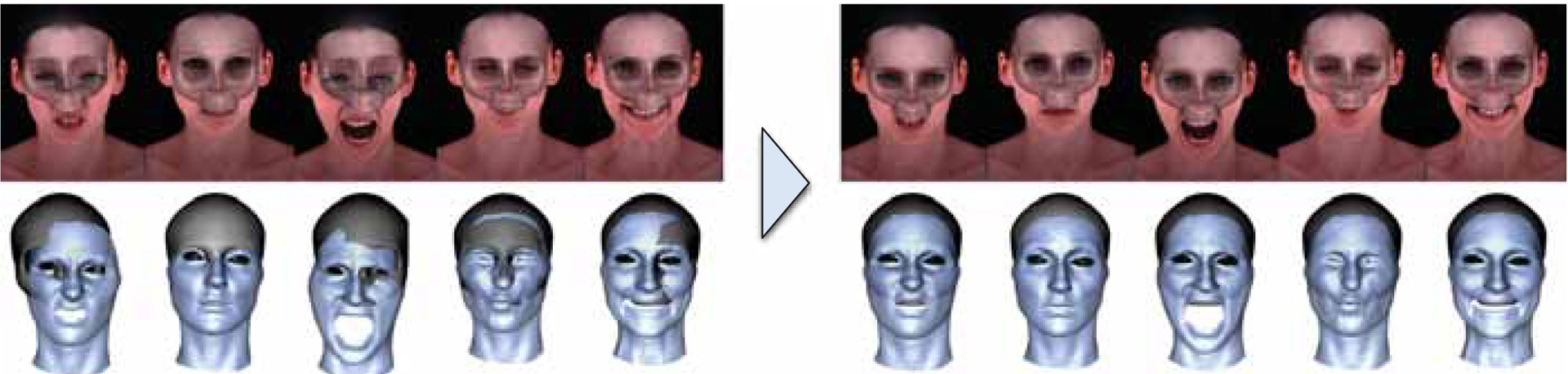

Facial scanning has become the industry-standard approach for creating digital doubles in movies and video games. This involves capturing an actor while they perform different expressions that span their range of facial motion. Unfortunately, the scans typically contain a superposition of the desired expression on top of unwanted rigid head movement. To extract the true expression deformations, it is essential to factor out the rigid head movement for each expression, a process referred to as rigid stabilization. To achieve production quality in industry, face stabilization is usually performed through a tedious and error-prone manual process. In this paper we present the first automatic face stabilization method that achieves professional-quality results on large sets of facial expressions. Since human faces can undergo a wide range of deformation, there is not a single point on the skin surface that moves rigidly with the underlying skull. Consequently, computing the rigid transformation from direct observation, a common approach in previous methods, is error prone and leads to inaccurate results. Instead, we propose to indirectly stabilize the expressions by explicitly aligning them to an estimate of the underlying skull using anatomically motivated constraints. We show that the proposed method not only outperforms existing techniques but is also on par with manual stabilization, yet requires less than a second of computation time.
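To make the "direct observation" baseline concrete, the following is a minimal sketch of a least-squares rigid fit between corresponding 3D points, using the standard SVD-based solution. It is not the skull-based method the abstract proposes; it illustrates the kind of fit the abstract describes as error prone when the chosen skin points also deform. The function names `fit_rigid_transform` and `apply_rigid`, and the assumption that the two scans share point correspondences, are illustrative choices and do not come from the paper.

```python
import numpy as np


def fit_rigid_transform(source, target):
    """Return rotation R and translation t minimizing sum ||R @ p_i + t - q_i||^2.

    source, target: (N, 3) arrays of corresponding points (assumed correspondence).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets.
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = -1.0 if np.linalg.det(Vt.T @ U.T) < 0 else 1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t


def apply_rigid(points, R, t):
    """Apply p -> R @ p + t to every row of an (N, 3) array."""
    return np.asarray(points, dtype=float) @ R.T + t
```

A possible use is to fit the transform from an expression scan to a neutral scan and then apply it to the whole expression mesh: `R, t = fit_rigid_transform(expr_pts, neutral_pts)` followed by `apply_rigid(expr_mesh, R, t)`, where `expr_pts`, `neutral_pts`, and `expr_mesh` are hypothetical arrays. If the selected points moved non-rigidly between the two scans, that motion leaks into `R` and `t`, which is the failure mode the abstract points to.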
