“MultiView Mannequins for Deep Depth Estimation in 360° Videos” by Takacs, Vincze and Richter

Conference:

Type(s):

Entry Number: 24

Title:

- MultiView Mannequins for Deep Depth Estimation in 360° Videos

Presenter(s)/Author(s):

Abstract:

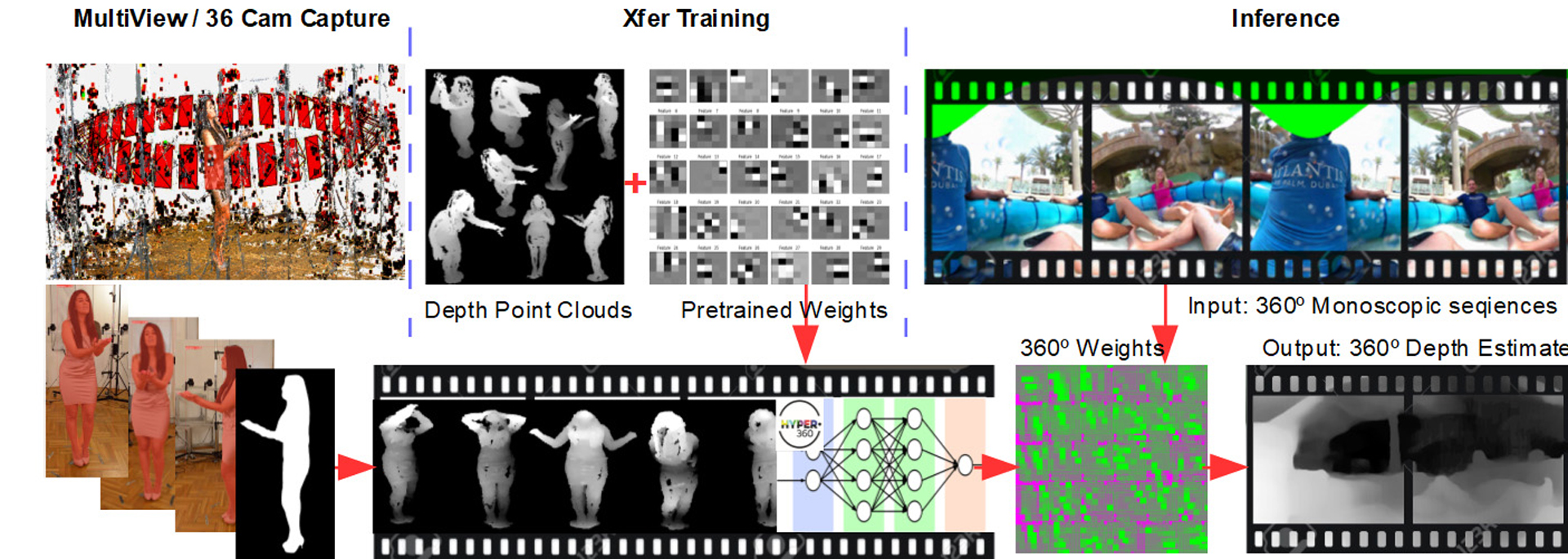

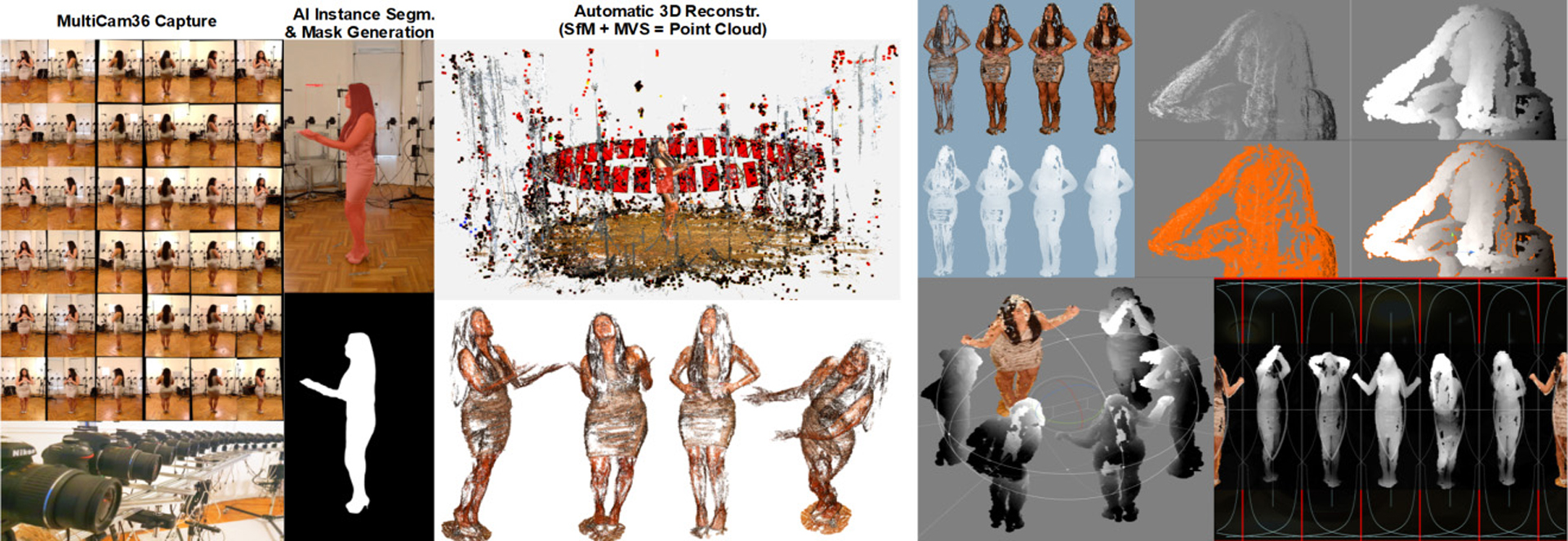

We estimate depth maps from real-life monocular 360° VR videos using a deep neural network architecture trained on 3D point-based renderings of people (called depth “mannequins”) captured with a multi-360° camera setup and processed with a combined pipeline of AI instance segmentation, Structure from Motion and Multi-View Stereo methods.

Keyword(s):

Additional Images:

Acknowledgements:

This work has received funding from the European Union’s Horizon 2020 research and innovation programme under grant no. 761934, Hyper360 (“Enriching 360 media with 3D storytelling and personalisation elements”). http://www.hyper360.eu/