“Learning meaningful controls for fluids” by Chu, Thuerey, Seidel, Theobalt and Zayer

Title:

- Learning meaningful controls for fluids

Abstract:

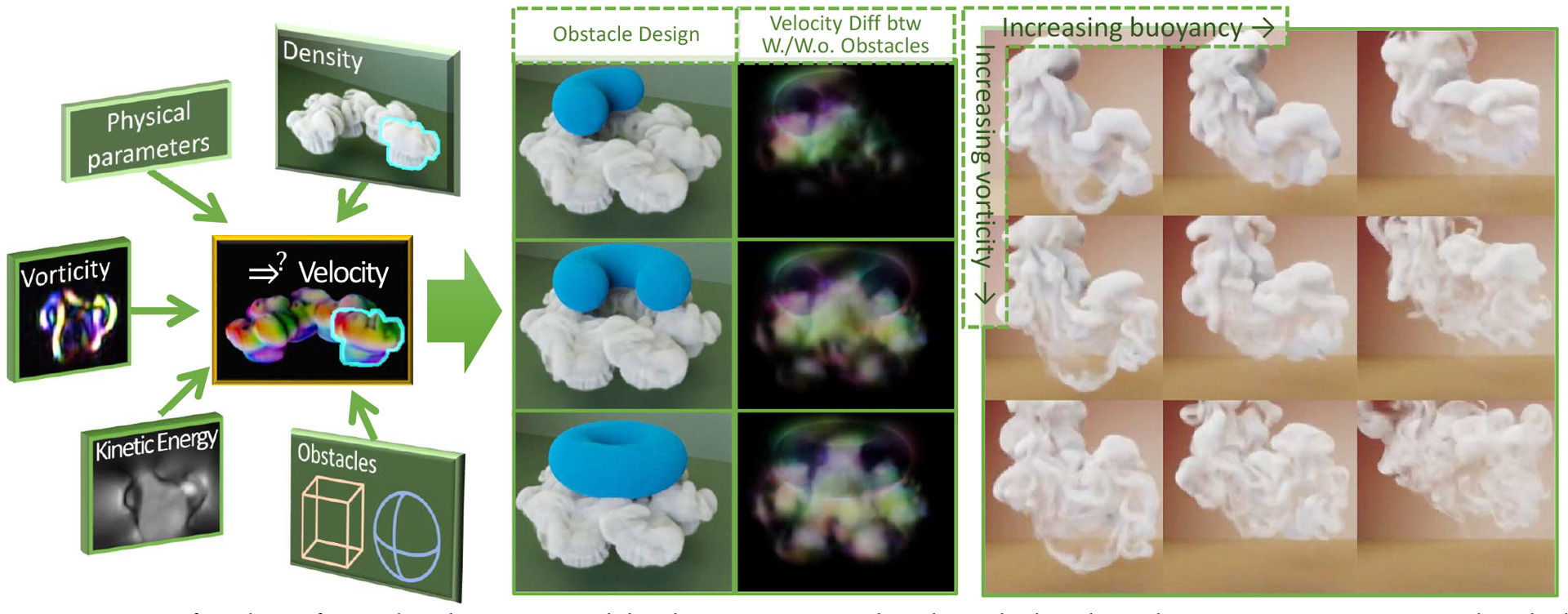

While modern fluid simulation methods achieve high-quality results, it remains a major challenge to interpret and control motion from visual quantities, such as the advected marker density. These visual quantities play an important role in user interaction: they are familiar and meaningful to humans, and they correlate strongly with the underlying motion. We propose a novel data-driven conditional adversarial model that solves the challenging and theoretically ill-posed problem of deriving plausible velocity fields from a single frame of a density field. Besides density modifications, our generative model is the first to enable control of the results through all of the following modalities: obstacles, physical parameters, kinetic energy, and vorticity. Our method is based on a new conditional generative adversarial network that explicitly embeds physical quantities into the learned latent space, and a new cyclic adversarial network design for control disentanglement. We demonstrate the high quality and versatile controllability of our results for density-based inference, realistic obstacle interaction, and sensitive responses to modifications of physical parameters, kinetic energy, and vorticity. Code, models, and results can be found at https://github.com/RachelCmy/den2vel.
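The abstract lists kinetic energy and vorticity among the control modalities. As a rough illustration of what these two quantities are, the sketch below computes both for a 2D velocity field stored on a uniform, collocated grid. It assumes unit density and grid spacing `h`, and uses central differences (one-sided at the boundary). The helper names `vorticity` and `kinetic_energy` are hypothetical, and this is not necessarily how den2vel computes or normalizes these quantities; the linked repository is the authoritative source.

```python
from typing import List

Grid = List[List[float]]  # indexed as grid[row j][column i]


def vorticity(u: Grid, v: Grid, h: float = 1.0) -> Grid:
    """Scalar 2D vorticity w = dv/dx - du/dy on a collocated grid.

    Assumption (illustrative only): u and v are x/y velocity samples at cell
    centers with spacing h. Interior cells use central differences; boundary
    cells fall back to one-sided differences.
    """
    ny, nx = len(u), len(u[0])
    if nx < 2 or ny < 2:
        raise ValueError("grid must be at least 2x2 to take differences")
    w = [[0.0] * nx for _ in range(ny)]
    for j in range(ny):
        j0, j1 = max(j - 1, 0), min(j + 1, ny - 1)
        for i in range(nx):
            i0, i1 = max(i - 1, 0), min(i + 1, nx - 1)
            dvdx = (v[j][i1] - v[j][i0]) / ((i1 - i0) * h)
            dudy = (u[j1][i] - u[j0][i]) / ((j1 - j0) * h)
            w[j][i] = dvdx - dudy
    return w


def kinetic_energy(u: Grid, v: Grid) -> Grid:
    """Per-cell kinetic energy 0.5 * |velocity|^2, assuming unit density."""
    return [[0.5 * (a * a + b * b) for a, b in zip(ru, rv)]
            for ru, rv in zip(u, v)]


if __name__ == "__main__":
    # Solid-body rotation around the center of a 5x5 grid: u = -y, v = x.
    n, c = 5, 2.0
    u = [[-(j - c) for i in range(n)] for j in range(n)]
    v = [[(i - c) for i in range(n)] for j in range(n)]
    print(vorticity(u, v)[2][2])       # prints 2.0
    print(kinetic_energy(u, v)[0][0])  # prints 4.0
```

For solid-body rotation the vorticity equals twice the angular velocity (here 2.0 in every cell), which makes it a quick sanity check for the finite differences.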
