“Kernel-Based Frame Interpolation for Spatio-Temporally Adaptive Rendering” by Martins Briedis, Djelouah, Ortiz, Meyer, Gross, et al. …

Conference:

Type(s):

Title:

- Kernel-Based Frame Interpolation for Spatio-Temporally Adaptive Rendering

Session/Category Title: Real-time Rendering: Gotta Go Fast!

Presenter(s)/Author(s):

- Karlis Martins Briedis

- Abdelaziz Djelouah

- Raphael Ortiz

- Mark Meyer

- Markus Gross

- Christopher Schroers

Abstract:

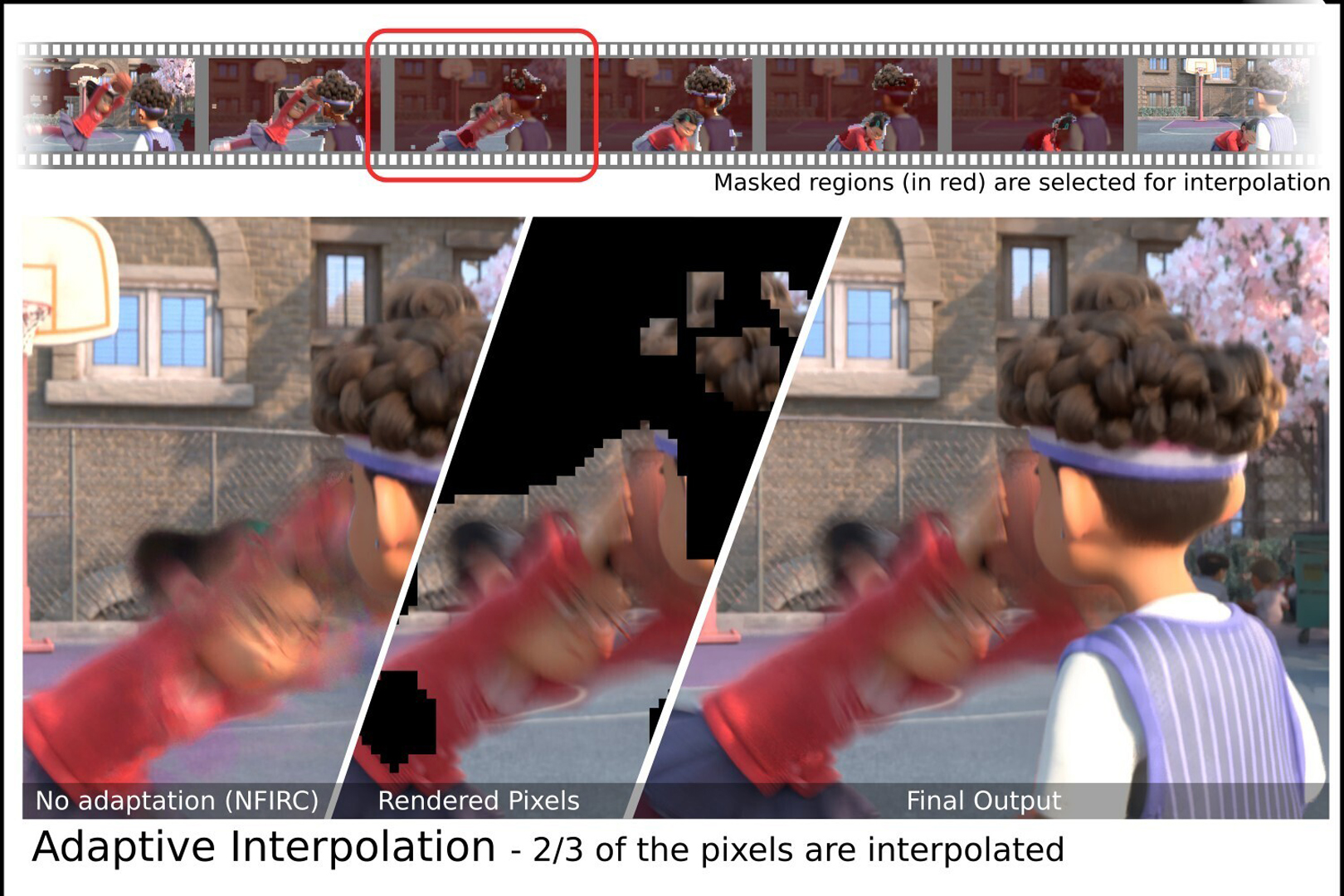

Recently, there has been exciting progress in frame interpolation for rendered content. In this offline rendering setting, additional inputs, such as albedo and depth, can be extracted from a scene at a very low cost and, when integrated in a suitable fashion, can significantly improve the quality of the interpolated frames. Although existing approaches have shown good results, most high-quality interpolation methods use a synthesis network for direct color prediction. In complex scenarios, this can result in unpredictable behavior and lead to color artifacts. To mitigate this and to increase robustness, we propose to estimate the interpolated frame by predicting spatially varying kernels that operate on image splats. Kernel prediction ensures a linear mapping from the input images to the output and enables new opportunities, such as consistent and efficient interpolation of alpha values and of any other channels or render passes that may be available. Additionally, we present an adaptive strategy that predicts, solely from the auxiliary features of a shot, which full or partial keyframes should be rendered with color samples. This content-based spatio-temporal adaptivity allows rendering significantly fewer color pixels than a fixed-step scheme while maintaining a given quality. Overall, these contributions lead to a more robust method and significant further reductions in rendering cost.
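
The linearity property mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `apply_kernels`, the toy 2x2 RGBA image, and the box kernel are all assumptions made for illustration, and a single image stands in for the splatted inputs. It only shows that, once per-pixel kernels are fixed, the same weights can be reused on any channel (here alpha) and the result matches filtering that channel on its own.

```python
def apply_kernels(image, kernels, radius=1):
    """Filter `image` (H x W x C nested lists) with one kernel per output pixel.

    `kernels[y][x]` is a (2r+1) x (2r+1) list of non-negative weights
    (hypothetical stand-ins for network-predicted kernels). Taps that fall
    outside the image are skipped and the remaining weights are renormalized.
    """
    height, width = len(image), len(image[0])
    channels = len(image[0][0])
    out = [[[0.0] * channels for _ in range(width)] for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            acc = [0.0] * channels
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    sy, sx = y + dy, x + dx
                    if 0 <= sy < height and 0 <= sx < width:
                        w = kernels[y][x][dy + radius][dx + radius]
                        total += w
                        for c in range(channels):
                            acc[c] += w * image[sy][sx][c]
            if total > 0.0:
                out[y][x] = [a / total for a in acc]
    return out


# Toy 2x2 RGBA image and a 3x3 box kernel at every pixel (both made up).
rgba = [[[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 0.0, 0.5]],
        [[0.0, 0.0, 1.0, 0.0], [1.0, 1.0, 1.0, 1.0]]]
box = [[1.0] * 3 for _ in range(3)]
kernels = [[box for _ in range(2)] for _ in range(2)]

# Filter all four channels at once, then the alpha channel on its own.
filtered = apply_kernels(rgba, kernels)
alpha_only = apply_kernels([[[p[3]] for p in row] for row in rgba], kernels)
```

Because every output value is a normalized weighted average of input values, the filtered alpha agrees with `alpha_only`, and no output channel can leave the range spanned by its inputs. A network that predicts colors directly offers no such guarantee.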

References:

1. Steve Bako, Thijs Vogels, Brian McWilliams, Mark Meyer, Jan Novák, Alex Harvill, Pradeep Sen, Tony DeRose, and Fabrice Rousselle. 2017. Kernel-Predicting Convolutional Networks for Denoising Monte Carlo Renderings. ACM Transactions on Graphics (Proceedings of SIGGRAPH 2017) 36, 4, Article 97 (2017), 97:1–97:14 pages. https://doi.org/10.1145/3072959.3073708

2. Wenbo Bao, Wei-Sheng Lai, Chao Ma, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2019. Depth-Aware Video Frame Interpolation. In IEEE Conference on Computer Vision and Pattern Recognition.

3. Wenbo Bao, Wei-Sheng Lai, Xiaoyun Zhang, Zhiyong Gao, and Ming-Hsuan Yang. 2021. MEMC-Net: Motion Estimation and Motion Compensation Driven Neural Network for Video Interpolation and Enhancement. IEEE Transactions on Pattern Analysis and Machine Intelligence 43, 3 (2021), 933–948. https://doi.org/10.1109/TPAMI.2019.2941941

4. Karlis Martins Briedis, Abdelaziz Djelouah, Mark Meyer, Ian McGonigal, Markus Gross, and Christopher Schroers. 2021. Neural Frame Interpolation for Rendered Content. ACM Trans. Graph. 40, 6, Article 239 (dec 2021), 13 pages. https://doi.org/10.1145/3478513.3480553

5. Jiawen Chen, Andrew Adams, Neal Wadhwa, and Samuel W. Hasinoff. 2016. Bilateral Guided Upsampling. ACM Trans. Graph. 35, 6, Article 203 (dec 2016), 8 pages. https://doi.org/10.1145/2980179.2982423

6. Zhixiang Chi, Rasoul Mohammadi Nasiri, Zheng Liu, Juwei Lu, Jin Tang, and Konstantinos N. Plataniotis. 2020. All at Once: Temporally Adaptive Multi-frame Interpolation with Advanced Motion Modeling. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 107–123.

7. Zhixiang Chi, Rasoul Mohammadi Nasiri, Zheng Liu, Yuanhao Yu, Juwei Lu, Jin Tang, and Konstantinos N Plataniotis. 2022. Error-Aware Spatial Ensembles for Video Frame Interpolation. arXiv preprint arXiv:2207.12305 (2022).

8. Myungsub Choi, Heewon Kim, Bohyung Han, Ning Xu, and Kyoung Mu Lee. 2020. Channel Attention Is All You Need for Video Frame Interpolation. In AAAI.

9. Djork-Arné Clevert, Thomas Unterthiner, and Sepp Hochreiter. 2016. Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs). In 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings, Yoshua Bengio and Yann LeCun (Eds.). http://arxiv.org/abs/1511.07289

10. Duolikun Danier, Fan Zhang, and David Bull. 2022. ST-MFNet: A Spatio-Temporal Multi-Flow Network for Frame Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 3521–3531.

11. Tianyu Ding, Luming Liang, Zhihui Zhu, and Ilya Zharkov. 2021. CDFI: Compression-Driven Network Design for Frame Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 8001–8011.

12. Elmar Eisemann and Frédo Durand. 2004. Flash Photography Enhancement via Intrinsic Relighting. ACM Trans. Graph. 23, 3 (aug 2004), 673–678. https://doi.org/10.1145/1015706.1015778

13. D. Fourure, R. Emonet, E. Fromont, D. Muselet, A. Tremeau, and C. Wolf. 2017. Residual conv-deconv grid network for semantic segmentation. arXiv preprint arXiv:1707.07958 (2017).

14. Jie Guo, Xihao Fu, Liqiang Lin, Hengjun Ma, Yanwen Guo, Shiqiu Liu, and Ling-Qi Yan. 2021. ExtraNet: Real-Time Extrapolated Rendering for Low-Latency Temporal Supersampling. ACM Trans. Graph. 40, 6, Article 278 (dec 2021), 16 pages. https://doi.org/10.1145/3478513.3480531

15. Kaiming He, Jian Sun, and Xiaoou Tang. 2013. Guided Image Filtering. IEEE Transactions on Pattern Analysis and Machine Intelligence 35, 6 (2013), 1397–1409. https://doi.org/10.1109/TPAMI.2012.213

16. Ping Hu, Simon Niklaus, Stan Sclaroff, and Kate Saenko. 2022. Many-to-many Splatting for Efficient Video Frame Interpolation. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022), 3543–3552.

17. Zhewei Huang, Tianyuan Zhang, Wen Heng, Boxin Shi, and Shuchang Zhou. 2022. Real-Time Intermediate Flow Estimation for Video Frame Interpolation. In Proceedings of the European Conference on Computer Vision (ECCV).

18. Junhwa Hur and Stefan Roth. 2019. Iterative Residual Refinement for Joint Optical Flow and Occlusion Estimation. In CVPR.

19. Mustafa Işık, Krishna Mullia, Matthew Fisher, Jonathan Eisenmann, and Michaël Gharbi. 2021. Interactive Monte Carlo Denoising Using Affinity of Neural Features. ACM Trans. Graph. 40, 4, Article 37 (jul 2021), 13 pages. https://doi.org/10.1145/3450626.3459793

20. Huaizu Jiang, Deqing Sun, Varun Jampani, Ming-Hsuan Yang, Erik Learned-Miller, and Jan Kautz. 2018. Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 9000–9008.

21. Nima Khademi Kalantari, Steve Bako, and Pradeep Sen. 2015. A Machine Learning Approach for Filtering Monte Carlo Noise. ACM Trans. Graph. 34, 4, Article 122 (jul 2015), 12 pages. https://doi.org/10.1145/2766977

22. Tarun Kalluri, Deepak Pathak, Manmohan Chandraker, and Du Tran. 2021. FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation. (2021).

23. Lingtong Kong, Boyuan Jiang, Donghao Luo, Wenqing Chu, Xiaoming Huang, Ying Tai, Chengjie Wang, and Jie Yang. 2022. IFRNet: Intermediate Feature Refine Network for Efficient Frame Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 1969–1978.

24. Alexandr Kuznetsov, Nima Khademi Kalantari, and Ravi Ramamoorthi. 2018. Deep adaptive sampling for low sample count rendering. In Computer Graphics Forum, Vol. 37. Wiley Online Library, 35–44.

25. Hyeongmin Lee, Taeoh Kim, Tae young Chung, Daehyun Pak, Yuseok Ban, and Sangyoun Lee. 2020. AdaCoF: Adaptive Collaboration of Flows for Video Frame Interpolation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

26. Sungho Lee, Narae Choi, and Woong Il Choi. 2022. Enhanced Correlation Matching based Video Frame Interpolation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. 2839–2847.

27. Tzu-Mao Li, Yu-Ting Wu, and Yung-Yu Chuang. 2012. SURE-Based Optimization for Adaptive Sampling and Reconstruction. ACM Trans. Graph. 31, 6, Article 194 (nov 2012), 9 pages. https://doi.org/10.1145/2366145.2366213

28. Yihao Liu, Liangbin Xie, Li Siyao, Wenxiu Sun, Yu Qiao, and Chao Dong. 2020. Enhanced quadratic video interpolation. In European Conference on Computer Vision Workshops.

29. Gucan Long, Laurent Kneip, Jose M Alvarez, Hongdong Li, Xiaohu Zhang, and Qifeng Yu. 2016. Learning image matching by simply watching video. In European Conference on Computer Vision. Springer, 434–450.

30. Liying Lu, Ruizheng Wu, Huaijia Lin, Jiangbo Lu, and Jiaya Jia. 2022. Video Frame Interpolation With Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 3532–3542.

31. Simone Meyer, Abdelaziz Djelouah, Brian McWilliams, Alexander Sorkine-Hornung, Markus Gross, and Christopher Schroers. 2018. PhaseNet for Video Frame Interpolation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

32. Simone Meyer, Oliver Wang, Henning Zimmer, Max Grosse, and Alexander Sorkine-Hornung. 2015. Phase-Based Frame Interpolation for Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 1410–1418. https://doi.org/10.1109/CVPR.2015.7298747

33. Simon Niklaus, Ping Hu, and Jiawen Chen. 2023. Splatting-Based Synthesis for Video Frame Interpolation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). 713–723.

34. Simon Niklaus and Feng Liu. 2018. Context-Aware Synthesis for Video Frame Interpolation. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

35. Simon Niklaus and Feng Liu. 2020. Softmax Splatting for Video Frame Interpolation. In IEEE Conference on Computer Vision and Pattern Recognition.

36. Simon Niklaus, Long Mai, and Feng Liu. 2017a. Video Frame Interpolation via Adaptive Convolution. In IEEE Conference on Computer Vision and Pattern Recognition.

37. Simon Niklaus, Long Mai, and Feng Liu. 2017b. Video Frame Interpolation via Adaptive Separable Convolution. In IEEE International Conference on Computer Vision.

38. Simon Niklaus, Long Mai, and Oliver Wang. 2021. Revisiting Adaptive Convolutions for Video Frame Interpolation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). 1099–1109.

39. Moritz Nottebaum, Stefan Roth, and Simone Schaub-Meyer. 2022. Efficient Feature Extraction for High-resolution Video Frame Interpolation. In British Machine Vision Conference BMVC.

40. Junheum Park, Keunsoo Ko, Chul Lee, and Chang-Su Kim. 2020. BMBC: Bilateral Motion Estimation with Bilateral Cost Volume for Video Interpolation. In Computer Vision – ECCV 2020, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.). Springer International Publishing, Cham, 109–125.

41. Junheum Park, Chul Lee, and Chang-Su Kim. 2021. Asymmetric Bilateral Motion Estimation for Video Frame Interpolation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). 14539–14548.

42. Georg Petschnigg, Richard Szeliski, Maneesh Agrawala, Michael Cohen, Hugues Hoppe, and Kentaro Toyama. 2004. Digital Photography with Flash and No-Flash Image Pairs. ACM Trans. Graph. 23, 3 (aug 2004), 664–672. https://doi.org/10.1145/1015706.1015777

43. Fitsum Reda, Janne Kontkanen, Eric Tabellion, Deqing Sun, Caroline Pantofaru, and Brian Curless. 2022. FILM: Frame Interpolation for Large Motion. In European Conference on Computer Vision (ECCV).

44. Wentao Shangguan, Yu Sun, Weijie Gan, and Ulugbek S. Kamilov. 2022. Learning Cross-Video Neural Representations for High-Quality Frame Interpolation. In Proc. European Conference on Computer Vision (ECCV). Tel Aviv, Israel, 511–528.

45. Zhihao Shi, Xiangyu Xu, Xiaohong Liu, Jun Chen, and Ming-Hsuan Yang. 2022. Video Frame Interpolation Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 17482–17491.

46. Hyeonjun Sim, Jihyong Oh, and Munchurl Kim. 2021. XVFI: eXtreme Video Frame Interpolation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV).

47. Karen Simonyan and Andrew Zisserman. 2015. Very Deep Convolutional Networks for Large-Scale Image Recognition. In International Conference on Learning Representations.

48. Deqing Sun, Xiaodong Yang, Ming-Yu Liu, and Jan Kautz. 2018. PWC-Net: CNNs for Optical Flow Using Pyramid, Warping, and Cost Volume. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 8934–8943.

49. Zachary Teed and Jia Deng. 2020. RAFT: Recurrent All-Pairs Field Transforms for Optical Flow. In Computer Vision – ECCV 2020 – 16th European Conference (Lecture Notes in Computer Science, Vol. 12347). Springer, 402–419. https://doi.org/10.1007/978-3-030-58536-5_24

50. C. Tomasi and R. Manduchi. 1998. Bilateral filtering for gray and color images. In Sixth International Conference on Computer Vision (IEEE Cat. No.98CH36271). 839–846. https://doi.org/10.1109/ICCV.1998.710815

51. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is All you Need. In Advances in Neural Information Processing Systems, I. Guyon, U. Von Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.). Vol. 30. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

52. Thijs Vogels, Fabrice Rousselle, Brian McWilliams, Gerhard Röthlin, Alex Harvill, David Adler, Mark Meyer, and Jan Novák. 2018. Denoising with Kernel Prediction and Asymmetric Loss Functions. ACM Transactions on Graphics (Proceedings of SIGGRAPH 2018) 37, 4, Article 124 (2018), 124:1–124:15 pages. https://doi.org/10.1145/3197517.3201388

53. Lei Xiao, Salah Nouri, Matt Chapman, Alexander Fix, Douglas Lanman, and Anton Kaplanyan. 2020. Neural Supersampling for Real-Time Rendering. ACM Trans. Graph. 39, 4, Article 142 (July 2020), 12 pages. https://doi.org/10.1145/3386569.3392376

54. Xiangyu Xu, Li Siyao, Wenxiu Sun, Qian Yin, and Ming-Hsuan Yang. 2019. Quadratic Video Interpolation. In Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett (Eds.). Vol. 32. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2019/file/d045c59a90d7587d8d671b5f5aec4e7c-Paper.pdf

55. Tianfan Xue, Baian Chen, Jiajun Wu, Donglai Wei, and William T Freeman. 2019. Video enhancement with task-oriented flow. International Journal of Computer Vision 127, 8 (2019), 1106–1125.

56. Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In CVPR.

57. Henning Zimmer, Fabrice Rousselle, Wenzel Jakob, Oliver Wang, David Adler, Wojciech Jarosz, Olga Sorkine-Hornung, and Alexander Sorkine-Hornung. 2015. Path-space Motion Estimation and Decomposition for Robust Animation Filtering. Computer Graphics Forum (Proceedings of EGSR) 34, 4 (June 2015). https://doi.org/10/f7mb34