“GANimator: neural motion synthesis from a single sequence” by Li, Aberman, Zhang, Hanocka and Sorkine-Hornung

Conference:

Type(s):

Title:

- GANimator: neural motion synthesis from a single sequence

Presenter(s)/Author(s):

Abstract:

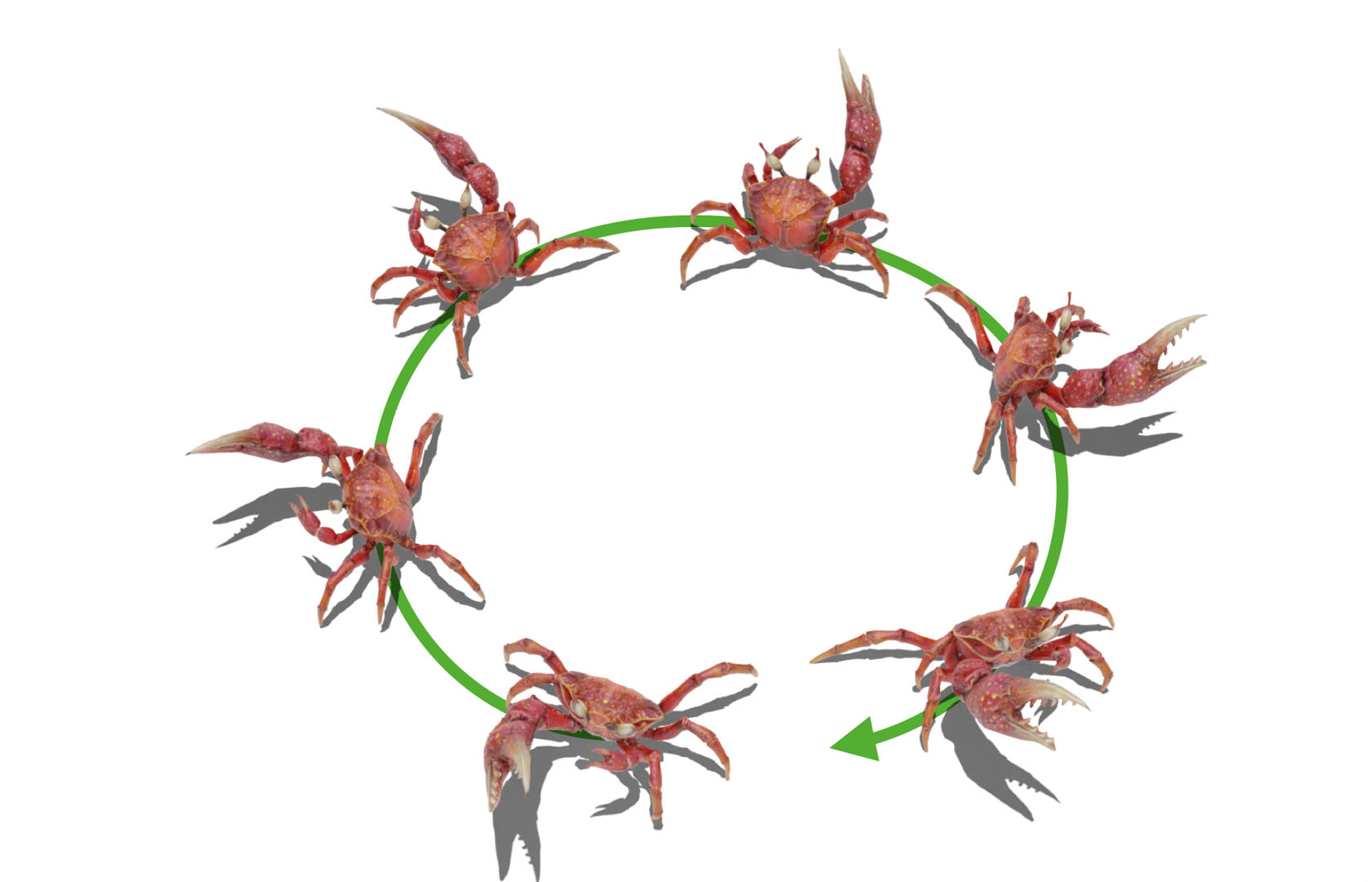

We present GANimator, a generative model that learns to synthesize novel motions from a single, short motion sequence. GANimator generates motions that resemble the core elements of the original motion, while simultaneously synthesizing novel and diverse movements. Existing data-driven techniques for motion synthesis require a large motion dataset that contains the desired and specific skeletal structure. By contrast, GANimator only requires training on a single motion sequence, enabling novel motion synthesis for a variety of skeletal structures, e.g., bipeds, quadrupeds, hexapods, and more. Our framework contains a series of generative and adversarial neural networks, each responsible for generating motions at a specific frame rate. The framework progressively learns to synthesize motion from random noise, enabling hierarchical control over the generated motion content across varying levels of detail. We show a number of applications, including crowd simulation, key-frame editing, style transfer, and interactive control, which all learn from a single input sequence. Code and data for this paper are at https://peizhuoli.github.io/ganimator.
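The coarse-to-fine idea in the abstract (one generator per frame rate, synthesis starting from random noise) can be illustrated with a minimal sketch. This is not the authors' implementation: `ToyGenerator` is a hypothetical, untrained stand-in for a learned per-level generator, the frame counts are arbitrary, and the linear upsampling and residual refinement are assumptions made for illustration. Adversarial training is omitted entirely.

```python
import numpy as np


def upsample_time(motion, new_len):
    """Linearly resample a (frames, channels) array to new_len frames."""
    src = np.linspace(0.0, 1.0, motion.shape[0])
    dst = np.linspace(0.0, 1.0, new_len)
    columns = [np.interp(dst, src, motion[:, c]) for c in range(motion.shape[1])]
    return np.stack(columns, axis=1)


class ToyGenerator:
    """Hypothetical placeholder for one trained generator (random weights)."""

    def __init__(self, channels, rng, kernel=5):
        self.kernel = kernel
        self.weights = rng.normal(scale=0.1, size=(kernel, channels, channels))

    def __call__(self, x):
        # A single temporal convolution followed by tanh.
        pad = self.kernel // 2
        padded = np.pad(x, ((pad, pad), (0, 0)), mode="edge")
        out = np.zeros_like(x)
        for k in range(self.kernel):
            out += padded[k:k + x.shape[0]] @ self.weights[k]
        return np.tanh(out)


def synthesize(frame_counts, channels, noise_scale=1.0, seed=0):
    """Generate motion coarse-to-fine; returns the output of every level."""
    rng = np.random.default_rng(seed)
    generators = [ToyGenerator(channels, rng) for _ in frame_counts]

    # Coarsest level: the generator sees only random noise.
    noise = rng.normal(scale=noise_scale, size=(frame_counts[0], channels))
    motion = generators[0](noise)
    outputs = [motion]

    # Finer levels: upsample in time, inject fresh noise, add a residual.
    for gen, n_frames in zip(generators[1:], frame_counts[1:]):
        upsampled = upsample_time(motion, n_frames)
        noise = rng.normal(scale=noise_scale, size=upsampled.shape)
        motion = upsampled + gen(upsampled + noise)
        outputs.append(motion)
    return outputs


levels = synthesize(frame_counts=[8, 16, 32], channels=6)
print([level.shape for level in levels])
```

In this sketch, changing the noise injected at a coarse level alters the overall motion, while changing it at a fine level only perturbs detail. That is one way to read the "hierarchical control" the abstract mentions.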
