“Facial performance sensing head-mounted display” by Li, Trutoiu, Olszewski, Trutna, Hsieh, et al. …

Conference:

Type(s):

Title:

- Facial performance sensing head-mounted display

Session/Category Title:

- Face Reality

Presenter(s)/Author(s):

- Hao Li

- Laura Trutoiu

- Kyle Olszewski

- Tristan Trutna

- Pei-Lun Hsieh

- Aaron Nicholls

- Chongyang Ma

- Lingyu Wei

Moderator(s):

Abstract:

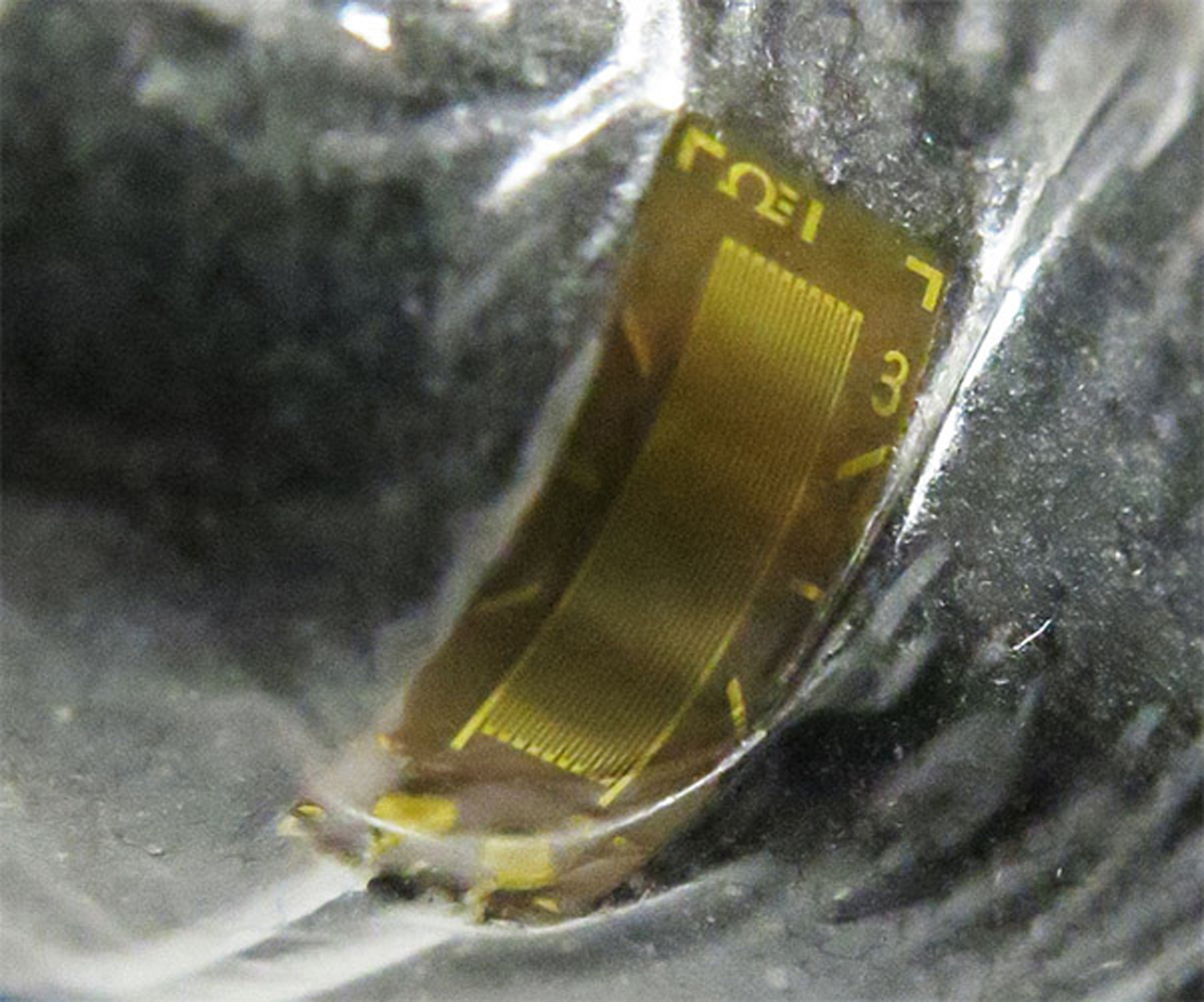

There are currently no solutions for enabling direct face-to-face interaction between virtual reality (VR) users wearing head-mounted displays (HMDs). The main challenge is that the headset obstructs a significant portion of a user’s face, preventing effective facial capture with traditional techniques. To advance virtual reality as a next-generation communication platform, we develop a novel HMD that enables 3D facial performance-driven animation in real-time. Our wearable system uses ultra-thin flexible electronic materials that are mounted on the foam liner of the headset to measure surface strain signals corresponding to upper face expressions. These strain signals are combined with a head-mounted RGB-D camera to enhance the tracking in the mouth region and to account for inaccurate HMD placement. To map the input signals to a 3D face model, we perform a single-instance offline training session for each person. For reusable and accurate online operation, we propose a short calibration step to readjust the Gaussian mixture distribution of the mapping before each use. The resulting animations are visually on par with cutting-edge depth sensor-driven facial performance capture systems and hence, are suitable for social interactions in virtual worlds.

References:

1. Abrash, M., 2012. Latency — the sine qua non of AR and VR. http://blogs.valvesoftware.com/abrash/latency-the-sine-qua-non-of-ar-and-vr/.Google Scholar

2. Bhat, K. S., Goldenthal, R., Ye, Y., Mallet, R., and Koperwas, M. 2013. High fidelity facial animation capture and retargeting with contours. In SCA ’13, 7–14. Google ScholarDigital Library

3. Bickel, B., Botsch, M., Angst, R., Matusik, W., Otaduy, M., Pfister, H., and Gross, M. 2007. Multi-scale capture of facial geometry and motion. ACM Trans. Graph. 26, 3. Google ScholarDigital Library

4. Blanz, V., and Vetter, T. 1999. A morphable model for the synthesis of 3d faces. In SIGGRAPH ’99, 187–194. Google ScholarDigital Library

5. Bouaziz, S., wang, Y., and Pauly, M. 2013. Online modeling for realtime facial animation. ACM Trans. Graph. 32, 4, 40:1–40:10. Google ScholarDigital Library

6. Brand, M. 1999. Voice puppetry. In SIGGRAPH’99, 21–28. Google ScholarDigital Library

7. Bregler, C., Covell, M., and Slaney, M. 1997. Video rewrite: Driving visual speech with audio. In SIGGRAPH ’97, 353–360. Google ScholarDigital Library

8. Cao, C., Weng, Y., Lin, S., and Zhou, K. 2013. 3d shape regression for real-time facial animation. ACM Trans. Graph. 32, 4, 41:1–41:10. Google ScholarDigital Library

9. Cao, C., Hou, Q., and Zhou, K. 2014. Displaced dynamic expression regression for real-time facial tracking and animation. ACM Trans. Graph. 33, 4, 43:1–43:10. Google ScholarDigital Library

10. Chai, J.-X., Xiao, J., and Hodgins, J. 2003. Vision-based control of 3d facial animation. In SCA ’03, 193–206. Google ScholarDigital Library

11. Chen, Y.-L., Wu, H.-T., Shi, F., Tong, X., and Chai, J. 2013. Accurate and robust 3d facial capture using a single rgbd camera. In ICCV, IEEE, 3615–3622. Google ScholarDigital Library

12. Cootes, T. F., Edwards, G. J., and Taylor, C. J. 2001. Active appearance models. IEEE TPAMI 23, 6, 681–685. Google ScholarDigital Library

13. Cristinacce, D., and Cootes, T. 2008. Automatic feature localisation with constrained local models. Pattern Recogn. 41, 10, 3054–3067. Google ScholarDigital Library

14. Ekman, P., and Friesen, W. 1978. Facial Action Coding System: A Technique for the Measurement of Facial Movement. Consulting Psychologists Press, Palo Alto.Google Scholar

15. Gales, M. 1998. Maximum likelihood linear transformations for hmm-based speech recognition. Computer Speech and Language 12, 75–98.Google ScholarCross Ref

16. Garrido, P., Valgaert, L., Wu, C., and Theobalt, C. 2013. Reconstructing detailed dynamic face geometry from monocular video. ACM Trans. Graph. 32, 6, 158:1–158:10. Google ScholarDigital Library

17. Gruebler, A., and Suzuki, K. 2014. Design of a wearable device for reading positive expressions from facial emg signals. Affective Computing, IEEE Transactions on 5, 3, 227–237.Google ScholarCross Ref

18. Hibbeler, R. 2005. Mechanics of Materials. Prentice Hall, Upper Saddle River.Google Scholar

19. Hsieh, P.-L., Ma, C., Yu, J., and Li, H. 2015. Unconstrained realtime facial performance capture. In CVPR, to appear.Google Scholar

20. Intel, 2014. Intel realsense ivcam. https://software.intel.com/en-us/intel-realsense-sdk.Google Scholar

21. Kramer, J., and Leifer, L. 1988. The talking glove. SIGCAPH Comput. Phys. Handicap., 39, 12–16. Google ScholarDigital Library

22. Li, H., Weise, T., and Pauly, M. 2010. Example-based facial rigging. ACM Trans. Graph. 29, 4, 32:1–32:6. Google ScholarDigital Library

23. Li, H., Yu, J., Ye, Y., and Bregler, C. 2013. Realtime facial animation with on-the-fly correctives. ACM Trans. Graph. 32, 4, 42:1–42:10. Google ScholarDigital Library

24. Lucero, J. C., and Munhall, K. G. 1999. A model of facial biomechanics for speech production. The Journal of the Acoustical Society of America 106, 5, 2834–2842.Google ScholarCross Ref

25. McFarland, D. J., and Wolpaw, J. R. 2011. Brain-computer interfaces for communication and control. Commun. ACM 54, 5, 60–66. Google ScholarDigital Library

26. NI, 2014. National instruments. http://www.ni.com/white-paper/3642/en/.Google Scholar

27. Oculus VR, 2014. Oculus rift dk2. https://www.oculus.com/dk2/.Google Scholar

28. Omega, 2014. Omega strain gages. http://www.omega.com/Pressure/pdf/KFH.pdf.Google Scholar

29. Parke, F. I., and Waters, K. 1996. Computer Facial Animation. A. K. Peters. Google ScholarDigital Library

30. Pighin, F., and Lewis, J. P. 2006. Performance-driven facial animation. In ACM SIGGRAPH 2006 Courses, SIGGRAPH ’06.Google Scholar

31. Rasmussen, C. E., and Williams, C. K. I. 2005. Gaussian Processes for Machine Learning (Adaptive Computation and Machine Learning). The MIT Press. Google ScholarDigital Library

32. Rusinkiewicz, S., and Levoy, M. 2001. Efficient variants of the icp algorithm. In International Conference on 3-D Digital Imaging and Modeling, IEEE, 145–152.Google Scholar

33. Saragih, J. M., Lucey, S., and Cohn, J. F. 2011. Deformable model fitting by regularized landmark mean-shift. International Journal of Computer Vision 91, 2, 200–215. Google ScholarDigital Library

34. Scheirer, J., Fernandez, R., and Picard, R. W. 1999. Expression glasses: A wearable device for facial expression recognition. In CHI EA ’99, 262–263. Google ScholarDigital Library

35. Sekitani, T., Kaltenbrunner, M., Yokota, T., and Someya, T. 2014. Imperceptible electronic skin. SID Symposium Digest of Technical Papers 45, 1, 122–125.Google ScholarCross Ref

36. Shi, F., Wu, H.-T., Tong, X., and Chai, J. 2014. Automatic acquisition of high-fidelity facial performances using monocular videos. ACM Trans. Graph. 33, 6, 222:1–222:13. Google ScholarDigital Library

37. Sifakis, E., Neverov, I., and Fedkiw, R. 2005. Automatic determination of facial muscle activations from sparse motion capture marker data. ACM Trans. Graph. 24, 3, 417–425. Google ScholarDigital Library

38. SMI, 2014. Sensomotoric instruments. http://www.smivision.com/.Google Scholar

39. Sugimoto, T., Fukushima, M., and Ibaraki, T. 1995. A parallel relaxation method for quadratic programming problems with interval constraints. Journal of Computational and Applied Mathematics 60, 12, 219–236. Google ScholarDigital Library

40. Sumner, R. W., and Popović, J. 2004. Deformation transfer for triangle meshes. ACM Trans. Graph. 23, 3, 399–405. Google ScholarDigital Library

41. Terzopoulos, D., and Waters, K. 1990. Physically-based facial modelling, analysis, and animation. The Journal of Visualization and Computer Animation 1, 2, 73–80.Google ScholarCross Ref

42. Weise, T., Bouaziz, S., Li, H., and Pauly, M. 2011. Realtime performance-based facial animation. ACM Trans. Graph. 30, 4, 77:1–77:10. Google ScholarDigital Library

43. Xiong, X., and De la Torre, F. 2013. Supervised descent method and its application to face alignment. In CVPR, IEEE, 532–539. Google ScholarDigital Library