“Example-based Motion Synthesis via Generative Motion Matching” by Li, Chen, Li, Sorkine-Hornung and Chen

Title:

- Example-based Motion Synthesis via Generative Motion Matching

Session/Category Title: Character Animation

Abstract:

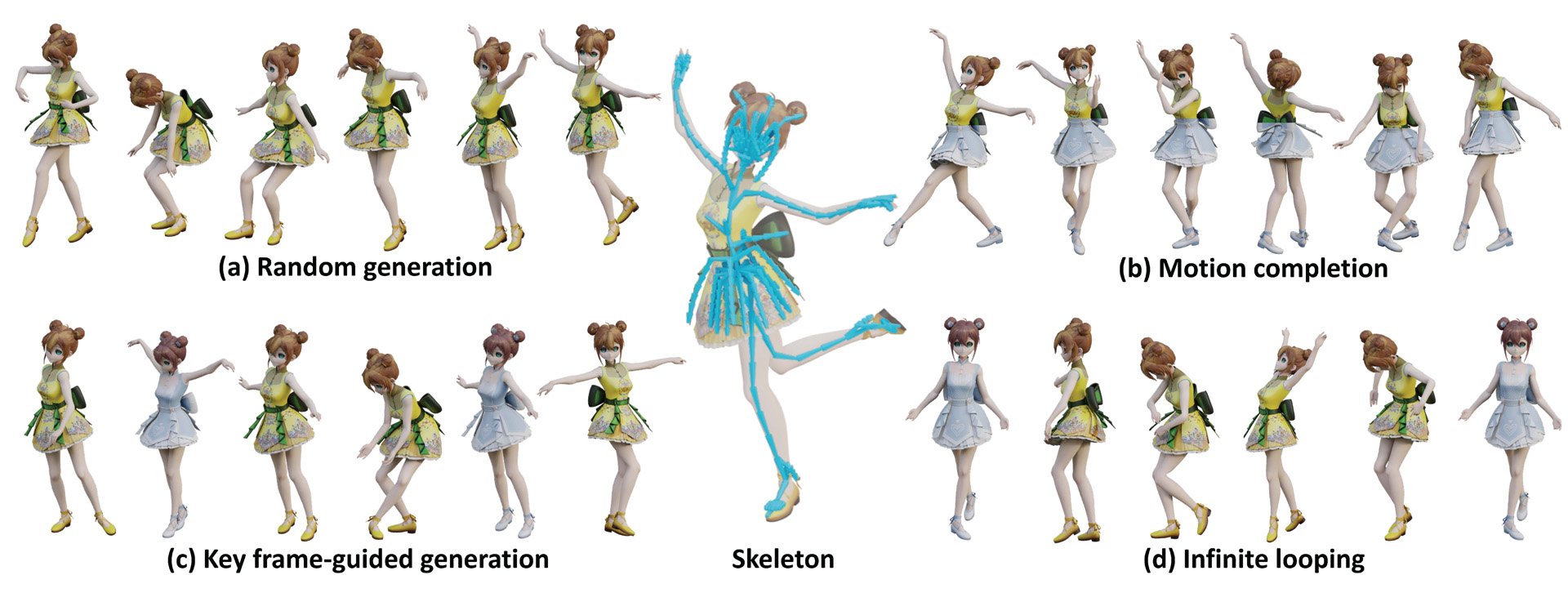

We present GenMM, a generative model that “mines” as many diverse motions as possible from a single or a few example sequences. In stark contrast to existing data-driven methods, which typically require long offline training times, are prone to visual artifacts, and tend to fail on large and complex skeletons, GenMM inherits the training-free nature and superior quality of the well-known Motion Matching method. GenMM can synthesize a high-quality motion within a fraction of a second, even for highly complex and large skeletal structures. At the heart of our generative framework lies the generative motion matching module, which uses bidirectional visual similarity as a generative cost function for motion matching and operates in a multi-stage framework to progressively refine a random guess using exemplar motion matches. In addition to diverse motion generation, we show the versatility of our generative framework by extending it to a number of scenarios that are not possible with motion matching alone, including motion completion, keyframe-guided generation, infinite looping, and motion reassembly.
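The matching-and-refinement loop described in the abstract can be illustrated with a small sketch. This is a hypothetical, plain-Python illustration under stated assumptions, not the authors' implementation: a motion is assumed to be a list of frames, each a flat list of pose features; temporal patches are windows of `size` consecutive frames; the bidirectional similarity cost is approximated by the normalized nearest-neighbour distance of Granot et al. [16], where each patch distance is divided by `alpha` plus how well the exemplar patch is already covered; overlapping matches are blended by plain averaging; and the random initial guess, the 2× temporal pyramid, and every name (`patches`, `match_step`, `resample`, `generate`) and parameter (`size`, `alpha`, `stages`, `iters`) are illustrative choices.

```python
import random

# Hypothetical sketch, not GenMM's actual code or API.
# A motion is a list of frames; each frame is a flat list of pose features.
Motion = list[list[float]]


def patches(motion: Motion, size: int) -> list[list[float]]:
    """All overlapping windows of `size` consecutive frames, each flattened."""
    return [
        [x for frame in motion[t:t + size] for x in frame]
        for t in range(len(motion) - size + 1)
    ]


def sq_dist(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_step(synth: Motion, example: Motion, size: int,
               alpha: float = 0.01) -> Motion:
    """Replace each synthesized patch by its best exemplar match, then blend."""
    q = patches(synth, size)
    k = patches(example, size)
    d = [[sq_dist(qi, kj) for kj in k] for qi in q]
    # Completeness term (assumed, after Granot et al. [16]): divide by how well
    # each exemplar patch is already covered, so exemplar patches that no output
    # patch resembles yet become cheaper to pick.
    covered = [min(d[i][j] for i in range(len(q))) for j in range(len(k))]
    nearest = [
        min(range(len(k)), key=lambda j: d[i][j] / (alpha + covered[j]))
        for i in range(len(q))
    ]
    # Blend: each output frame is the mean of all matched exemplar frames
    # that overlap it.
    n_feat = len(example[0])
    acc = [[0.0] * n_feat for _ in synth]
    cnt = [0] * len(synth)
    for i, j in enumerate(nearest):
        for off in range(size):
            for f in range(n_feat):
                acc[i + off][f] += example[j + off][f]
            cnt[i + off] += 1
    return [[v / cnt[t] for v in acc[t]] for t in range(len(synth))]


def resample(motion: Motion, length: int) -> Motion:
    """Linearly interpolate `motion` in time to `length` frames."""
    n = len(motion)
    out = []
    for t in range(length):
        s = t * (n - 1) / (length - 1) if length > 1 else 0.0
        i0 = int(s)
        i1 = min(i0 + 1, n - 1)
        w = s - i0
        out.append([(1 - w) * a + w * b
                    for a, b in zip(motion[i0], motion[i1])])
    return out


def generate(example: Motion, out_len: int, stages: int = 3, size: int = 5,
             iters: int = 2, seed: int = 0) -> Motion:
    """Coarse-to-fine synthesis: refine a random guess stage by stage."""
    rng = random.Random(seed)
    synth: Motion = []
    for s in range(stages):
        scale = 2.0 ** (s - stages + 1)  # e.g. 1/4, 1/2, 1 for three stages
        ex = resample(example, max(size, round(len(example) * scale)))
        n = max(size, round(out_len * scale))
        if not synth:
            # Assumed initial guess: exemplar frames drawn at random.
            synth = [list(rng.choice(ex)) for _ in range(n)]
        else:
            synth = resample(synth, n)  # upsample the previous stage's result
        for _ in range(iters):
            synth = match_step(synth, ex, size)
    return synth
```

For example, `generate([[float(t % 10)] for t in range(40)], 60)` assembles 60 single-feature frames from a 40-frame sawtooth exemplar. The all-pairs distance matrix keeps the sketch short but grows quadratically with sequence length; a practical version would vectorize it or use an approximate nearest-neighbour search.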

References:

1. Adobe Systems Inc. 2022. Mixamo. https://www.mixamo.com Accessed: 2022-03-25.

2. Okan Arikan and David A Forsyth. 2002. Interactive motion generation from examples. ACM Transactions on Graphics (TOG) 21, 3 (2002), 483–490.

3. Connelly Barnes, Eli Shechtman, Adam Finkelstein, and Dan B Goldman. 2009. PatchMatch: A randomized correspondence algorithm for structural image editing. ACM Transactions on Graphics (TOG) 28, 3 (2009), 24.

4. Connelly Barnes and Fang-Lue Zhang. 2017. A survey of the state-of-the-art in patch-based synthesis. Computational Visual Media 3, 1 (2017), 3–20.

5. Blender Online Community. 2023. Blender – a 3D modelling and rendering package. Blender Foundation, Blender Institute, Amsterdam.

6. Richard Bowden. 2000. Learning statistical models of human motion. In IEEE Workshop on Human Modeling, Analysis and Synthesis, CVPR.

7. Matthew Brand and Aaron Hertzmann. 2000. Style machines. In Proceedings of the 27th annual conference on Computer graphics and interactive techniques. 183–192.

8. Peter J Burt and Edward H Adelson. 1987. The Laplacian pyramid as a compact image code. In Readings in computer vision. Elsevier, 671–679.

9. Michael Büttner. 2019. Machine learning for motion synthesis and character control in games. Proc. of I3D 2019 (2019).

10. Michael Büttner and Simon Clavet. 2015. Motion Matching – The Road to Next Gen Animation. https://www.youtube.com/watch?v=z_wpgHFSWss&t=658s

11. Jinxiang Chai and Jessica K Hodgins. 2007. Constraint-based motion optimization using a statistical dynamic model. In ACM SIGGRAPH 2007 papers. 8–es.

12. Jeremy S De Bonet. 1997. Multiresolution sampling procedure for analysis and synthesis of texture images. In Proceedings of the 24th annual conference on Computer graphics and interactive techniques. 361–368.

13. Yinglin Duan, Yue Lin, Zhengxia Zou, Yi Yuan, Zhehui Qian, and Bohan Zhang. 2022. A Unified Framework for Real Time Motion Completion. (2022).

14. Katerina Fragkiadaki, Sergey Levine, Panna Felsen, and Jitendra Malik. 2015. Recurrent network models for human dynamics. In Proceedings of the IEEE International Conference on Computer Vision. 4346–4354.

15. Ian J Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron C Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In NIPS.

16. Niv Granot, Ben Feinstein, Assaf Shocher, Shai Bagon, and Michal Irani. 2022. Drop the GAN: In defense of patches nearest neighbors as single image generative models. In Conference on Computer Vision and Pattern Recognition (CVPR). 13460–13469.

17. Keith Grochow, Steven L Martin, Aaron Hertzmann, and Zoran Popović. 2004. Style-based inverse kinematics. In ACM SIGGRAPH 2004 Papers. 522–531.

18. Ikhsanul Habibie, Mohamed Elgharib, Kripasindhu Sarkar, Ahsan Abdullah, Simbarashe Nyatsanga, Michael Neff, and Christian Theobalt. 2022. A Motion Matching-based Framework for Controllable Gesture Synthesis from Speech. In ACM SIGGRAPH 2022 Conference Proceedings. 1–9.

19. Charles Han, Eric Risser, Ravi Ramamoorthi, and Eitan Grinspun. 2008. Multiscale texture synthesis. In ACM SIGGRAPH 2008 papers. 1–8.

20. Geof Harrower. 2018. Real player motion tech in ‘EA Sports UFC 3’. Proc. of GDC 2018 (2018).

21. Félix G Harvey, Mike Yurick, Derek Nowrouzezahrai, and Christopher Pal. 2020. Robust motion in-betweening. ACM Transactions on Graphics (TOG) 39, 4 (2020), Article 60.

22. Chengan He, Jun Saito, James Zachary, Holly Rushmeier, and Yi Zhou. 2022. NeMF: Neural Motion Fields for Kinematic Animation. In Advances in Neural Information Processing Systems.

23. David J Heeger and James R Bergen. 1995. Pyramid-based texture analysis/synthesis. In Proceedings of the 22nd annual conference on Computer graphics and interactive techniques. 229–238.

24. Gustav Eje Henter, Simon Alexanderson, and Jonas Beskow. 2020. MoGlow: Probabilistic and controllable motion synthesis using normalising flows. ACM Transactions on Graphics (TOG) 39, 6 (2020), 1–14.

25. Daniel Holden, Oussama Kanoun, Maksym Perepichka, and Tiberiu Popa. 2020. Learned motion matching. ACM Transactions on Graphics (TOG) 39, 4 (2020), Article 53.

26. Daniel Holden, Taku Komura, and Jun Saito. 2017. Phase-functioned neural networks for character control. ACM Transactions on Graphics (TOG) 36, 4 (2017), 1–13.

27. Daniel Holden, Jun Saito, and Taku Komura. 2016. A deep learning framework for character motion synthesis and editing. ACM Transactions on Graphics (TOG) 35, 4 (2016), 1–11.

28. Daniel Holden, Jun Saito, Taku Komura, and Thomas Joyce. 2015. Learning motion manifolds with convolutional autoencoders. In SIGGRAPH Asia 2015 technical briefs. 1–4.

29. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. CVPR (2017).

30. Deok-Kyeong Jang, Soomin Park, and Sung-Hee Lee. 2022. Motion Puzzle: Arbitrary Motion Style Transfer by Body Part. ACM Transactions on Graphics (TOG) (2022).

31. Tero Karras, Timo Aila, Samuli Laine, and Jaakko Lehtinen. 2018. Progressive Growing of GANs for Improved Quality, Stability, and Variation. In International Conference on Learning Representations.

32. Lucas Kovar, Michael Gleicher, and Frédéric Pighin. 2002. Motion Graphs. In Proceedings of the 29th Annual Conference on Computer Graphics and Interactive Techniques (San Antonio, Texas) (SIGGRAPH ’02). Association for Computing Machinery, New York, NY, USA, 473–482.

33. Jehee Lee, Jinxiang Chai, Paul SA Reitsma, Jessica K Hodgins, and Nancy S Pollard. 2002. Interactive control of avatars animated with human motion data. In Proceedings of the 29th annual conference on Computer graphics and interactive techniques. 491–500.

34. Seyoung Lee, Jiye Lee, and Jehee Lee. 2022. Learning Virtual Chimeras by Dynamic Motion Reassembly. ACM Trans. Graph. 41, 6, Article 182 (2022).

35. Yongjoon Lee, Kevin Wampler, Gilbert Bernstein, Jovan Popović, and Zoran Popović. 2010. Motion fields for interactive character locomotion. In ACM Transactions on Graphics (TOG). 1–8.

36. Sergey Levine, Jack M Wang, Alexis Haraux, Zoran Popović, and Vladlen Koltun. 2012. Continuous character control with low-dimensional embeddings. ACM Transactions on Graphics (TOG) 31, 4 (2012), 1–10.

37. Peizhuo Li, Kfir Aberman, Zihan Zhang, Rana Hanocka, and Olga Sorkine-Hornung. 2022. GANimator: Neural Motion Synthesis from a Single Sequence. ACM Transactions on Graphics (TOG) 41, 4 (2022), Article 138.

38. Yan Li, Tianshu Wang, and Heung-Yeung Shum. 2002. Motion texture: a two-level statistical model for character motion synthesis. In Proceedings of the 29th annual conference on Computer graphics and interactive techniques. 465–472.

39. Dario Pavllo, David Grangier, and Michael Auli. 2018. QuaterNet: A quaternion-based recurrent model for human motion. arXiv preprint arXiv:1805.06485 (2018).

40. Xue Bin Peng, Pieter Abbeel, Sergey Levine, and Michiel Van de Panne. 2018. DeepMimic: Example-guided deep reinforcement learning of physics-based character skills. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–14.

41. Xue Bin Peng, Ze Ma, Pieter Abbeel, Sergey Levine, and Angjoo Kanazawa. 2021. AMP: Adversarial motion priors for stylized physics-based character control. ACM Transactions on Graphics (TOG) 40, 4 (2021), 1–20.

42. Ken Perlin. 1985. An image synthesizer. ACM SIGGRAPH Computer Graphics 19, 3 (1985), 287–296.

43. Ken Perlin and Athomas Goldberg. 1996. Improv: A system for scripting interactive actors in virtual worlds. In Proceedings of the 23rd annual conference on Computer graphics and interactive techniques. 205–216.

44. Katherine Pullen and Christoph Bregler. 2000. Animating by multi-level sampling. In Proceedings Computer Animation 2000. IEEE, 36–42.

45. Katherine Pullen and Christoph Bregler. 2002. Motion capture assisted animation: Texturing and synthesis. In Proceedings of the 29th annual conference on Computer graphics and interactive techniques. 501–508.

46. Jia Qin, Youyi Zheng, and Kun Zhou. 2022. Motion In-betweening via Two-stage Transformers. ACM Transactions on Graphics (TOG) 41, 6 (2022), 1–16.

47. Sigal Raab, Inbal Leibovitch, Peizhuo Li, Kfir Aberman, Olga Sorkine-Hornung, and Daniel Cohen-Or. 2023a. MoDi: Unconditional Motion Synthesis from Diverse Data. (2023).

48. Sigal Raab, Inbal Leibovitch, Guy Tevet, Moab Arar, Amit H Bermano, and Daniel Cohen-Or. 2023b. Single Motion Diffusion. arXiv preprint arXiv:2302.05905 (2023).

49. Davis Rempe, Tolga Birdal, Aaron Hertzmann, Jimei Yang, Srinath Sridhar, and Leonidas J Guibas. 2021. HuMoR: 3D human motion model for robust pose estimation. In Proceedings of the IEEE/CVF international conference on computer vision. 11488–11499.

50. Tamar Rott Shaham, Tali Dekel, and Tomer Michaeli. 2019. SinGAN: Learning a generative model from a single natural image. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 4570–4580.

51. Mingyi Shi, Kfir Aberman, Andreas Aristidou, Taku Komura, Dani Lischinski, Daniel Cohen-Or, and Baoquan Chen. 2020. MotioNet: 3D human motion reconstruction from monocular video with skeleton consistency. ACM Transactions on Graphics (TOG) 40, 1 (2020), 1–15.

52. Denis Simakov, Yaron Caspi, Eli Shechtman, and Michal Irani. 2008. Summarizing visual data using bidirectional similarity. In Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 1–8.

53. Sebastian Starke, Ian Mason, and Taku Komura. 2022. DeepPhase: Periodic autoencoders for learning motion phase manifolds. ACM Transactions on Graphics (TOG) 41, 4 (2022), 1–13.

54. Guy Tevet, Brian Gordon, Amir Hertz, Amit H Bermano, and Daniel Cohen-Or. 2022a. MotionCLIP: Exposing Human Motion Generation to CLIP Space. arXiv preprint arXiv:2203.08063 (2022).

55. Guy Tevet, Sigal Raab, Brian Gordon, Yonatan Shafir, Daniel Cohen-Or, and Amit H Bermano. 2022b. Human motion diffusion model. arXiv preprint arXiv:2209.14916 (2022).

56. Truebones Motions Animation Studios. 2022. Truebones. https://truebones.gumroad.com/ Accessed: 2022-09-02.

57. Jonathan Tseng, Rodrigo Castellon, and C Karen Liu. 2022. EDGE: Editable Dance Generation From Music. arXiv preprint arXiv:2211.10658 (2022).

58. Aaron van den Oord, Oriol Vinyals, et al. 2017. Neural discrete representation learning. Advances in Neural Information Processing Systems 30 (2017).

59. Jack M Wang, David J Fleet, and Aaron Hertzmann. 2007. Gaussian process dynamical models for human motion. IEEE Transactions on Pattern Analysis and Machine Intelligence 30, 2 (2007), 283–298.

60. Li-Yi Wei, Sylvain Lefebvre, Vivek Kwatra, and Greg Turk. 2009. State of the art in example-based texture synthesis. Eurographics 2009, State of the Art Report, EG-STAR (2009), 93–117.

61. Li-Yi Wei and Marc Levoy. 2000. Fast texture synthesis using tree-structured vector quantization. In Proceedings of the 27th annual conference on Computer graphics and interactive techniques. 479–488.

62. Mengyi Zhao, Mengyuan Liu, Bin Ren, Shuling Dai, and Nicu Sebe. 2023. MoDiff: Action-Conditioned 3D Motion Generation with Denoising Diffusion Probabilistic Models. arXiv preprint arXiv:2301.03949 (2023).

63. Yi Zhou, Connelly Barnes, Jingwan Lu, Jimei Yang, and Hao Li. 2019. On the continuity of rotation representations in neural networks. In Conference on Computer Vision and Pattern Recognition (CVPR). 5745–5753.

64. Yi Zhou, Zimo Li, Shuangjiu Xiao, Chong He, Zeng Huang, and Hao Li. 2018. Auto-conditioned recurrent networks for extended complex human motion synthesis. In International Conference on Learning Representations.