“DeepFaceVideoEditing: sketch-based deep editing of face videos” by Liu, Chen, Lai, Li, Jiang, et al. …

Conference:

Type(s):

Title:

- DeepFaceVideoEditing: sketch-based deep editing of face videos

Presenter(s)/Author(s):

Abstract:

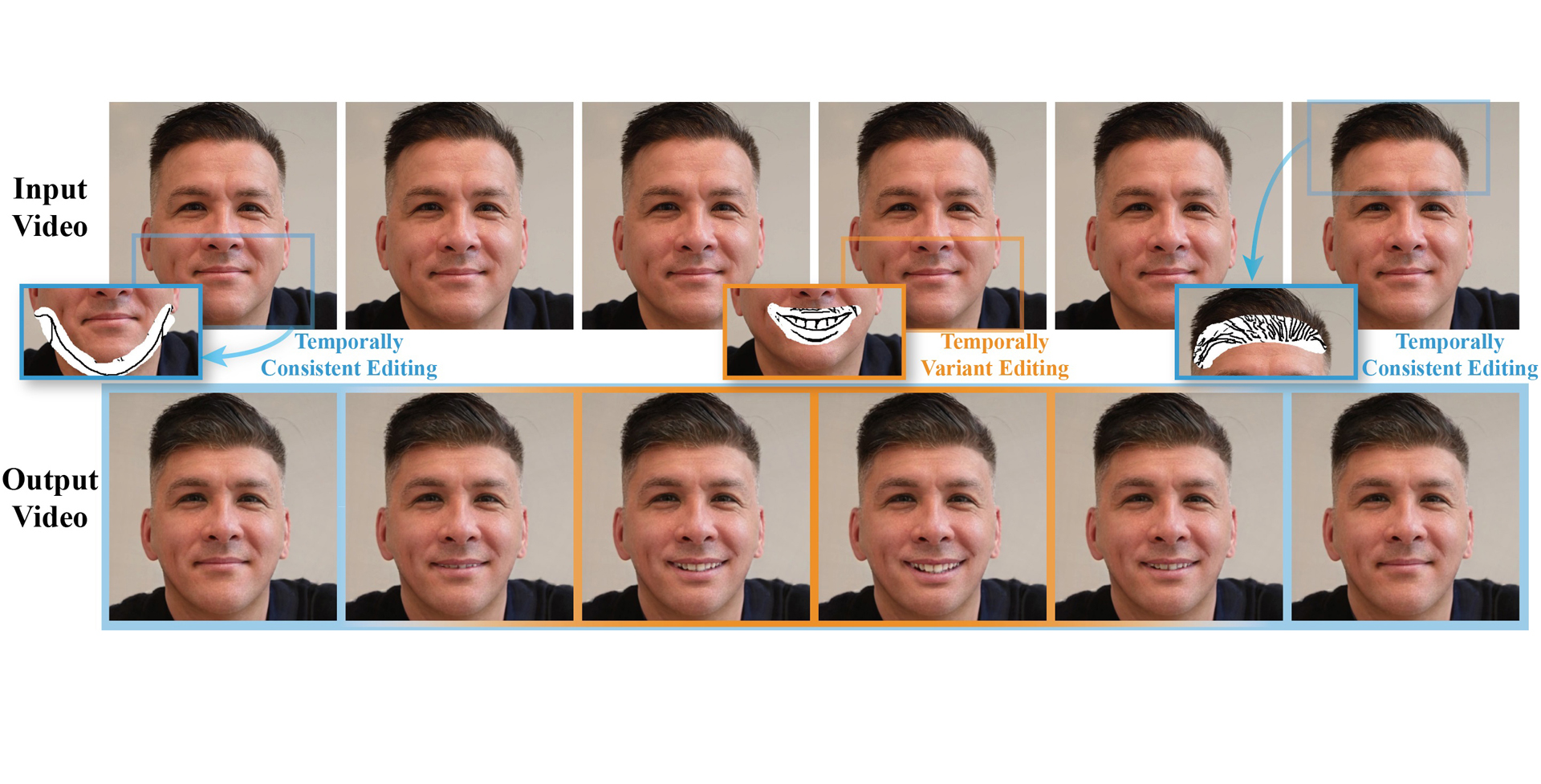

Sketches, which are simple and concise, have been used in recent deep image synthesis methods to allow intuitive generation and editing of facial images. However, it is nontrivial to extend such methods to video editing due to various challenges, ranging from appropriate manipulation propagation and fusion of multiple editing operations to ensure temporal coherence and visual quality. To address these issues, we propose a novel sketch-based facial video editing framework, in which we represent editing manipulations in latent space and propose specific propagation and fusion modules to generate high-quality video editing results based on StyleGAN3. Specifically, we first design an optimization approach to represent sketch editing manipulations by editing vectors, which are propagated to the whole video sequence using a proper strategy to cope with different editing needs. Specifically, input editing operations are classified into two categories: temporally consistent editing and temporally variant editing. The former (e.g., change of face shape) is applied to the whole video sequence directly, while the latter (e.g., change of facial expression or dynamics) is propagated with the guidance of expression or only affects adjacent frames in a given time window. Since users often perform different editing operations in multiple frames, we further present a region-aware fusion approach to fuse diverse editing effects. Our method supports video editing on facial structure and expression movement by sketch, which cannot be achieved by previous works. Both qualitative and quantitative evaluations show the superior editing ability of our system to existing and alternative solutions.

References:

1. Rameen Abdal, Peihao Zhu, Niloy J Mitra, and Peter Wonka. 2021. StyleFlow: Attribute-conditioned exploration of StyleGAN-generated images using conditional continuous normalizing flows. ACM Trans. Graph. 40, 3 (2021), 21:1–21:21.Google ScholarDigital Library

2. Yuval Alaluf, Or Patashnik, and Daniel Cohen-Or. 2021a. Only a matter of style: age transformation using a style-based regression model. ACM Trans. Graph. 40, 4 (2021), 45:1–45:12.Google ScholarDigital Library

3. Yuval Alaluf, Omer Tov, Ron Mokady, Rinon Gal, and Amit H. Bermano. 2021b. HyperStyle: StyleGAN Inversion with HyperNetworks for Real Image Editing. CoRR abs/2111.15666 (2021).Google Scholar

4. Kiran S. Bhat, Steven M. Seitz, Jessica K. Hodgins, and Pradeep K. Khosla. 2004. Flowbased video synthesis and editing. ACM Trans. Graph. 23, 3 (2004), 360–363.Google ScholarDigital Library

5. Mikolaj Binkowski, Danica J. Sutherland, Michael Arbel, and Arthur Gretton. 2018. Demystifying MMD GANs. In ICLR.Google Scholar

6. Shu-Yu Chen, Feng-Lin Liu, Yu-Kun Lai, Paul L. Rosin, Chunpeng Li, Hongbo Fu, and Lin Gao. 2021. DeepFaceEditing: deep face generation and editing with disentangled geometry and appearance control. ACM Trans. Graph. 40, 4 (2021), 90:1–90:15.Google ScholarDigital Library

7. Shu-Yu Chen, Wanchao Su, Lin Gao, Shihong Xia, and Hongbo Fu. 2020. DeepFace-Drawing: deep generation of face images from sketches. ACM Trans. Graph. 39, 4 (2020), 72:1–72:16.Google ScholarDigital Library

8. Yu Deng, Jiaolong Yang, Sicheng Xu, Dong Chen, Yunde Jia, and Xin Tong. 2019. Accurate 3D face reconstruction with weakly-supervised learning: From single image to image set. In CVPR Workshops. 285–295.Google ScholarCross Ref

9. Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge. 2016. Image Style Transfer Using Convolutional Neural Networks. In CVPR. 2414–2423.Google Scholar

10. Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron C. Courville, and Yoshua Bengio. 2014. Generative Adversarial Networks. CoRR abs/1406.2661 (2014). arXiv:1406.2661Google Scholar

11. Shuyang Gu, Jianmin Bao, Hao Yang, Dong Chen, Fang Wen, and Lu Yuan. 2019. Mask-Guided Portrait Editing With Conditional GANs. In CVPR. 3436–3445.Google Scholar

12. Erik Härkönen, Aaron Hertzmann, Jaakko Lehtinen, and Sylvain Paris. 2020. Ganspace: Discovering interpretable gan controls. (2020), 9841–9850.Google Scholar

13. Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2017. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In NeurIPS. 6626–6637.Google Scholar

14. Shi-Min Hu, Dun Liang, Guo-Ye Yang, Guo-Wei Yang, and Wen-Yang Zhou. 2020. Jittor: a novel deep learning framework with meta-operators and unified graph execution. Science China Information Sciences 63, 222103 (2020), 1–21.Google Scholar

15. Jia-Bin Huang, Sing Bing Kang, Narendra Ahuja, and Johannes Kopf. 2016. Temporally coherent completion of dynamic video. ACM Trans. Graph. 35, 6 (2016), 196:1–196:11.Google ScholarDigital Library

16. Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. In CVPR. 5967–5976.Google Scholar

17. Ondrej Jamriska, Sárka Sochorová, Ondrej Texler, Michal Lukác, Jakub Fiser, Jingwan Lu, Eli Shechtman, and Daniel Sýkora. 2019. Stylizing video by example. ACM Trans. Graph. 38, 4 (2019), 107:1–107:11.Google ScholarDigital Library

18. Youngjoo Jo and Jongyoul Park. 2019. SC-FEGAN: Face Editing Generative Adversarial Network With User’s Sketch and Color. In ICCV. 1745–1753.Google Scholar

19. Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2021. Alias-free generative adversarial networks. NeurIPS (2021), 852–863.Google Scholar

20. Tero Karras, Samuli Laine, and Timo Aila. 2019. A Style-Based Generator Architecture for Generative Adversarial Networks. In CVPR. 4401–4410.Google Scholar

21. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and Improving the Image Quality of StyleGAN. In CVPR. 8107–8116.Google Scholar

22. Yoni Kasten, Dolev Ofri, Oliver Wang, and Tali Dekel. 2021. Layered Neural Atlases for Consistent Video Editing. ACM Trans. Graph. 40, 6 (2021), 210:1–210:12.Google ScholarDigital Library

23. Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).Google Scholar

24. Cheng-Han Lee, Ziwei Liu, Lingyun Wu, and Ping Luo. 2020. MaskGAN: Towards Diverse and Interactive Facial Image Manipulation. In CVPR. 5548–5557.Google Scholar

25. Chenyang Lei and Qifeng Chen. 2019. Fully automatic video colorization with self-regularization and diversity. In CVPR. 3753–3761.Google Scholar

26. Huan Ling, Karsten Kreis, Daiqing Li, Seung Wook Kim, Antonio Torralba, and Sanja Fidler. 2021. EditGAN: High-Precision Semantic Image Editing. NeurIPS (2021), 16331–16345.Google Scholar

27. Yongyi Lu, Shangzhe Wu, Yu-Wing Tai, and Chi-Keung Tang. 2018. Image Generation from Sketch Constraint Using Contextual GAN. In ECCV, Vol. 11220. 213–228.Google Scholar

28. BR Mallikarjun, Ayush Tewari, Abdallah Dib, Tim Weyrich, Bernd Bickel, Hans Peter Seidel, Hanspeter Pfister, Wojciech Matusik, Louis Chevallier, Mohamed A Elgharib, et al. 2021. PhotoApp: Photorealistic appearance editing of head portraits. ACM Trans. Graph. 40, 4 (2021), 44:1–44:16.Google Scholar

29. Simone Meyer, Victor Cornillère, Abdelaziz Djelouah, Christopher Schroers, and Markus H. Gross. 2018. Deep Video Color Propagation. In British Machine Vision Conference (BMVC). 128.Google Scholar

30. Mehdi Mirza and Simon Osindero. 2014. Conditional Generative Adversarial Nets. CoRR abs/1411.1784 (2014). arXiv:1411.1784Google Scholar

31. Prashant Pandey, Mrigank Raman, Sumanth Varambally, and Prathosh AP. 2021. Generalization on Unseen Domains via Inference-Time Label-Preserving Target Projections. In CVPR. 12924–12933.Google Scholar

32. A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, and et al. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In NeurIPS. 8024–8035.Google Scholar

33. Or Patashnik, Zongze Wu, Eli Shechtman, Daniel Cohen-Or, and Dani Lischinski. 2021. Styleclip: Text-driven manipulation of stylegan imagery. In ICCV. 2085–2094.Google Scholar

34. Tiziano Portenier, Qiyang Hu, Attila Szabó, Siavash Arjomand Bigdeli, Paolo Favaro, and Matthias Zwicker. 2018. FaceShop: deep sketch-based face image editing. ACM Trans. Graph. 37, 4 (2018), 99:1–99:13.Google ScholarDigital Library

35. Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning Transferable Visual Models From Natural Language Supervision. In ICML, Marina Meila and Tong Zhang (Eds.), Vol. 139. 8748–8763.Google Scholar

36. Elad Richardson, Yuval Alaluf, Or Patashnik, Yotam Nitzan, Yaniv Azar, Stav Shapiro, and Daniel Cohen-Or. 2021. Encoding in Style: A StyleGAN Encoder for Image-to-Image Translation. In CVPR. 2287–2296.Google Scholar

37. Daniel Roich, Ron Mokady, Amit H. Bermano, and Daniel Cohen-Or. 2021. Pivotal Tuning for Latent-based Editing of Real Images. CoRR abs/2106.05744 (2021).Google Scholar

38. Manuel Ruder, Alexey Dosovitskiy, and Thomas Brox. 2018. Artistic Style Transfer for Videos and Spherical Images. Int. J. Comput. Vis. 126, 11 (2018), 1199–1219.Google ScholarDigital Library

39. Yujun Shen, Jinjin Gu, Xiaoou Tang, and Bolei Zhou. 2020. Interpreting the latent space of gans for semantic face editing. In CVPR. 9243–9252.Google Scholar

40. Aliaksandr Siarohin, Stéphane Lathuilière, Sergey Tulyakov, Elisa Ricci, and Nicu Sebe. 2019. First order motion model for image animation. NeurIPS (2019), 7137–7147.Google Scholar

41. Ayush Tewari, Mohamed Elgharib, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhöfer, and Christian Theobalt. 2020a. PIE: Portrait image embedding for semantic control. ACM Trans. Graph. 39, 6 (2020), 223:1–223:14.Google ScholarDigital Library

42. Ayush Tewari, Mohamed Elgharib, Gaurav Bharaj, Florian Bernard, Hans-Peter Seidel, Patrick Pérez, Michael Zollhofer, and Christian Theobalt. 2020b. StyleRig: Rigging stylegan for 3D control over portrait images. In CVPR. 6142–6151.Google Scholar

43. Ondrej Texler, David Futschik, Michal Kucera, Ondrej Jamriska, Sárka Sochorová, Menglei Chai, Sergey Tulyakov, and Daniel Sýkora. 2020. Interactive video stylization using few-shot patch-based training. ACM Trans. Graph. 39, 4 (2020), 73:1–73:11.Google ScholarDigital Library

44. Yu Tian, Jian Ren, Menglei Chai, Kyle Olszewski, Xi Peng, Dimitris N. Metaxas, and Sergey Tulyakov. 2021. A Good Image Generator Is What You Need for High-Resolution Video Synthesis. In ICLR.Google Scholar

45. Omer Tov, Yuval Alaluf, Yotam Nitzan, Or Patashnik, and Daniel Cohen-Or. 2021. Designing an encoder for StyleGAN image manipulation. ACM Trans. Graph. 40, 4 (2021), 133:1–133:14.Google ScholarDigital Library

46. Carl Vondrick, Abhinav Shrivastava, Alireza Fathi, Sergio Guadarrama, and Kevin Murphy. 2018. Tracking Emerges by Colorizing Videos. In ECCV, Vittorio Ferrari, Martial Hebert, Cristian Sminchisescu, and Yair Weiss (Eds.), Vol. 11217. 402–419.Google Scholar

47. Ting-Chun Wang, Ming-Yu Liu, Andrew Tao, Guilin Liu, Bryan Catanzaro, and Jan Kautz. 2019. Few-shot Video-to-Video Synthesis. In NeurIPS. 5014–5025.Google Scholar

48. Yiqian Wu, Yong-Liang Yang, Qinjie Xiao, and Xiaogang Jin. 2021. Coarse-to-fine: facial structure editing of portrait images via latent space classifications. ACM Trans. Graph. 40, 4 (2021), 46:1–46:13.Google ScholarDigital Library

49. Chao Yang, Xin Lu, Zhe Lin, Eli Shechtman, Oliver Wang, and Hao Li. 2017. High-Resolution Image Inpainting Using Multi-scale Neural Patch Synthesis. In CVPR. 4076–4084.Google Scholar

50. Shuai Yang, Zhangyang Wang, Jiaying Liu, and Zongming Guo. 2020. Deep Plastic Surgery: Robust and Controllable Image Editing with Human-Drawn Sketches. In ECCV, Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm (Eds.), Vol. 12360. 601–617.Google Scholar

51. Xu Yao, Alasdair Newson, Yann Gousseau, and Pierre Hellier. 2021. A Latent Transformer for Disentangled Face Editing in Images and Videos. In ICCV. 13789–13798.Google Scholar

52. Changqian Yu, Changxin Gao, Jingbo Wang, Gang Yu, Chunhua Shen, and Nong Sang. 2021. BiSeNet v2: Bilateral network with guided aggregation for real-time semantic segmentation. International Journal of Computer Vision 129, 11 (2021), 3051–3068.Google ScholarDigital Library

53. Bo Zhang, Mingming He, Jing Liao, Pedro V. Sander, Lu Yuan, Amine Bermak, and Dong Chen. 2019. Deep Exemplar-Based Video Colorization. In CVPR. 8052–8061.Google Scholar

54. Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR. 586–595.Google Scholar

55. Peihao Zhu, Rameen Abdal, John Femiani, and Peter Wonka. 2021. Barbershop: GAN-Based Image Compositing Using Segmentation Masks. ACM Trans. Graph. 40, 6 (2021), 215:1–215:13.Google ScholarDigital Library

56. Peihao Zhu, Rameen Abdal, Yipeng Qin, and Peter Wonka. 2020. SEAN: Image Synthesis With Semantic Region-Adaptive Normalization. In CVPR. 5103–5112.Google Scholar