“DeepFocus: Learned Image Synthesis for Computational Display”

Conference:

Type(s):

Entry Number: 04

Title:

- DeepFocus: Learned Image Synthesis for Computational Display

Presenter(s)/Author(s):

Abstract:

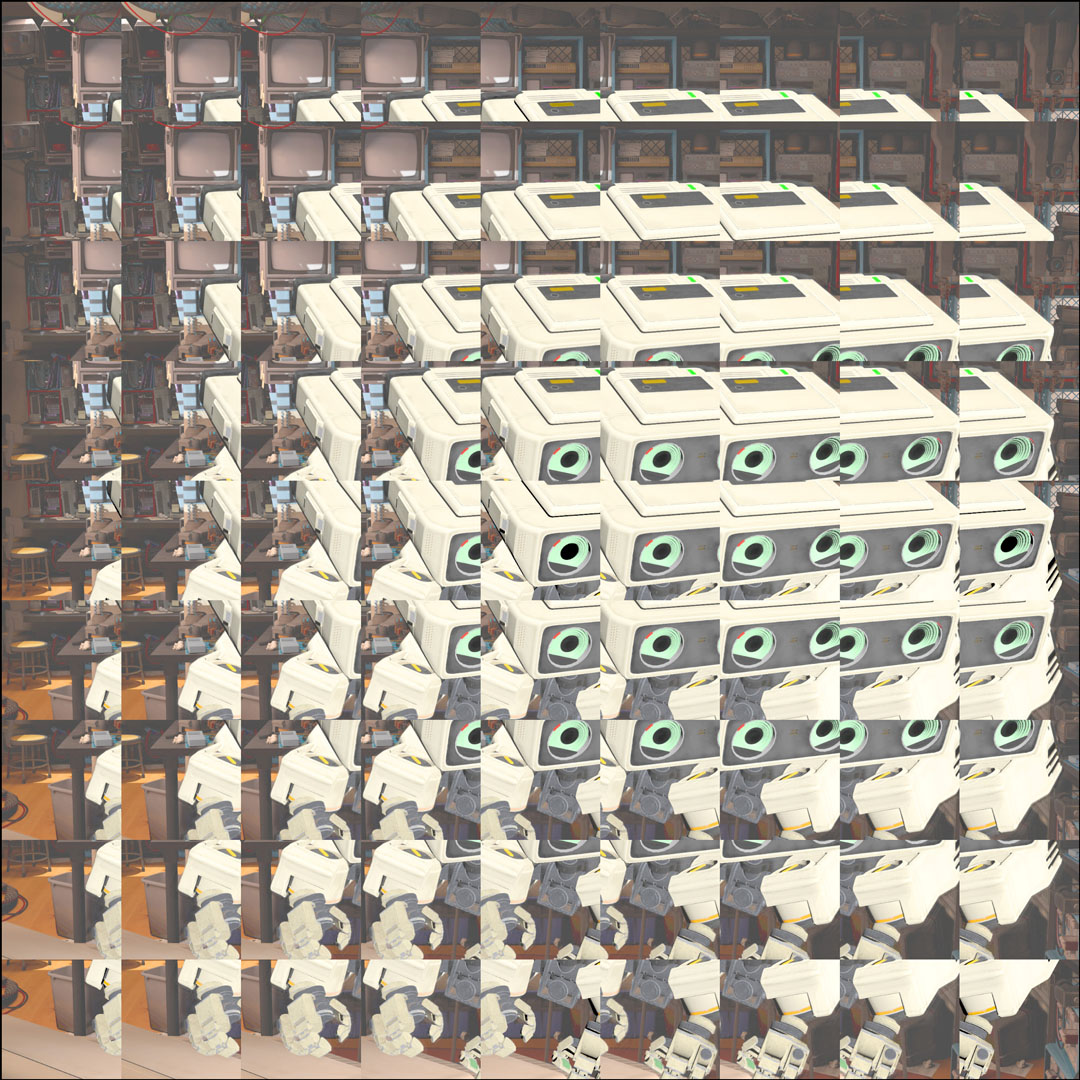

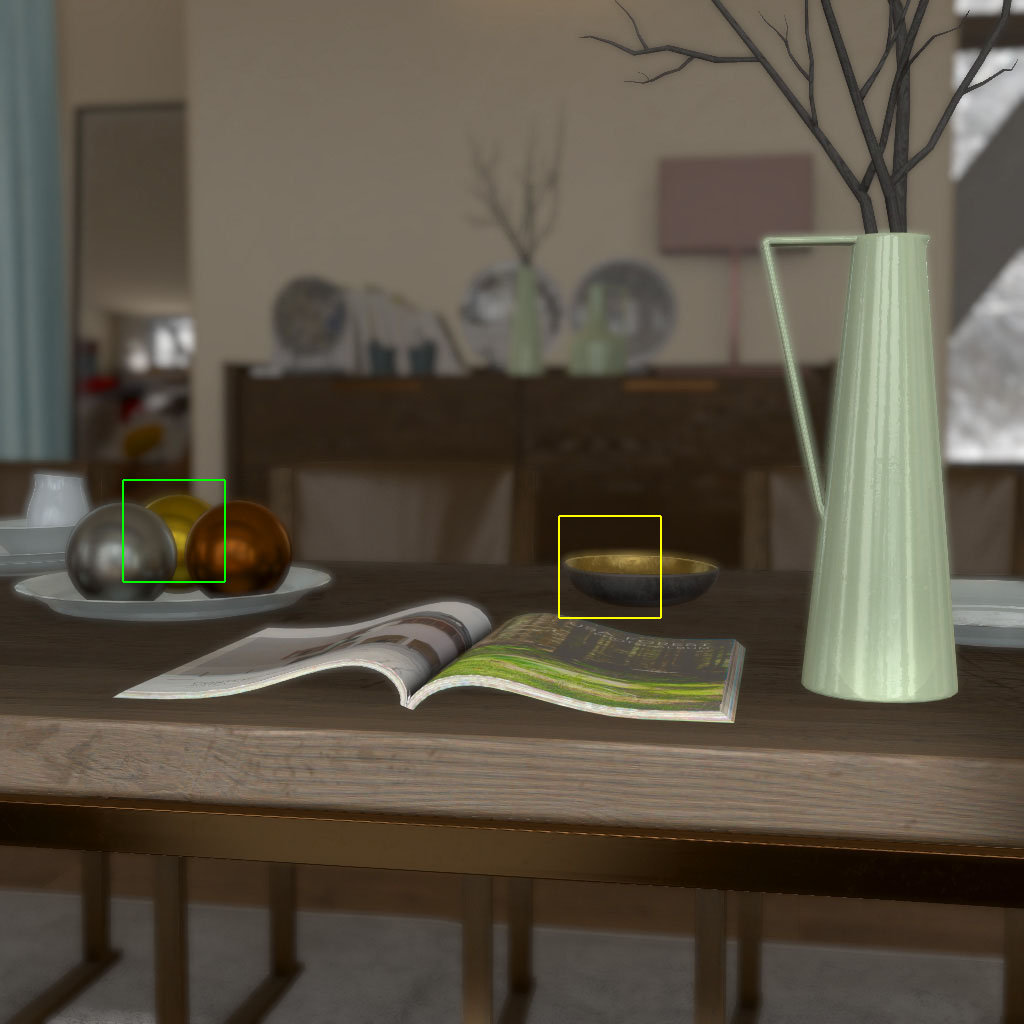

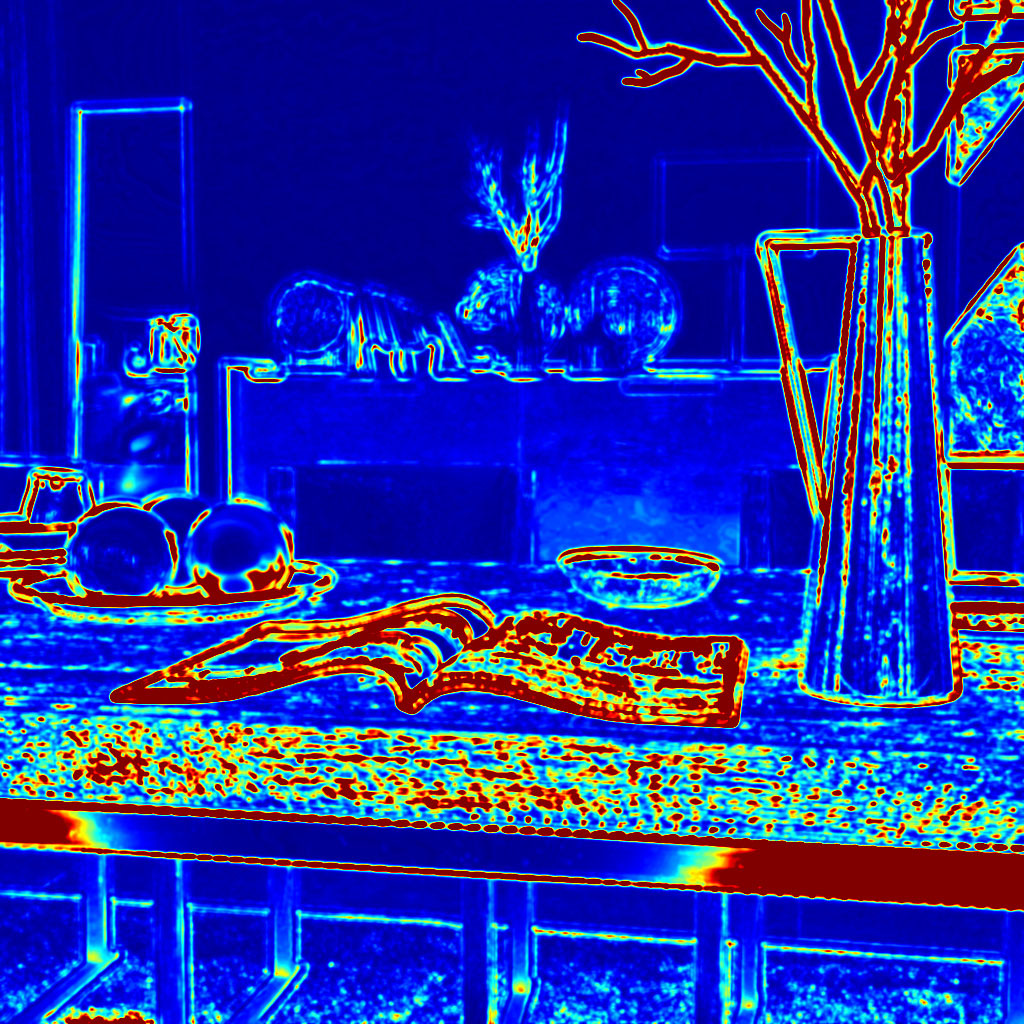

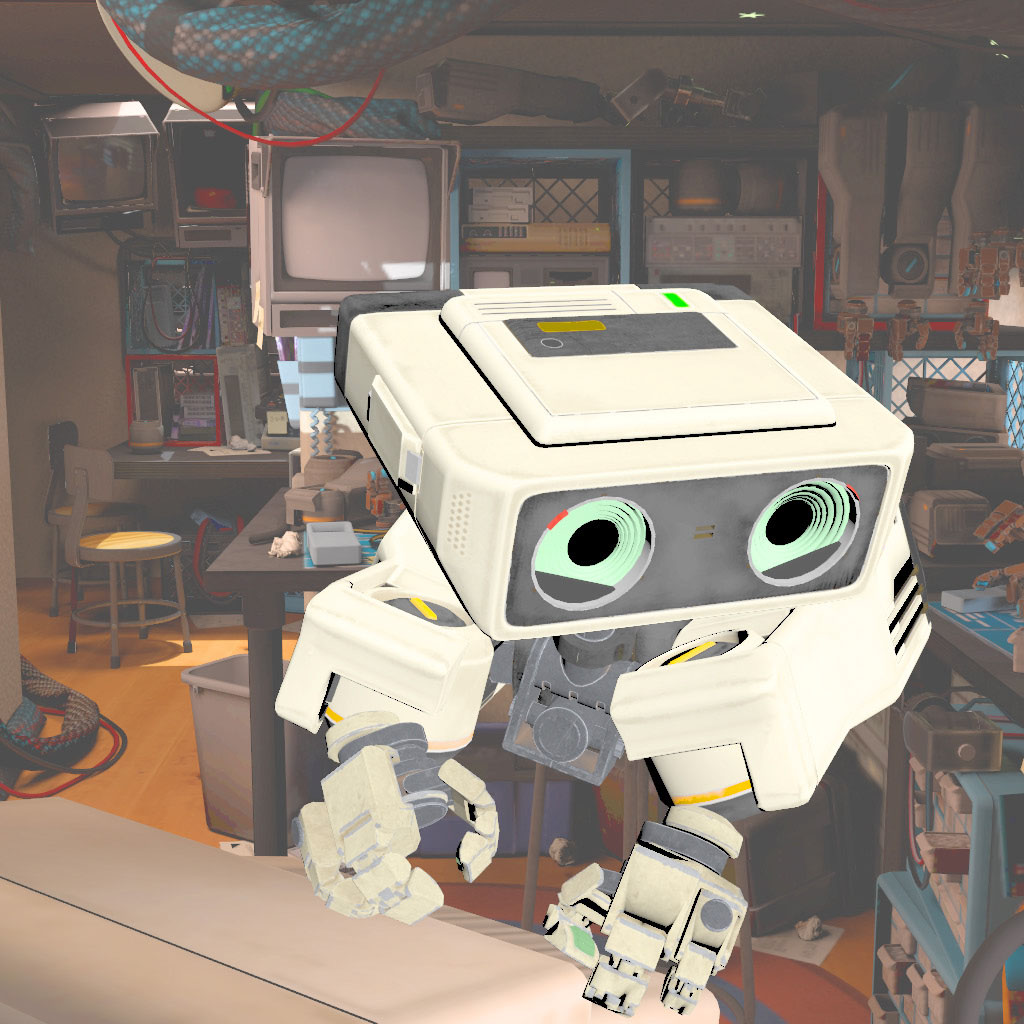

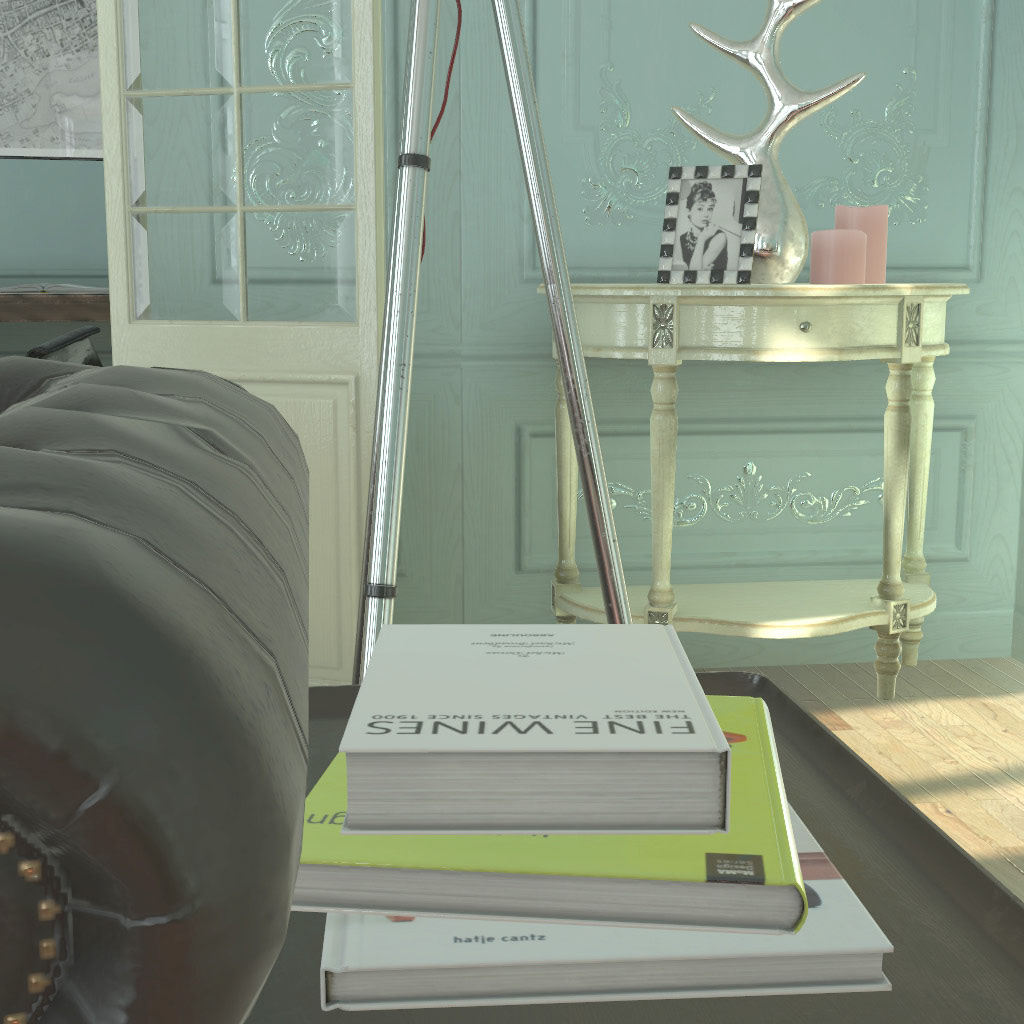

Reproducing accurate retinal defocus blur is important to correctly drive accommodation and address vergence-accommodation conflict in head-mounted displays (HMDs). Numerous accommodation-supporting HMDs have been proposed. Three architectures have received particular attention: varifocal, multifocal, and light field displays. These designs all extend depth of focus, but rely on computationally expensive rendering and optimization algorithms to reproduce accurate retinal blur (often limiting content complexity and interactive applications). To date, no unified computational framework has been proposed to support driving these emerging HMDs using commodity content. In this paper, we introduce DeepFocus, a generic, end-to-end trainable convolutional neural network designed to efficiently solve the full range of computational tasks for accommodation-supporting HMDs. This network is demonstrated to accurately synthesize defocus blur, focal stacks, multilayer decompositions, and multiview imagery using commonly available RGB-D images. Leveraging recent advances in GPU hardware and best practices for image synthesis networks, DeepFocus enables real-time, near-correct depictions of retinal blur with a broad set of accommodation-supporting HMDs.
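As a rough illustration of why a depth channel is enough to reason about retinal defocus blur, the sketch below computes a per-pixel blur size from a depth map using the standard small-angle thin-lens approximation (angular blur diameter ≈ pupil diameter × defocus in diopters). It is not the DeepFocus network and is not taken from the paper; the function name `defocus_blur_angle` and the 4 mm default pupil diameter are assumptions made for this example.

```python
import numpy as np

def defocus_blur_angle(depth_m, focus_m, pupil_diameter_m=0.004):
    """Approximate angular diameter (radians) of the retinal blur circle.

    depth_m: per-pixel scene depth in meters (e.g. the D channel of an RGB-D image).
    focus_m: distance in meters at which the eye is focused.
    pupil_diameter_m: assumed pupil diameter; 4 mm is an illustrative default.
    """
    depth_m = np.asarray(depth_m, dtype=np.float64)
    # Defocus is the difference between pixel depth and focus distance, in diopters (1/m).
    defocus_diopters = np.abs(1.0 / depth_m - 1.0 / focus_m)
    # Small-angle approximation: blur angle = aperture * dioptric defocus.
    return pupil_diameter_m * defocus_diopters

# Three pixels at 0.5 m, 1 m, and 2 m, with the eye focused at 1 m.
blur = defocus_blur_angle([0.5, 1.0, 2.0], focus_m=1.0)
```

The pixel at the focus distance gets zero blur, while nearer and farther pixels receive blur proportional to their dioptric distance from focus. A renderer or a learned model would still have to turn these blur sizes into an image while handling occlusion boundaries.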

References:

Kurt Akeley, Simon J. Watt, Ahna R. Girshick, and Martin S. Banks. 2004. A Stereo Display Prototype with Multiple Focal Distances. ACM Trans. Graph. 23, 3 (2004), 804–813.

Douglas Lanman and David Luebke. 2013. Near-Eye Light Field Displays. ACM Trans. Graph. 32, 6, Article 220 (2013), 10 pages.

Olivier Mercier, Yusufu Sulai, Kevin Mackenzie, Marina Zannoli, James Hillis, Derek Nowrouzezahrai, and Douglas Lanman. 2017. Fast Gaze-Contingent Optimal Decompositions for Multifocal Displays. ACM Trans. Graph. 36, 6 (2017), 237.

O. Nalbach, E. Arabadzhiyska, D. Mehta, H.-P. Seidel, and T. Ritschel. 2017. Deep Shading: Convolutional Neural Networks for Screen Space Shading. Comput. Graph. Forum 36, 4 (2017), 65–78.

Nvidia Corporation. 2017–2018. TensorRT. https://developer.nvidia.com/tensorrt. (2017–2018).

Unity Technologies. 2005–2018. Unity Engine. http://unity3d.com. (2005–2018).

Keyword(s):

- computational displays

- deep learning

- depth of field

- varifocal displays

- multifocal

- light fields

- accommodation