“Catch & Carry: Reusable Neural Controllers for Vision-Guided Whole-Body Tasks” by Merel, Tunyasuvunakool, Ahuja, Tassa, Hasenclever, et al. …

Conference:

Type(s):

Title:

- Catch & Carry: Reusable Neural Controllers for Vision-Guided Whole-Body Tasks

Session/Category Title: Physically-Based Character Animation

Presenter(s)/Author(s):

Abstract:

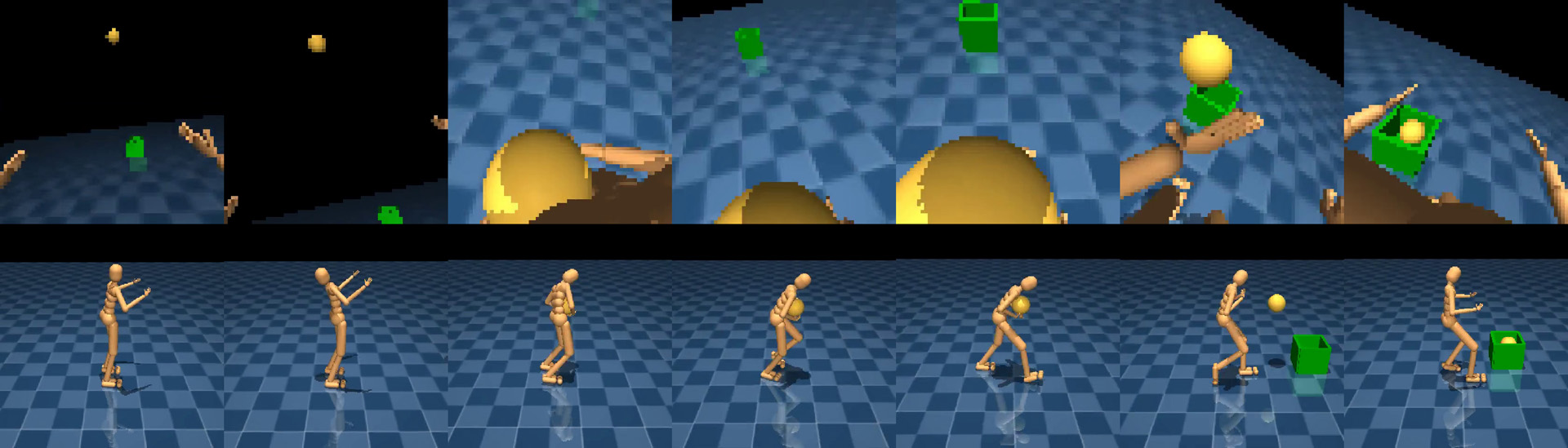

We address the longstanding challenge of producing flexible, realistic humanoid character controllers that can perform diverse whole-body tasks involving object interactions. This challenge is central to a variety of fields, from graphics and animation to robotics and motor neuroscience. Our physics-based environment uses realistic actuation and first-person perception – including touch sensors and egocentric vision – with a view to producing active-sensing behaviors (e.g., gaze direction), transferability to real robots, and comparisons to the biology. We develop an integrated neural-network-based approach consisting of a motor primitive module, human demonstrations, and an instructed reinforcement learning regime with curricula and task variations. We demonstrate the utility of our approach for several tasks, including goal-conditioned box carrying and ball catching, and we characterize its behavioral robustness. The resulting controllers can be deployed in real time on a standard PC.