“Automatic head-movement control for emotional speech” by Kawamoto, Yotsukura, Morishima and Nakamura

Abstract:

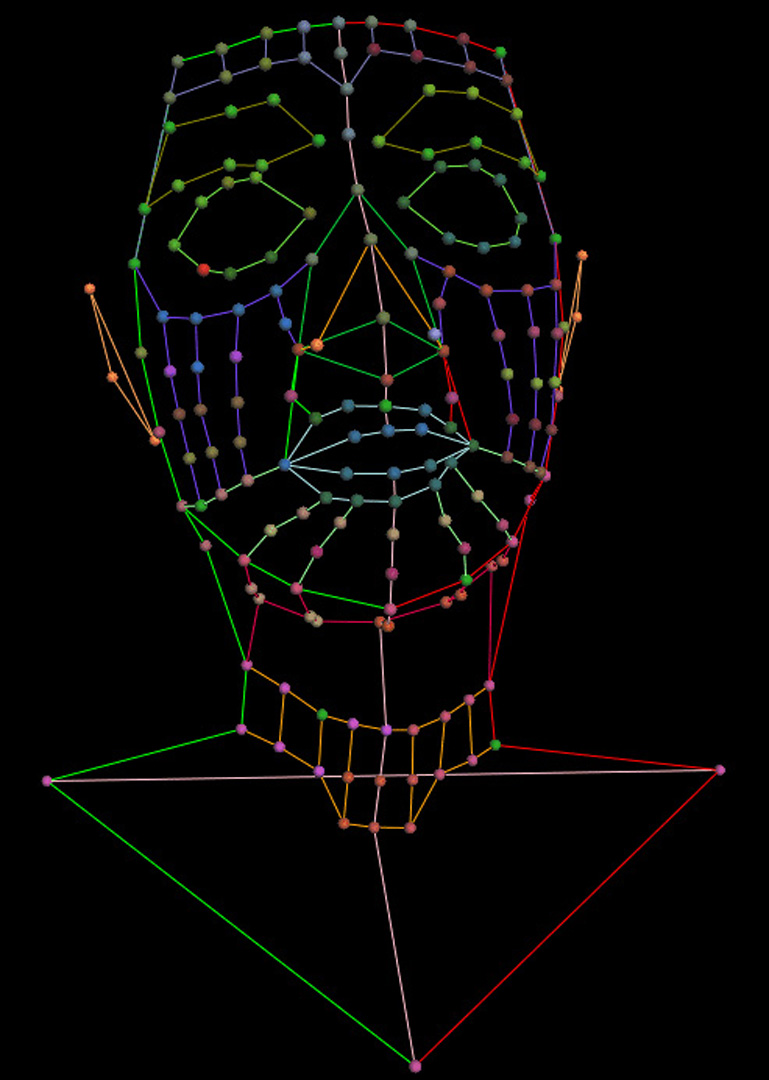

Creating speaking animation for CG characters requires synchronous control of many factors, such as lip movement, facial expression, head movement, and gestures. Lip movement helps a listener understand speech, while facial expression, head movement, and gestures help make the speaker's emotions understandable. Many studies have focused on automatic lip animation.

