“An anatomically-constrained local deformation model for monocular face capture”

Session/Category Title: CAPTURING HUMANS

Abstract:

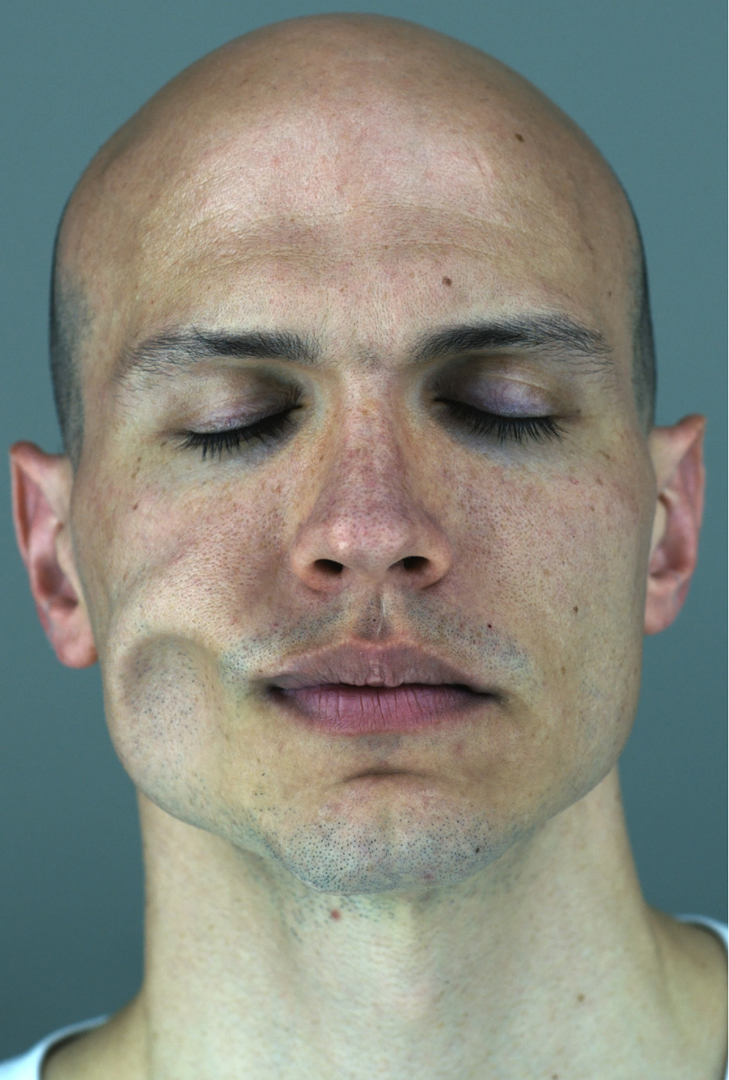

We present a new anatomically-constrained local face model and fitting approach for tracking 3D faces from 2D motion data at very high quality. In contrast to traditional global face models, often built from a large set of blendshapes, we propose a local deformation model composed of many small subspaces spatially distributed over the face. Our local model offers far more flexibility and expressiveness than global blendshape models, even with a much smaller model size. This flexibility would typically come at the cost of reduced robustness, in particular during the under-constrained task of monocular reconstruction. However, a key contribution of this work is that we consider the face anatomy and introduce subspace skin thickness constraints into our model, which restrict the face to valid expressions and help counteract depth ambiguities in monocular tracking. Given our new model, we present a novel fitting optimization that allows 3D facial performance reconstruction from a single view at extremely high quality, far beyond previous fitting approaches. Our model is flexible and can also be applied when only sparse motion data is available, for example, with marker-based motion capture or even face posing from artistic sketches. Furthermore, by incorporating anatomical constraints, we can automatically estimate the rigid motion of the skull, obtaining a rigid stabilization of the performance for free. We demonstrate our model and single-view fitting method on a number of examples, including, for the first time, extreme local skin deformation caused by external forces such as wind, captured from a single high-speed camera.
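To make the role of the anatomical constraint concrete, the following is a minimal sketch of fitting a single local patch. It is an illustration under simplifying assumptions, not the formulation from the paper. It assumes one linear subspace for the patch, an orthographic camera that drops depth, and a skin thickness that varies linearly with the subspace coefficients. The function `fit_patch` and every parameter name are hypothetical. The toy patch contains one deformation that moves only in depth, so the 2D motion term alone cannot recover its coefficient. Adding the thickness term resolves the ambiguity.

```python
import numpy as np

def fit_patch(neutral, basis, obs_2d, bone, normal, t0, t_basis, w_anat=1.0):
    """Least-squares fit of local subspace coefficients (illustrative only).

    neutral : (V, 3) rest shape of the patch
    basis   : (K, V, 3) per-vertex displacement for each subspace direction
    obs_2d  : (V, 2) observed image positions (orthographic camera assumed)
    bone    : (3,) bone point under the patch; normal : (3,) bone normal
    t0, t_basis : rest thickness and (K,) thickness change per coefficient
    """
    K = basis.shape[0]
    # Motion term: projection keeps x and y, so only those rows are observed.
    A_motion = basis[:, :, :2].reshape(K, -1).T           # (2V, K)
    b_motion = (obs_2d - neutral[:, :2]).reshape(-1)      # (2V,)
    # Anatomy term (assumed form): the patch centroid should sit at
    # bone + thickness(c) * normal, with thickness(c) = t0 + t_basis @ c.
    C = basis.mean(axis=1).T                              # (3, K)
    A_anat = C - np.outer(normal, t_basis)                # (3, K)
    b_anat = bone + t0 * normal - neutral.mean(axis=0)    # (3,)
    s = np.sqrt(w_anat)
    A = np.vstack([A_motion, s * A_anat])
    b = np.concatenate([b_motion, s * b_anat])
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)
    return coeffs

# Synthetic patch: direction 0 shifts in x, direction 1 moves only in depth.
rng = np.random.default_rng(0)
V = 6
neutral = rng.normal(size=(V, 3))
basis = np.zeros((2, V, 3))
basis[0, :, 0] = 1.0
basis[1, :, 2] = 1.0
c_true = np.array([0.3, -0.5])
shape_true = neutral + np.tensordot(c_true, basis, axes=1)
obs_2d = shape_true[:, :2]

# Place the bone so that the assumed thickness relation holds for c_true.
normal = np.array([0.0, 0.0, 1.0])
t0, t_basis = 1.0, np.array([0.0, 0.2])
bone = shape_true.mean(axis=0) - (t0 + t_basis @ c_true) * normal

c_motion_only = fit_patch(neutral, basis, obs_2d, bone, normal, t0, t_basis, w_anat=0.0)
c_full = fit_patch(neutral, basis, obs_2d, bone, normal, t0, t_basis, w_anat=1.0)
print("motion only:", c_motion_only)
print("with anatomy:", c_full)
```

With `w_anat=0.0` the depth coefficient is unobservable, and the minimum-norm solver does not recover its true value. With the anatomy term active, both coefficients are recovered in this noise-free toy setup. A real system would couple many overlapping patches and handle perspective projection, which this sketch leaves out.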
