“VR Planet: Interface for Meta-view and Feet Interaction of VR Contents” by Fan, Chan, Kato, Minamizawa and Inami

Conference:

- SIGGRAPH 2016

Title:

- VR Planet: Interface for Meta-view and Feet Interaction of VR Contents

Description:

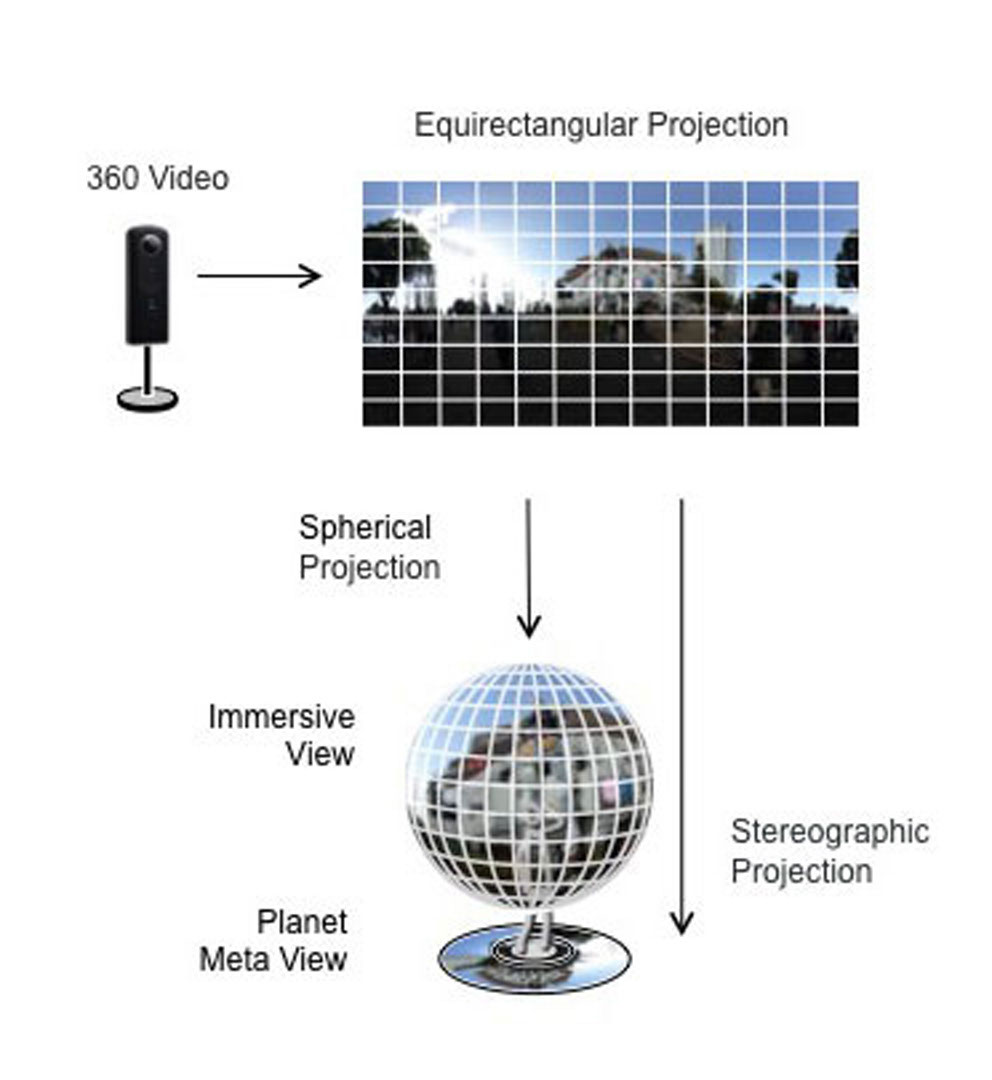

The emergence of head-mounted displays (HMDs) has enabled us to experience virtual environments immersively. At the same time, omnidirectional cameras, which capture real-life environments across a full 360 degrees as either still images or video, are also gaining attention. Using HMDs, we can view these captured omnidirectional images immersively, as though we were actually “being there”. However, as a requirement for immersion, our view of these omnidirectional images in the HMD is usually presented in first-person view and limited by our natural field of view (FOV); i.e., we see only the fraction of the environment that we are facing, while the rest of the 360-degree environment is hidden from view. This is even more problematic in telexistence situations, where the scene is live, so setting a default facing direction for the HMD is impractical. We often observe people wearing HMDs turning their heads frantically, trying to locate interesting occurrences in the omnidirectional environment they are viewing.