“Menagerie” by Fisher

Conference:

Experience Type(s):

Title:

- Menagerie

Program Title:

- Tomorrow's Realities

Entry Number: 41

Organizer(s)/Presenter(s):

Collaborator(s):

Description:

Beyond Simulation: Telepresence and Virtual Reality

Telepresence and virtual reality (VR) are terms used to describe technology that enables people to feel as if they are actually present in a different place or time. Other names include “cyberspace,” “teleexistence,” and “tele-symbiosis.” The current state of telepresence technology has evolved from a rich multidisciplinary background of developments in many different fields and for a wide spectrum of applications. In particular, the steady increase in knowledge describing how humans sense, process, and act on information about the world around them has in turn led to the development of technologies that can provide a rudimentary but adequate sense of presence in a synthetic or remotely sensed environment. As this convergence of disciplines evolves, the experiences and applications possible through telepresence will become more and more compelling.

Designing Virtual Experiences

Telepresence is a medium. Two definitions of the term “medium” are appropriate in this context: 1) a channel or system of communication, information, or entertainment, and 2) a material or technical means of artistic expression. Examples of other media that meet these definitions are painting, sculpture, film, television, and personal computers. A key feature of a medium is that it can be employed to represent a variety of different kinds of content. The television medium, for instance, supports diverse content and forms, including drama, documentary, news programming, sports, and advertising.

Today, the medium of telepresence is in its infancy. In most laboratories and institutions where people are working with telepresence, the emphasis is still on technology and engineering. A rudimentary language of telepresence is beginning to emerge, and people are beginning to envision a variety of applications. These ideas are beginning to influence the direction of technological development by suggesting new performance criteria. They are also giving birth to methodologies and tools for the design of telepresence experiences. The technologies developed so far provide the capabilities to give people a sense of presence in a wide variety of worlds, with compelling sensory richness.

But it is not the hardware that people might use that will determine whether telepresence becomes a powerful and popular medium; instead, it will be the experiences that they are able to have that will drive its acceptance and growth. The central challenge for telepresence remains: What do you do when you get there? What actually occurs in a virtual world? What kinds of actions can a user take there? How does the world respond to what someone does in it? What kinds of things might happen? And what makes and keeps a virtual world interesting? These are issues of experience design. Exploring the boundaries of these issues will launch this new medium far beyond its origins in photo-realistic computer graphics and traditional simulation.

Menagerie: A Virtual Experience by Michael Girard and Susan Amkraut

The goal of this effort is to demonstrate one of the first fully immersive virtual environment installations inhabited by virtual characters and presences especially designed to respond to and interact with its users. This experience allows a visitor to become visually and aurally immersed in a 3D computer-generated environment that is inhabited by many virtual animals. The animals enter and exit the space through portholes and doors that materialize and dematerialize around the viewer. As a user explores the virtual space, s/he will encounter several species of computer-generated animals, birds, and insects that move about independently and interactively respond to the user’s presence in various ways.

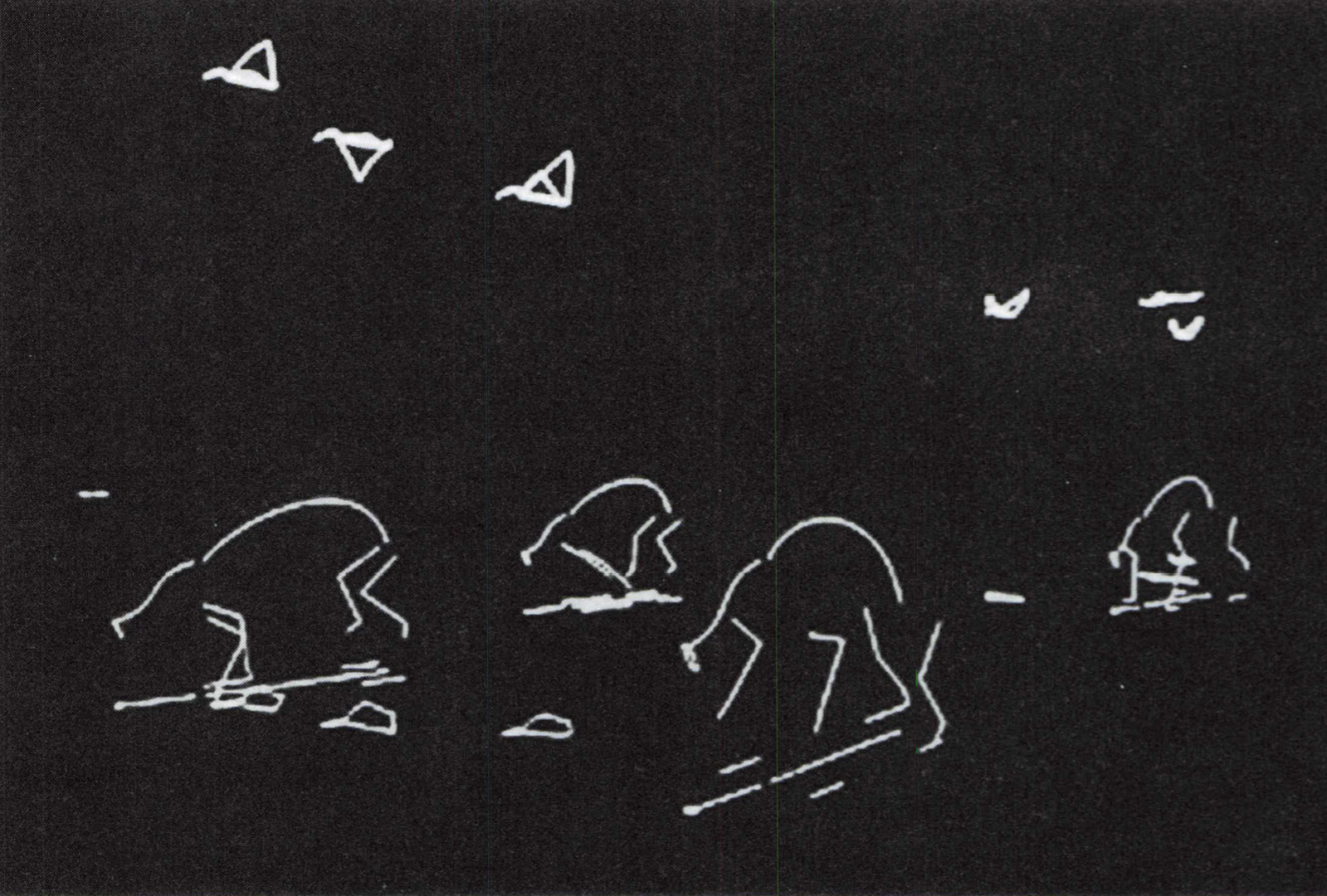

For example, if the user moves towards a group of birds gathered on the ground, they might take off and swirl around the user with realistic flocking behavior, fly off into the distance, and return to the ground in another location. Several four-legged animals will approach the user with different gaits and behavioral reactions. The visitor might also turn toward the 3D localized sound of other animals as they follow from behind.

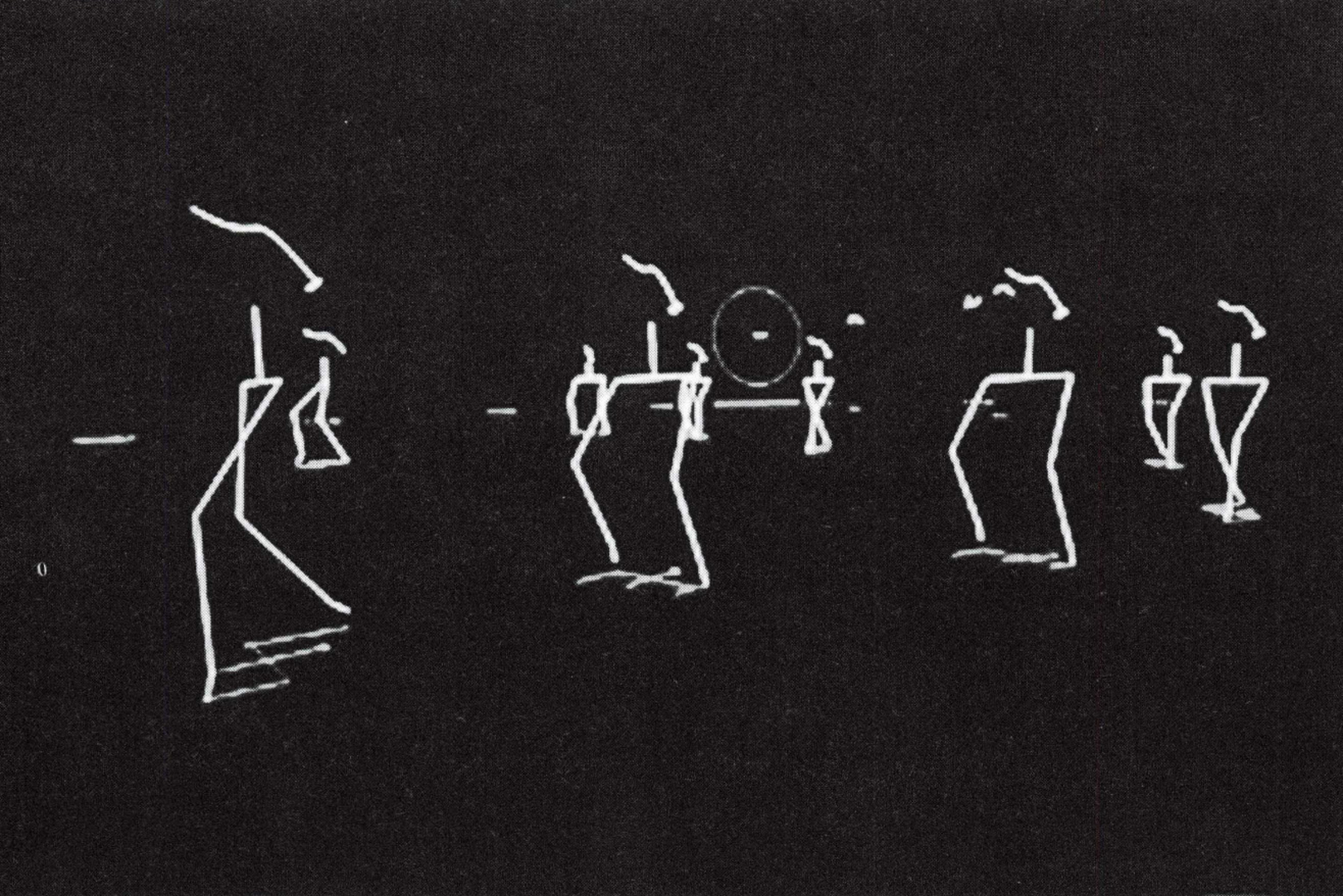

The hardware configuration of this installation includes a head-coupled, stereoscopic color viewer that is comfortably used like a pair of very wide-angle binoculars looking into the virtual space (Fakespace “BOOM-2C Viewer”). Realistic, 3D localized sound cues are linked to characters and events in the virtual space by means of special DSP hardware (Crystal River Engineering “Beachtron”). And the virtual environment and characters surrounding the user are generated by a high-performance, real-time computer graphics platform (Silicon Graphics “Reality Engine”).

Following is a description of the artists’ objectives and algorithms:

“We know how an animal moves, not just what it looks like. In our computer simulations of ‘virtual’ animals, the geometric representations are deliberately designed to be simple in order to emphasize the motion of the animals, rather than the details of their appearance. For us, the essential expression is in the abstraction of the motion and what it suggests to the imagination of the viewer.

“The motion of the animals is modeled with computer programs that simulate the physical qualities of movement. Many of the techniques employed are inspired by the robotics field. Legged animals respond to simulated gravity as they walk and run in various gaits. They are able to spontaneously plan footholds on the ground so that they appear to be dynamically balanced. Birds and other flying creatures accelerate when flapping their wings and bank realistically into turns. Flocking and herding algorithms direct the patterns of flow for large groups of animals.

“All animals maintain a degree of autonomy as they adaptively alter their motion in response to their surroundings, avoiding collisions with both other animals and the virtual environment user. Animals may follow general goals, such as ‘walk along any path from door X to door Y’ or ‘fly toward region Z and land on any unoccupied spot on the ground.’ However, their precise movements are unpredictable since they depend on the constantly shifting circumstances of interaction between each of the animals and the user.”
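The statement above names flocking, goal following, and collision avoidance but does not give the artists' algorithms. As a rough illustration only, the sketch below uses the widely known "boids" steering rules (separation, alignment, cohesion) and adds a pull toward a goal region and a push away from the user. It is not the Menagerie simulation software: the `Boid` class, the `step` function, and every weight and radius are hypothetical choices made for this example, and the world is reduced to 2D.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def limit(vx, vy, max_len):
    """Scale the vector (vx, vy) down so its length is at most max_len."""
    length = math.hypot(vx, vy)
    if length > max_len:
        scale = max_len / length
        return vx * scale, vy * scale
    return vx, vy


def step(boids, user, goal, dt=0.1, radius=5.0, min_dist=1.0,
         user_radius=3.0, max_speed=4.0):
    """Advance every boid by one time step and return the new list.

    All weights below are illustrative assumptions, not values from
    the installation.
    """
    new_boids = []
    for b in boids:
        neighbors = [o for o in boids
                     if o is not b
                     and math.hypot(o.x - b.x, o.y - b.y) < radius]
        ax = ay = 0.0
        if neighbors:
            n = len(neighbors)
            # Cohesion: steer toward the neighbors' center of mass.
            ax += 0.5 * (sum(o.x for o in neighbors) / n - b.x)
            ay += 0.5 * (sum(o.y for o in neighbors) / n - b.y)
            # Alignment: steer toward the neighbors' average velocity.
            ax += 0.5 * (sum(o.vx for o in neighbors) / n - b.vx)
            ay += 0.5 * (sum(o.vy for o in neighbors) / n - b.vy)
            # Separation: push away from neighbors closer than min_dist.
            for o in neighbors:
                d = math.hypot(o.x - b.x, o.y - b.y)
                if 0.0 < d < min_dist:
                    ax += 2.0 * (b.x - o.x) / d
                    ay += 2.0 * (b.y - o.y) / d
        # Goal seeking: a gentle pull toward the goal region.
        ax += 0.3 * (goal[0] - b.x)
        ay += 0.3 * (goal[1] - b.y)
        # User avoidance: a push that grows as the boid nears the user.
        du = math.hypot(b.x - user[0], b.y - user[1])
        if 0.0 < du < user_radius:
            push = 5.0 * (user_radius - du) / du
            ax += push * (b.x - user[0])
            ay += push * (b.y - user[1])
        # Integrate velocity first, cap the speed, then move.
        vx, vy = limit(b.vx + ax * dt, b.vy + ay * dt, max_speed)
        new_boids.append(Boid(b.x + vx * dt, b.y + vy * dt, vx, vy))
    return new_boids


if __name__ == "__main__":
    random.seed(0)
    flock = [Boid(random.uniform(-5, 5), random.uniform(-5, 5), 0.0, 0.0)
             for _ in range(20)]
    for _ in range(100):
        flock = step(flock, user=(0.0, 0.0), goal=(20.0, 0.0))
    print(flock[0])
```

Because each boid reacts only to its current neighbors, the goal, and the user's position, the exact paths change whenever the user moves, which is one simple way to obtain the goal-directed but unpredictable motion the artists describe.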

Supported by Magic Box Productions, Inc., including the contribution of “Virtual Clay” modeling software. Additional support from Fakespace, Inc. and Crystal River Engineering, Inc.

Graphics System

- Silicon Graphics, Inc.
- CRIMSON/Reality Engine system
- Silicon Graphics CRIMSON/Reality Engine system with:
- 2 Raster Managers
- Software Development Option
- 1/4″ tape drive
- 64M memory
- 380 Mbytes disk

Display System

- Fakespace, Inc.
- BOOM 2C

3D Sound System

- Crystal River Engineering, Inc.
- Beachtron cards
- 486 PC and Monitor
- Headphones
- Headphone Amp

Video Projector

- (SONY, Barco, etc.) to display the virtual world and user interaction for those waiting and/or observing.

Software

- Michael Girard/Susan Amkraut: Custom Simulation Software
- Fakespace, Inc.: BOOM Software VLIB-SGI
- Crystal River Engineering, Inc.: Beachtron Software