Jing Ting Lai: Understand_V.T.S.

Artist(s):

Title:

- Understand_V.T.S.

Exhibition:

Category:

Artist Statement:

Summary

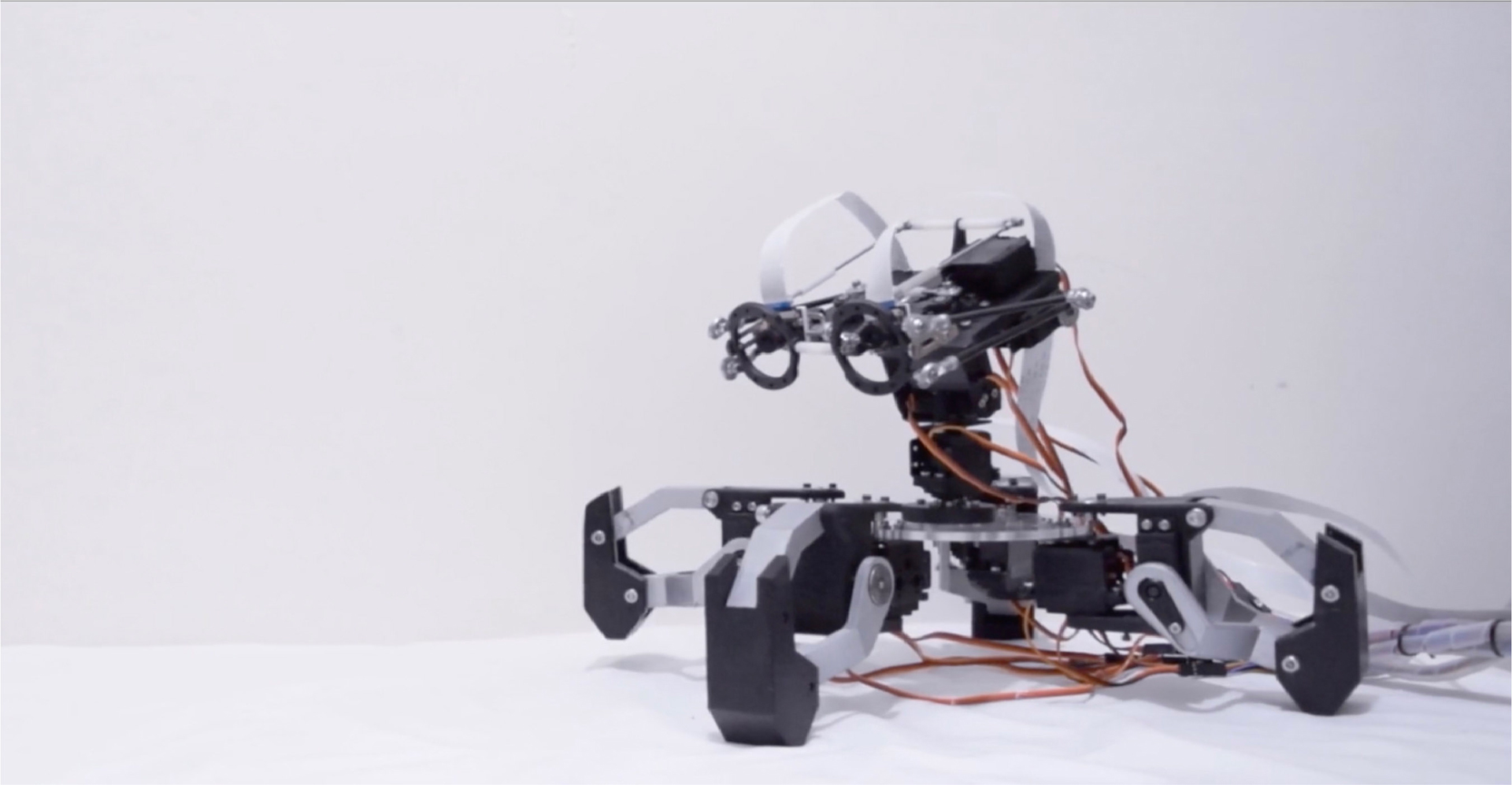

Understand_V.T.S. is an interactive sensory substitution installation that experiments with how well our brains can cooperate with artificial algorithms. You control a vision-extension robot that wanders the surroundings on your behalf, and eventually your brain learns to recognize the signals it sends back.

Abstract

Understand_V.T.S. is an interactive sensory substitution installation. It relies on the brain forming new neural connections for tactile sensing, and it serves as an approach to data physicalization.

Is there any other way for us to understand this world?

This work conducts an experiment that assesses the possibility of cooperation between natural and artificial algorithms. That is, it tests how well our brains (natural) work with AI (man-made).

Neuroplasticity allows our senses to perceive the world in various ways: we might see not with our eyes but with our skin, or listen not through our ears but through our taste buds, to name but a few.

Skin vision in general relies on the brain parsing pieces of information and forming cognition from them.

To this end, Lai Jiun Ting introduced an object recognition system, YOLOv3 (You Only Look Once). On one side, the results given by YOLOv3 are converted into Braille and delivered to the skin of the thigh; on the other, a tactile image (pixels) is mapped directly to motors on the user's back. This is how the installation's system, V.T.S.HAOS (Vision to Touch Sensory Human and Artificial Operate System), works.

In addition, with the motors stimulating the skin, the experiment described above, which tests the collaboration between brains and AI, is carried out. The system, run jointly by machine learning and the human brain, can generate a new neural network that identifies words, texts, and pixels.

Technical Information:

Understand_V.T.S. is a sensory substitution installation that offers us another way to perceive the world.

Let me briefly describe how the work operates: you control a robot that wanders around your surroundings. The signal from its left eye goes through AI object detection, and the result is translated into Braille and delivered to your leg, while the signal from its right eye is converted into a tactile image delivered to your back.

Eventually your brain will manage to comprehend the meaning of these signals and unlock a new tactile cognition.
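As a rough illustration of the right-eye path (camera image to motors on the back), here is a minimal Python sketch. It is not the installation's actual code: the function name `to_motor_grid`, the grid size, and the choice of average pooling are all assumptions made for illustration, since the motor layout is not specified here.

```python
def to_motor_grid(image, rows, cols):
    """Average-pool a grayscale image into a rows x cols grid of motor intensities.

    `image` is a list of equal-length rows holding 0-255 brightness values.
    Each output value is the mean brightness of the block of pixels that one
    (hypothetical) vibration motor would represent.
    """
    height, width = len(image), len(image[0])
    if rows > height or cols > width:
        raise ValueError("motor grid must not be finer than the image")

    grid = []
    for r in range(rows):
        # Vertical pixel range covered by motor row r.
        y0, y1 = r * height // rows, (r + 1) * height // rows
        motor_row = []
        for c in range(cols):
            # Horizontal pixel range covered by motor column c.
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            motor_row.append(sum(block) // len(block))
        grid.append(motor_row)
    return grid


# Example: a 4x4 image, dark on the left and bright on the right,
# reduced to a hypothetical 2x2 motor array.
frame = [[0, 0, 255, 255] for _ in range(4)]
print(to_motor_grid(frame, 2, 2))
```

Each value could then be used as a vibration strength (for example a PWM duty cycle), so that brighter regions of the image press harder on the corresponding patch of skin.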

To this end, I introduced an object recognition system, YOLOv3 (You Only Look Once). On one side, the results given by YOLOv3 are converted into Braille and delivered to the skin of the thigh; on the other, pixels are mapped directly to motors on the back.
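The Braille side of this mapping can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the installation's own code: it assumes the detector has already produced a lowercase label string such as "cat", uses uncontracted English Braille, and represents each cell as six on/off values (dots 1 to 6) that a hypothetical six-motor cell on the thigh could render. The names `BRAILLE_DOTS`, `label_to_cells`, and `to_unicode` are illustrative.

```python
# Dot numbers for the first ten letters; dots 1-3 run down the left
# column of a cell and dots 4-6 down the right column.
FIRST_DECADE = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4),
    "j": (2, 4, 5),
}

BRAILLE_DOTS = dict(FIRST_DECADE)
# k-t repeat a-j with dot 3 added.
for base, letter in zip("abcdefghij", "klmnopqrst"):
    BRAILLE_DOTS[letter] = FIRST_DECADE[base] + (3,)
# u, v, x, y, z repeat a-e with dots 3 and 6 added; w is irregular.
for base, letter in zip("abcde", "uvxyz"):
    BRAILLE_DOTS[letter] = FIRST_DECADE[base] + (3, 6)
BRAILLE_DOTS["w"] = (2, 4, 5, 6)


def label_to_cells(label):
    """Turn a label into a list of 6-tuples of booleans (dot 1 .. dot 6).

    Characters without an entry (spaces, digits, punctuation) become a
    blank cell in this sketch.
    """
    cells = []
    for ch in label.lower():
        dots = BRAILLE_DOTS.get(ch, ())
        cells.append(tuple(d in dots for d in range(1, 7)))
    return cells


def to_unicode(label):
    """Render the cells with Unicode Braille patterns for on-screen preview."""
    out = []
    for cell in label_to_cells(label):
        bits = sum(1 << i for i, raised in enumerate(cell) if raised)
        out.append(chr(0x2800 + bits))
    return "".join(out)


print(label_to_cells("a"))
print(to_unicode("cat"))
```

Each tuple can drive one cell of motors in sequence, letter by letter, while the Unicode preview is only a convenience for checking the mapping on screen.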

In addition, with the motors stimulating the skin, the experiment described above, which tests the collaboration between brains and AI, is carried out. The system, run jointly by machine learning and the human brain, can generate a new neural network that identifies words, texts, and pixels.

You might be wondering why I appear to be “meditating” in the video while using the device. It is because meditation has served as a way of connecting body, mind, and spirit for more than 3,000 years in the Orient.

As for the wheel spinning behind me, it symbolizes the “halo”, a symbol shared across religions that embodies the complete integrity of one’s spirit and body.

The game controller, which steers the small robot, uses meditation gestures to suggest the relationship between reality and virtual games.

It took me 3 hours to recognize the numbers 1 to 10 as well as my own appearance through this very device.

The fact that both algorithms, the artificial one performed by the device and the natural one performed by the brain, cooperated so well on me is as impressive as it is cutting-edge.

As an experiment in a new mode of art, it provides different sensory perspectives and sends us into the uncharted territory of artistic experience. Although the ways we perceive art are still somewhat limited, with a modest amount of learning they will know no bounds.

Process Information:

For the field of art, I reckon that sensory substitution could be a major breakthrough in human enhancement. With this technique, it becomes possible for us to experience any data as never before: every work of art that has ever existed, and everything on earth, can be re-perceived as long as it can be digitized. We can develop sensors that perceive what our senses cannot reach, explore and get to know the world, and even dismantle the political relationships within the sensory system. Scientifically speaking, our brains are still the most powerful processors.

I tried to introduce the results detected by AI into the human body, expanding our range of perception so that we can receive critical information in the form of Braille letters and tactile images. On top of that, the skin, the largest organ of our body, opens more gateways for information to be taken in and allows us to directly perceive its meaning, making this an intriguing and practical form of wearable AI human augmentation.

This work is just a beginning. I aim to develop multiple sensory points of view so that we can recognize anew the different possible faces of the world, savor art again from the perspectives of different sensors, and then look at the world with a critical eye. Cybernetic human augmentation lets technology prevail over our bodies on its own terms. Its prevalence reveals that humans are at risk of losing their freedom, meaning we might no longer have control over our own source of signals.

However, if the AI augmentation we attach to our body is wearable and removable rather than implanted, we can still take it off and stop the input of messages. This is the very reason why I’m promoting and developing this technology: we still have the right to say no.

Before I finished my compulsory military service, I received an invitation from the Art and Emerging Technology at Tsinghua University. But I wanted to complete the military service first and give myself an opportunity to think about my life. After my service was over, I spent two months making art and did my best to use the machine learning technology I had learned during my service, discovering my own potential and drawing inspiration from designing and making things. At that point, I completed my first personal artwork and came to understand my characteristics as an artist. Absorbed in making work wholeheartedly, I completely forgot to apply to the postgraduate school at Tsinghua University.

However, even though I didn’t make it to Tsinghua University, I was very proud that I had been working with all my heart. My hard work paid off when I received the opportunity to participate in SIGGRAPH Asia. On top of that, I took part in the follow-up artist recruitment of Taiwan’s Industrial Technology Research Institute, competing with well-known senior artists in Taiwan, and I stood out as one of the winners who earned the chance to create their own works at the institute.

Other Information:

Inspiration Behind the Project

To tell what is human from what is not is a gateway to understanding how one can become non-human, which is one way of finding out where the boundary of human beings lies. Cybernetic human augmentation is the most direct way to explore that boundary. However, it lets technology prevail over our bodies on its own terms and is very likely to rob us of our right to make our own choices.

One thing I'm dying to know is what other choices we have for evolving besides cybernetic human augmentation. For me, wearable AI human augmentation is the answer.

This interactive sensory substitution installation is the beginning of my proposing options other than the cybernetic one. It is my first interactive artwork created completely on my own, an attempt to shed the mindset I was used to when composing so that I can discover more possibilities and further open up thinking about wearable devices.

Key Takeaways for the Audience

To be honest, I hope that audiences can experience this work directly. Because of the coronavirus pandemic, the work has only been experienced by about 10 people.

After all, it is an interactive installation. My expectation is that every audience member gets to have a purposeless exploration that expands their cognition, questioning the actual appearance of the world and reflecting on their current state as they gradually come to know their life.