“Depth compositing for augmented reality”

Conference:

Type(s):

Title:

- Depth compositing for augmented reality

Presenter(s)/Author(s):

Abstract:

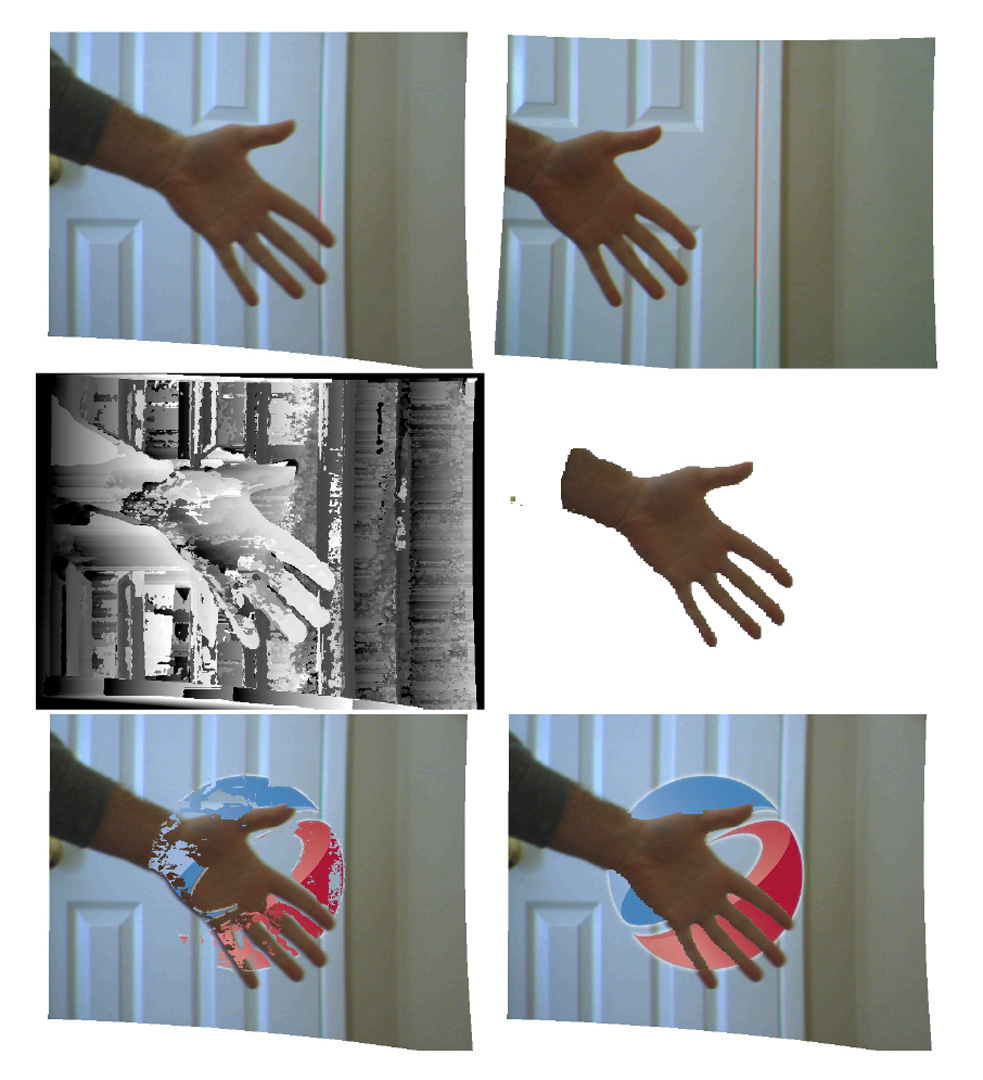

Correct handling of occlusion is a significant challenge when compositing real and virtual content, whether for augmented reality or film. For film, the problem is often solved offline by laboriously creating alpha mattes by hand. In augmented reality, compositing must happen in real time, so offline solutions are not possible. Occlusions are usually disregarded in augmented reality, so virtual objects are always rendered on top of physical objects. If a highly accurate 3D model of the scene is available, depth compositing can be performed automatically; however, such models can be difficult to create, and they limit the range of the system to the extent of the model.
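The difference between disregarding occlusion and depth compositing can be illustrated with a minimal sketch. This is not the authors' method; it only shows the generic per-pixel depth test that becomes possible once a depth value for the real scene is available at every pixel. The function names, the list-of-lists image layout, and the use of an infinite depth to mark pixels with no virtual content are all assumptions made for this illustration.

```python
INF = float("inf")  # assumed marker: no virtual object rendered at this pixel


def naive_overlay(real_rgb, virt_rgb, virt_depth):
    """Occlusion disregarded: virtual content always wins where it exists."""
    return [
        [v if d != INF else r for r, v, d in zip(r_row, v_row, d_row)]
        for r_row, v_row, d_row in zip(real_rgb, virt_rgb, virt_depth)
    ]


def depth_composite(real_rgb, real_depth, virt_rgb, virt_depth):
    """Per-pixel depth test: keep whichever surface is nearer to the camera."""
    out = []
    for y, row in enumerate(real_rgb):
        out_row = []
        for x, real_pixel in enumerate(row):
            # Smaller depth means closer to the camera.
            if virt_depth[y][x] < real_depth[y][x]:
                out_row.append(virt_rgb[y][x])
            else:
                out_row.append(real_pixel)
        out.append(out_row)
    return out


# A one-row, three-pixel toy image: "R" is a real pixel, "V" a virtual one.
real_rgb = [["R", "R", "R"]]
real_depth = [[1.0, 3.0, 2.0]]   # hypothetical depths of the real scene
virt_rgb = [["V", "V", None]]
virt_depth = [[2.0, 2.0, INF]]   # virtual object covers the first two pixels

overlay = naive_overlay(real_rgb, virt_rgb, virt_depth)
composite = depth_composite(real_rgb, real_depth, virt_rgb, virt_depth)
print(overlay)    # [['V', 'V', 'R']]
print(composite)  # [['R', 'V', 'R']]
```

In the first pixel the real surface is nearer than the virtual object, so the depth test keeps the real pixel, whereas the naive overlay draws the virtual object on top regardless. The hard part in practice is obtaining `real_depth` in real time, which is what the abstract identifies as the difficulty.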
