“Video retrieval based on user-specified deformation” by Kawate, Okabe, Onai, Hirano and Sanjo

Title:

- Video retrieval based on user-specified deformation

Abstract:

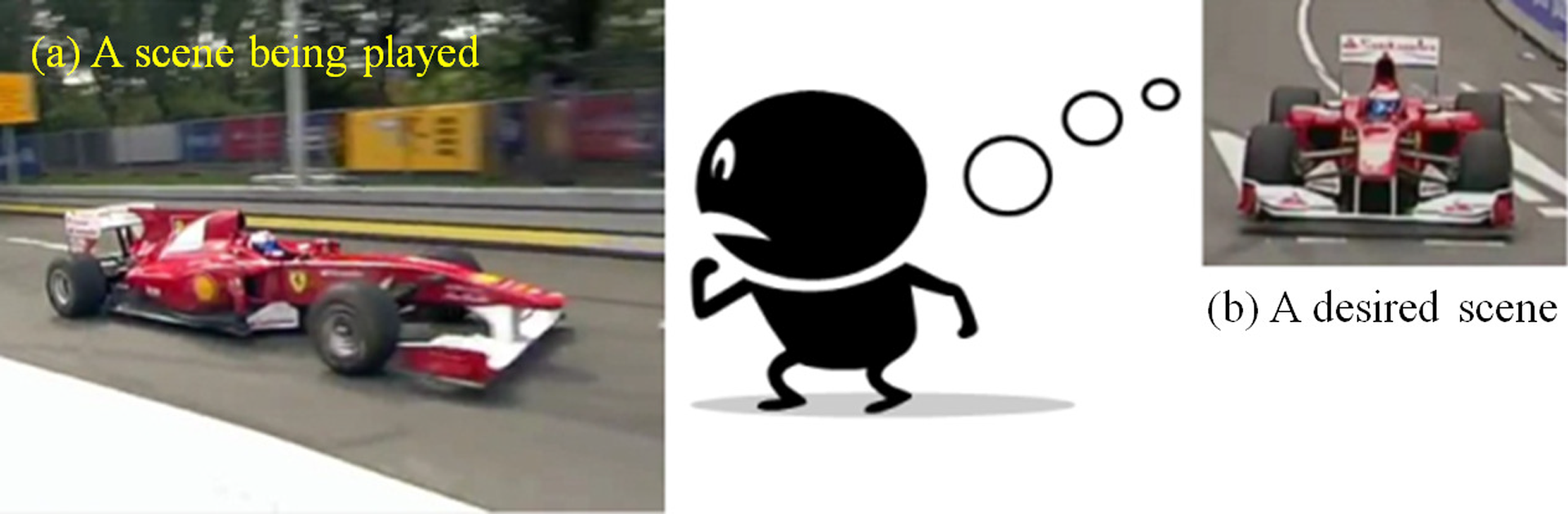

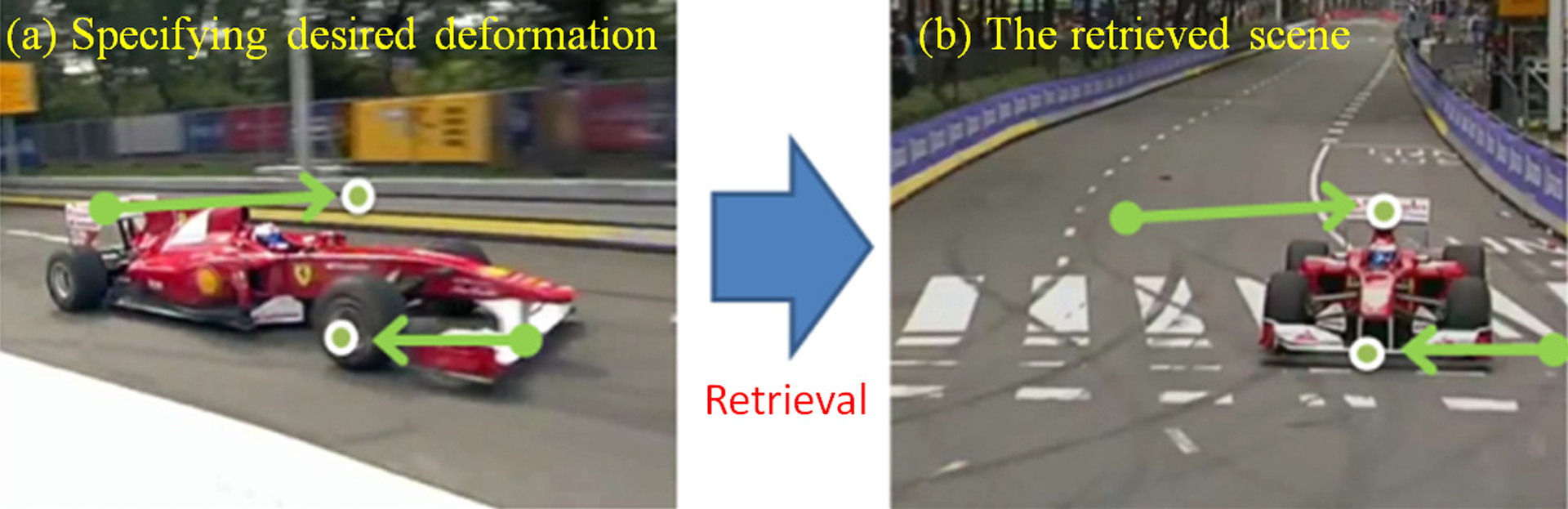

Suppose we are watching the scene in Fig. 1-a, in which a red racing car moves from left to right, and we would like to watch another scene, such as Fig. 1-b, in which a similar car drives toward us. To find such a scene, we usually press the fast-forward and rewind buttons or drag the play bar. If the scene is not in the video we are currently watching, we have to turn to a video sharing service such as YouTube and search further. Both of these are tedious tasks. Goldman et al. [2008] propose a method for navigating within a single video, but we want to find a scene not only within the current video but also in other videos.

References:

1. D. B. Goldman, C. Gonterman, B. Curless, D. Salesin, and S. M. Seitz. 2008. Video object annotation, navigation, and composition. In Proceedings of the ACM Symposium on User Interface Software and Technology (UIST), 3–12.

2. P. Sand and S. Teller. 2008. Particle Video: Long-range motion estimation using point trajectories. International Journal of Computer Vision 80, 1, 72–91.