“Tangible and modular input device for character articulation” by Jacobson, Panozzo, Glauser, Pradalier, Hilliges, et al. …

Title:

- Tangible and modular input device for character articulation

Session/Category Title: Animating Characters

Abstract:

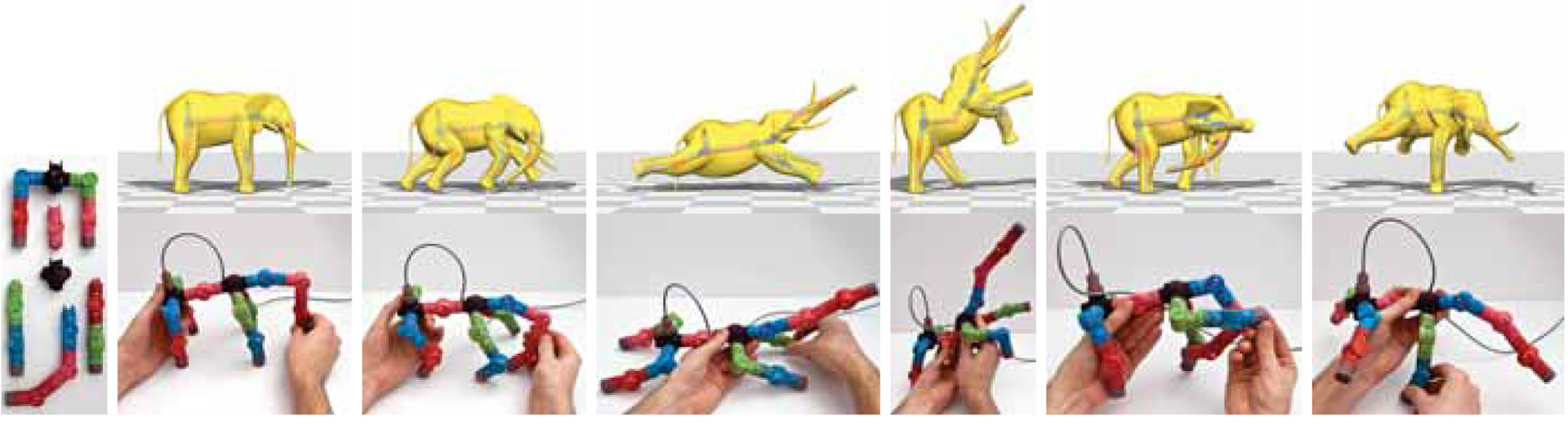

Articulation of 3D characters requires control over many degrees of freedom: a difficult task with standard 2D interfaces. We present a tangible input device composed of interchangeable, hot-pluggable parts. Embedded sensors measure the device’s pose at rates suitable for real-time editing and animation. Splitter parts allow branching to accommodate any skeletal tree. During assembly, the device recognizes topological changes as individual parts or pre-assembled subtrees are plugged and unplugged. A novel semi-automatic registration approach helps the user quickly map the device’s degrees of freedom to a virtual skeleton inside the character. User studies report favorable comparisons to mouse and keyboard interfaces for the tasks of target acquisition and pose replication. Our device provides input for character rigging and automatic weight computation, direct skeletal deformation, interaction with physical simulations, and handle-based variational geometric modeling.
