“Stereoscopic Architectural Image Inpainting” by Lu, Chu and Lin

Conference:

Type(s):

Entry Number: 96

Title:

- Stereoscopic Architectural Image Inpainting

Presenter(s)/Author(s):

Abstract:

Example-based image inpainting is widely used to fill in the holes left by removing foreground objects from an image, but artefacts usually appear during the inpainting process. Criminisi et al. [2003] determined the patch filling order along the boundary of the missing region by measuring the surrounding known pixels and the isophote strength. Wang et al. [2008] used stereo images with corresponding disparity maps as input. They took advantage of the two-view and disparity information to produce more plausible results than single-view methods. Darabi et al. [2012] enriched the patch search space with additional geometric and photometric transformations.
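To make the filling-order idea of Criminisi et al. [2003] concrete, the following is a minimal sketch of a patch priority computed as the product of a confidence term (the share of known pixels in the patch) and a data term (the strength of the isophote hitting the fill front). It is a simplified illustration, not the implementation used in this work: the function names, the `half` patch radius, the 4-neighbourhood fill front and the handling of image borders are all assumptions.

```python
import numpy as np


def neighbours_any(flags):
    """True where at least one 4-neighbour is True (outside the image counts as False)."""
    p = np.pad(flags, 1, constant_values=False)
    return p[:-2, 1:-1] | p[2:, 1:-1] | p[1:-1, :-2] | p[1:-1, 2:]


def patch_priorities(gray, hole, confidence=None, half=4, alpha=255.0):
    """Return (C, D, P) on the fill front, with P = C * D.

    gray: 2-D intensity image; hole: boolean mask, True where pixels are missing.
    All parameter names here are hypothetical choices for this sketch.
    """
    gray = np.asarray(gray, dtype=float)
    hole = np.asarray(hole, dtype=bool)
    if confidence is None:
        confidence = (~hole).astype(float)  # known pixels start at confidence 1

    # Fill front: missing pixels that touch at least one known pixel.
    front = hole & neighbours_any(~hole)

    # Image gradient, trusted only where the pixel and its 4-neighbours are known.
    gy, gx = np.gradient(gray)
    valid = ~hole & ~neighbours_any(hole)
    mag = np.where(valid, np.hypot(gx, gy), -1.0)

    # Unit normal of the fill front, taken from the gradient of the mask.
    ny, nx = np.gradient(hole.astype(float))
    norm = np.hypot(nx, ny)
    norm[norm == 0] = 1.0  # leaves zero-length normals at zero
    ny, nx = ny / norm, nx / norm

    C = np.zeros_like(gray)
    D = np.zeros_like(gray)
    h, w = gray.shape
    for y, x in zip(*np.nonzero(front)):
        y0, y1 = max(y - half, 0), min(y + half + 1, h)
        x0, x1 = max(x - half, 0), min(x + half + 1, w)
        known = ~hole[y0:y1, x0:x1]
        # Confidence term: summed confidence of known pixels over the patch area.
        C[y, x] = confidence[y0:y1, x0:x1][known].sum() / known.size
        window = mag[y0:y1, x0:x1]
        if window.max() >= 0:
            # Strongest trusted gradient in the patch; the isophote is its 90-degree rotation.
            j, i = np.unravel_index(np.argmax(window), window.shape)
            g_y, g_x = gy[y0 + j, x0 + i], gx[y0 + j, x0 + i]
            D[y, x] = abs(-g_x * ny[y, x] + g_y * nx[y, x]) / alpha
    return C, D, C * D
```

In a full inpainting loop, the front pixel with the largest priority would be filled first, and the confidence map would be updated before the priorities are recomputed. Patches whose isophote runs parallel to the front receive a data term of zero in this sketch.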

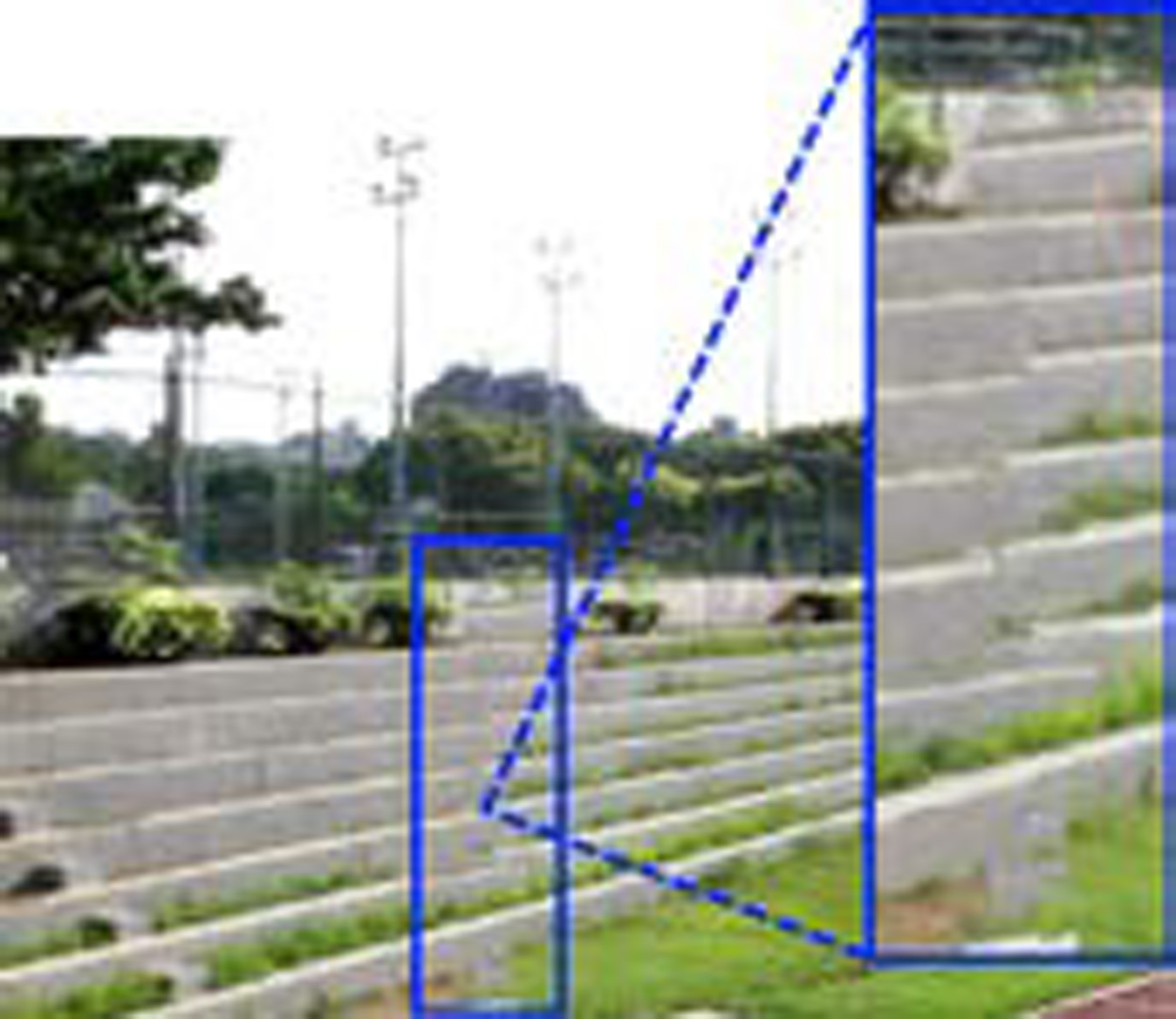

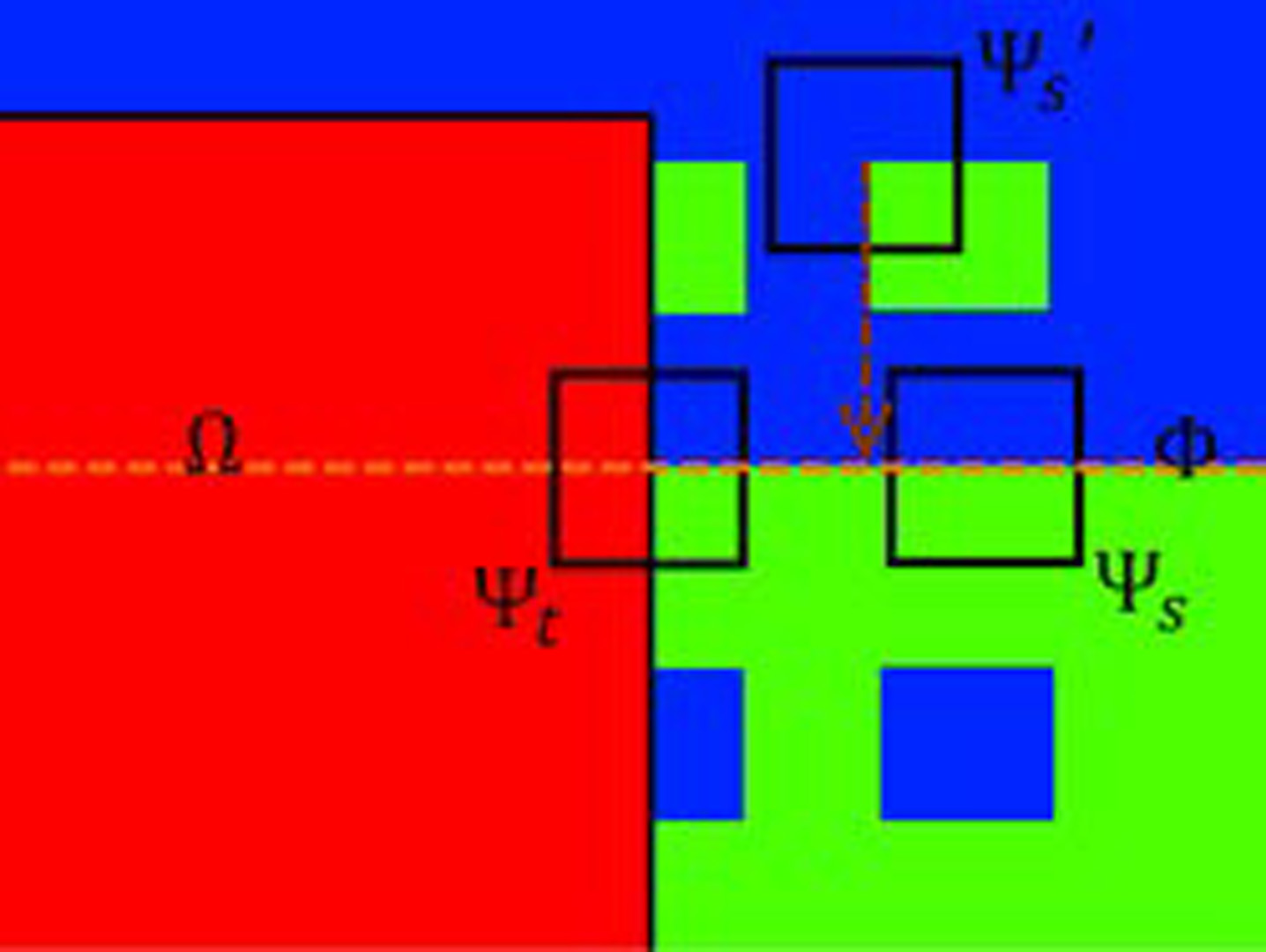

We present an automatic image completion method for stereoscopic architectural images. We take advantage of two-view information to reduce obstacle pixels and preserve parallax consistency. The vanishing point and lines in the scene are used for perspective correction. The obstacles in the corrected scenes are then inpainted by the proposed structure-enhanced patch searching. The experiments show that our method can reduce artefacts for architectural scenes.
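The abstract does not state how the vanishing point is estimated. As a generic illustration only, a vanishing point can be located as the intersection of two image lines that are parallel in the scene, using homogeneous coordinates. The function names and the two-segment input below are assumptions made for this sketch; a practical system would typically fit the point to many detected line segments.

```python
import numpy as np


def line_through(p, q):
    """Homogeneous line through two image points given as (x, y)."""
    return np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])


def vanishing_point(segment_a, segment_b):
    """Intersection of the lines carrying two segments, or None if they are parallel in the image."""
    v = np.cross(line_through(*segment_a), line_through(*segment_b))
    if abs(v[2]) < 1e-12:
        return None  # the lines meet at infinity
    return v[:2] / v[2]
```

Each segment is passed as a pair of endpoints, for example `vanishing_point(((0, 0), (1, 1)), ((0, 4), (1, 3)))`.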

References:

- Criminisi, A., Perez, P., and Toyama, K. 2003. Object removal by exemplar-based inpainting. In Proc. IEEE Conf. on Computer Vision and Pattern Recognition, vol. 2, II-721–II-728.

- Darabi, S., Shechtman, E., Barnes, C., Goldman, D. B., and Sen, P. 2012. Image Melding: Combining inconsistent images using patch-based synthesis. ACM Trans. Graphics 31, 4, 82:1–82:10.

- Wang, L., Jin, H., Yang, R., and Gong, M. 2008. Stereoscopic inpainting: Joint color and depth completion from stereo images. In Proc. IEEE Conf. on Computer Vision and Pattern Recognition, 1–8.

Acknowledgements:

This work was partially supported by the National Science Council, Taiwan, under grant nos. 102-2221-E-009-124 and 103-2218-E-009-009.