“Speech-Driven Realtime Lip-Synch Animation with Viseme-Dependent Filters” by Kawamoto

Conference:

Type(s):

Title:

- Speech-Driven Realtime Lip-Synch Animation with Viseme-Dependent Filters

Presenter(s)/Author(s):

Entry Number:

- 96

Abstract:

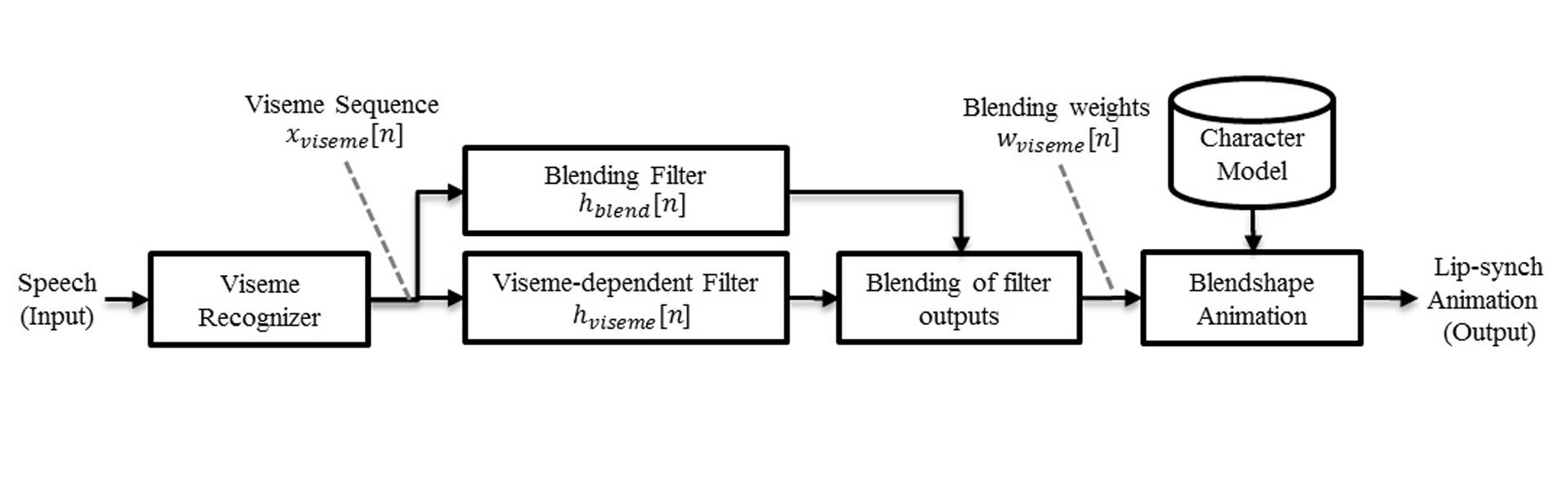

This paper presents a speech-driven real-time lip-synching technique that applies customizable viseme-dependent filters to blending weight sequences for blendshapes, a linear shape interpolation model. Lip-synching is one of the fundamental components of facial animation: mouth movement is synchronized with the speech when a character utters a word or phrase. Speech-driven real-time lip-synch animation is especially useful for supporting speech communication. Many researchers have proposed speech-driven lip-synch animation techniques (e.g. [Morishima 1998]), most of which construct a direct mapping between speech and mouth shape. These direct-mapping approaches can achieve lip-synching with a small delay. However, the result is sometimes unnatural because the mouth movement of the speaker does not match that of the pre-designed character. In such cases, the dynamic properties of mouth movement should be designed for the pre-designed character. To address this, we customize mouth movement with viseme-dependent filters designed for each mouth shape of a given character. A viseme is a basic unit of mouth shape that is classified visually and mapped to one or more phonemes.
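The abstract does not specify the form of the filters, so the following is only a minimal sketch of the general idea, under stated assumptions: blendshape weights arrive one frame at a time from some speech front end, each viseme channel is smoothed by its own causal one-pole low-pass filter, and the filtered weights drive a linear blendshape model. The names `blend` and `VisemeFilterBank`, the per-viseme coefficient `alpha`, and the choice of a one-pole filter are all hypothetical illustrations, not the authors' design.

```python
from typing import Dict, List, Sequence


def blend(base: Sequence[float],
          targets: Dict[str, Sequence[float]],
          weights: Dict[str, float]) -> List[float]:
    """Linear blendshape model: base + sum_i w_i * (target_i - base)."""
    out = list(base)
    for name, w in weights.items():
        target = targets[name]
        for k in range(len(out)):
            out[k] += w * (target[k] - base[k])
    return out


class VisemeFilterBank:
    """One causal one-pole low-pass filter per viseme.

    Assumption: the filter type and the `alpha` values are illustrative
    placeholders; the abstract does not describe the actual filters.
    """

    def __init__(self, alphas: Dict[str, float]) -> None:
        self.alphas = dict(alphas)                   # per-viseme smoothing factor in (0, 1]
        self.state = {name: 0.0 for name in alphas}  # current filtered weight per viseme

    def step(self, raw: Dict[str, float]) -> Dict[str, float]:
        """Filter one frame of raw weights; visemes missing from `raw` count as 0."""
        for name, alpha in self.alphas.items():
            x = raw.get(name, 0.0)
            self.state[name] += alpha * (x - self.state[name])
        return dict(self.state)


# Toy usage: a two-coordinate "mesh" with two viseme targets.
base = [0.0, 0.0]
targets = {"a": [0.0, 1.0], "m": [1.0, 0.0]}
bank = VisemeFilterBank({"a": 0.5, "m": 1.0})  # "a" moves slowly, "m" responds at once

raw_frames = [{"a": 1.0}, {"a": 1.0}, {"m": 1.0}]
shapes = [blend(base, targets, bank.step(frame)) for frame in raw_frames]
```

Because each filter is causal and keeps only one value of state per viseme, the per-frame cost is constant, which is compatible with the small-delay requirement the abstract emphasizes. Giving each viseme its own coefficient is what lets the dynamics be tuned per mouth shape for a particular character.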

References:

1. LEE, A., AND KAWAHARA, T. 2009. Recent development of open-source speech recognition engine Julius. In APSIPA ASC.

2. MORISHIMA, S. 1998. Real-time talking head driven by voice and its application to communication and entertainment. In Proc. AVSP.

3. YOTSUKURA, T., MORISHIMA, S., AND NAKAMURA, S. 2003. Model-based talking face synthesis for anthropomorphic spoken dialog agent system. In Proc. 11th ACM MM, 351–354.

Acknowledgements:

This study was supported in part by JSPS KAKENHI Grant Number 24700191.