“Realtime style transfer for unlabeled heterogeneous human motion” by Xia, Wang, Chai and Hodgins

Title:

- Realtime style transfer for unlabeled heterogeneous human motion

Presenter(s)/Author(s):

- Xia, Wang, Chai and Hodgins

Abstract:

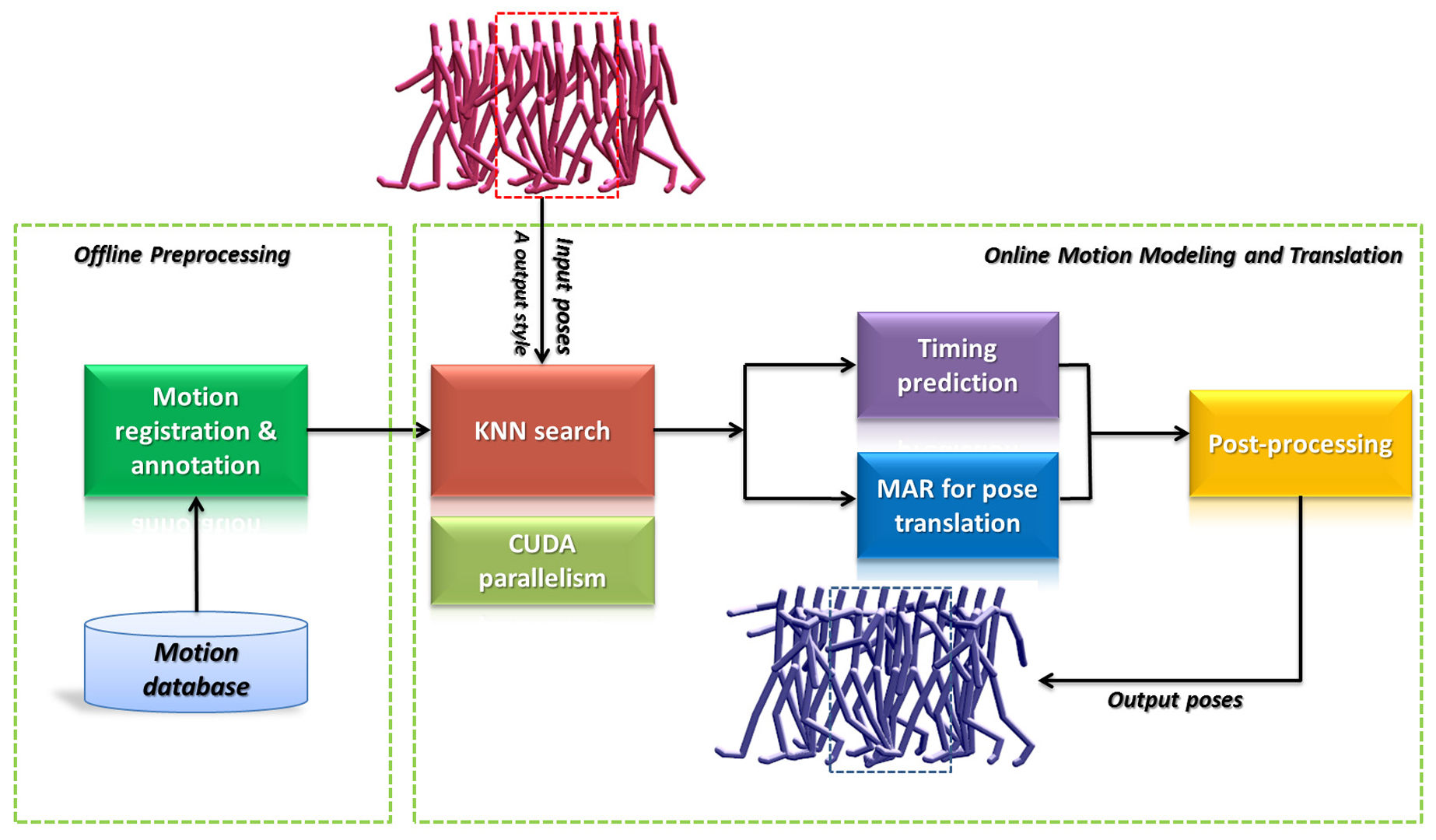

This paper presents a novel solution for realtime generation of stylistic human motion that automatically transforms unlabeled, heterogeneous motion data into new styles. The key idea of our approach is an online learning algorithm that automatically constructs a series of local mixtures of autoregressive models (MAR) to capture the complex relationships between styles of motion. We construct local MAR models on the fly by searching for the closest examples of each input pose in the database. Once the model parameters are estimated from the training data, the model adapts the current pose with simple linear transformations. In addition, we introduce an efficient local regression model to predict the timings of synthesized poses in the output style. We demonstrate the power of our approach by transferring stylistic human motion for a wide variety of actions, including walking, running, punching, kicking, jumping and transitions between those behaviors. Our method achieves superior performance in a comparison against alternative methods. We have also performed experiments to evaluate the generalization ability of our data-driven model as well as the key components of our system.
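To make the "local model built on the fly" idea concrete, here is a minimal sketch and not the authors' implementation. It assumes a hypothetical database of paired poses (`src_poses` in the input style, `dst_poses` in the output style), finds the `k` closest examples to the incoming pose, fits one affine map to those neighbors by ridge-regularized least squares, and applies that map to the pose. It deliberately simplifies the method described above: it uses a single linear regressor rather than a mixture, it ignores the autoregressive dependence on previously synthesized poses, and it does not model timing. All function names, the pose representation (plain lists of floats), and the parameters `k` and `ridge` are assumptions made for illustration.

```python
import math


def nearest_indices(query, poses, k):
    """Indices of the k database poses closest to `query` (Euclidean distance)."""
    order = sorted(range(len(poses)), key=lambda i: math.dist(query, poses[i]))
    return order[:k]


def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_local_affine(xs, ys, ridge=1e-6):
    """Fit y ~ A x + b to the neighbor pairs; returns one weight row per output dim.

    Each row holds the coefficients for the input dimensions followed by the bias.
    The small ridge term keeps the normal equations well conditioned.
    """
    feats = [list(x) + [1.0] for x in xs]  # trailing 1.0 carries the bias
    d = len(feats[0])
    gram = [[sum(f[i] * f[j] for f in feats) + (ridge if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rows = []
    for out in range(len(ys[0])):
        rhs = [sum(f[i] * y[out] for f, y in zip(feats, ys)) for i in range(d)]
        rows.append(solve(gram, rhs))
    return rows


def transfer_pose(query, src_poses, dst_poses, k=4):
    """Map one input pose to the output style with a model fitted on its neighbors."""
    idx = nearest_indices(query, src_poses, k)
    rows = fit_local_affine([src_poses[i] for i in idx],
                            [dst_poses[i] for i in idx])
    feat = list(query) + [1.0]
    return [sum(w * f for w, f in zip(row, feat)) for row in rows]


if __name__ == "__main__":
    # Toy 2-D "poses"; the target style is an affine function of the source.
    src = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [5.0, 5.0]]
    dst = [[2.0 * x + 1.0, -y + 0.5] for x, y in src]
    print(transfer_pose([0.5, 0.5], src, dst, k=4))
```

Because the model is refitted for every incoming pose, nothing is trained ahead of time; the cost per frame is dominated by the neighbor search, which a real system would replace with a spatial index rather than the full sort used here.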
