“Realtime performance-based facial animation” by Weise, Bouaziz, Li and Pauly

Title:

- Realtime performance-based facial animation

Presenter(s)/Author(s):

- Weise, Bouaziz, Li, and Pauly

Abstract:

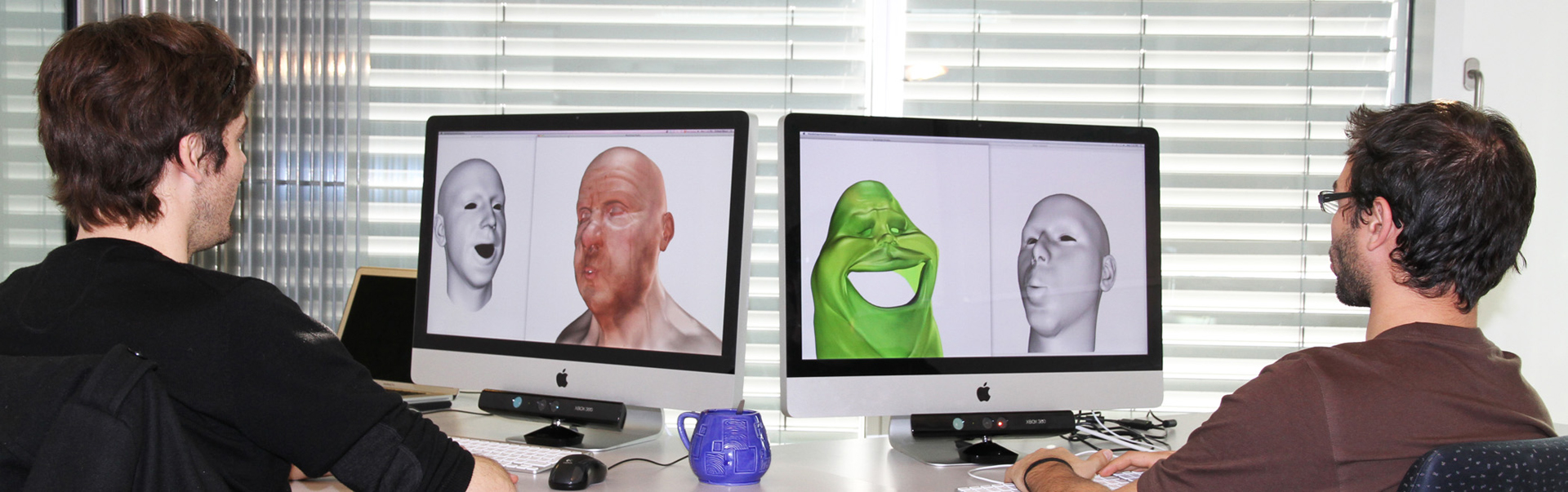

This paper presents a system for performance-based character animation that enables any user to control the facial expressions of a digital avatar in realtime. The user is recorded in a natural environment using a non-intrusive, commercially available 3D sensor. The simplicity of this acquisition device comes at the cost of high noise levels in the acquired data. To effectively map low-quality 2D images and 3D depth maps to realistic facial expressions, we introduce a novel face tracking algorithm that combines geometry and texture registration with pre-recorded animation priors in a single optimization. Formulated as a maximum a posteriori estimation in a reduced parameter space, our method implicitly exploits temporal coherence to stabilize the tracking. We demonstrate that compelling 3D facial dynamics can be reconstructed in realtime without the use of face markers, intrusive lighting, or complex scanning hardware. This makes our system easy to deploy and facilitates a range of new applications, e.g. in digital gameplay or social interactions.
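The abstract frames tracking as maximum a posteriori (MAP) estimation in a reduced parameter space with a prior that exploits temporal coherence. The Python sketch below shows only that core idea under simplifying assumptions of our own; it is not the authors' method. Assumptions: the reduced parameters are a vector of blendshape weights `x`; the observation (standing in for the paper's combined geometry and texture registration terms) is a single stacked vector `d` related linearly by a basis `B`, with Gaussian noise of scale `sigma`. The paper learns its prior from pre-recorded animations; here it is replaced by a hypothetical isotropic Gaussian of scale `tau` centered on the previous frame's weights. Weight bounds are ignored. The names `map_blendshape_weights`, `sigma`, and `tau` are illustrative only.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def cholesky_solve(a: Matrix, b: Vector) -> Vector:
    """Solve a @ x = b for a symmetric positive-definite matrix a (Cholesky)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][p] * low[j][p] for p in range(j))
            if i == j:
                low[i][i] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    # Forward substitution: low @ y = b.
    y = [0.0] * n
    for i in range(n):
        y[i] = (b[i] - sum(low[i][p] * y[p] for p in range(i))) / low[i][i]
    # Back substitution: low^T @ x = y.
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(low[p][i] * x[p] for p in range(i + 1, n))) / low[i][i]
    return x


def map_blendshape_weights(basis: Matrix, observed: Vector, prev_weights: Vector,
                           sigma: float, tau: float) -> Vector:
    """Hypothetical MAP estimate of blendshape weights for one frame.

    Minimizes |B x - d|^2 / sigma^2 + |x - x_prev|^2 / tau^2, i.e. a Gaussian
    likelihood d ~ N(B x, sigma^2 I) times a simplified Gaussian temporal prior
    x ~ N(x_prev, tau^2 I). basis is m x k (rows: stacked coordinates,
    columns: blendshapes). Not the paper's learned prior; an assumption.
    """
    m, k = len(observed), len(prev_weights)
    # Normal matrix B^T B / sigma^2 + I / tau^2 (SPD because tau > 0).
    normal = [[sum(basis[r][i] * basis[r][j] for r in range(m)) / sigma ** 2
               + (1.0 / tau ** 2 if i == j else 0.0)
               for j in range(k)]
              for i in range(k)]
    # Right-hand side B^T d / sigma^2 + x_prev / tau^2.
    rhs = [sum(basis[r][i] * observed[r] for r in range(m)) / sigma ** 2
           + prev_weights[i] / tau ** 2
           for i in range(k)]
    return cholesky_solve(normal, rhs)
```

For example, with an identity basis, observation `[1.0, 0.0]`, previous weights `[0.0, 0.0]`, and `sigma == tau == 1.0`, the estimate is approximately `[0.5, 0.0]`: halfway between the noisy measurement and the last frame. Shrinking `tau` pulls the result toward the previous frame (smoother but laggier), while growing it trusts the sensor more (responsive but noisier). This trade-off is the sense in which a MAP formulation can use temporal coherence to stabilize tracking of low-quality depth data.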