“Real-time controllable motion transition for characters” by Tang, Wang, Hu, Gong, Yi, et al. …

Title:

- Real-time controllable motion transition for characters

Abstract:

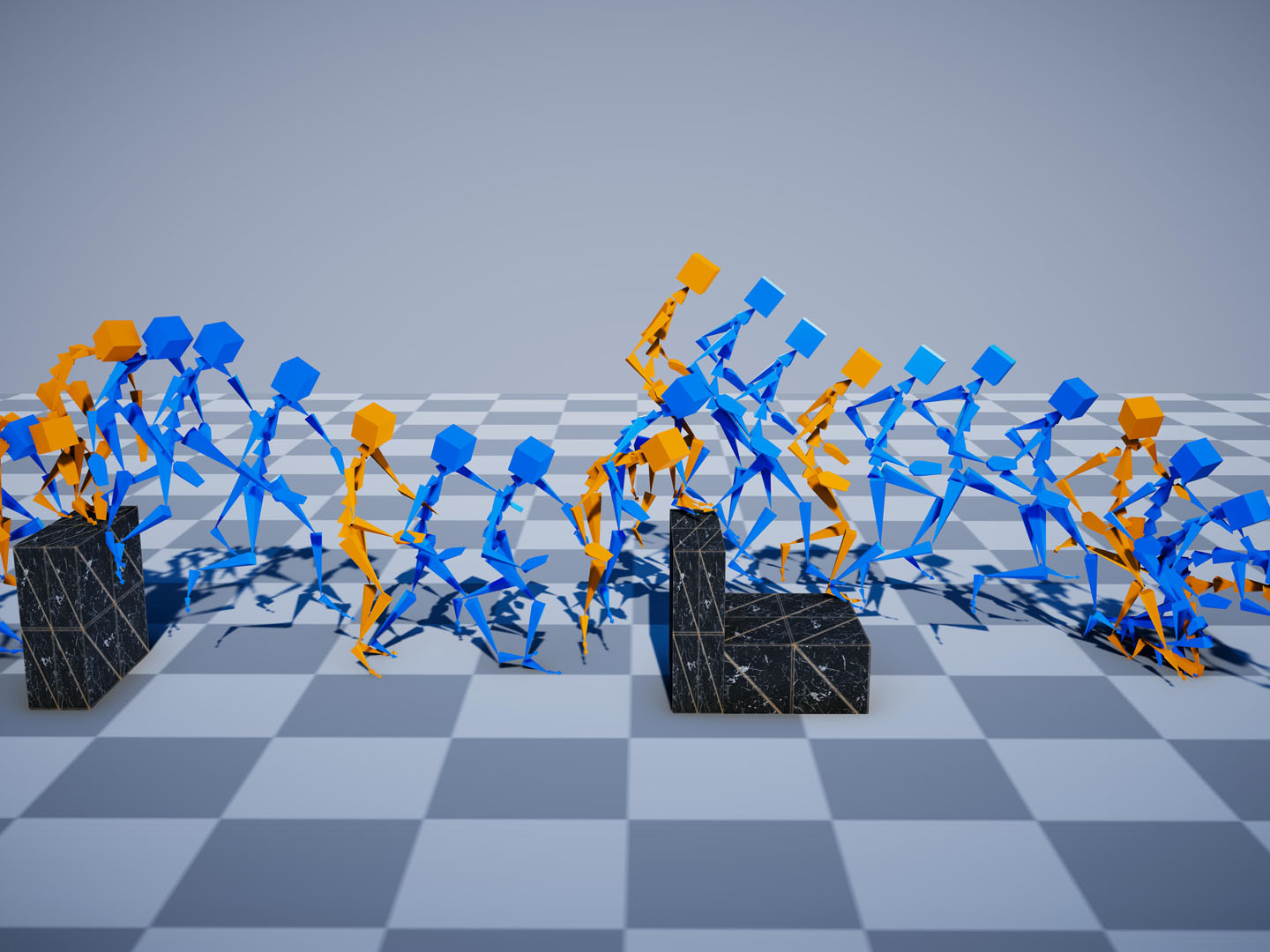

Real-time in-between motion generation is universally required in games and highly desirable in existing animation pipelines. Its core challenge is to satisfy three critical conditions simultaneously: quality, controllability, and speed. This rules out any method that needs offline computation or post-processing, or that cannot incorporate (often unpredictable) user control. To address these challenges, we propose a new real-time transition method with two key components: a motion manifold and conditional transitioning. The former learns important low-level motion features and their dynamics, while the latter synthesizes transitions conditioned on a target frame and the desired transition duration. We first learn a motion manifold that explicitly models the intrinsic transition stochasticity in human motions via a multi-modal mapping mechanism. Then, during generation, we design a transition model that is essentially a strategy for sampling from the learned manifold based on the target frame and the desired transition duration. We validate our method on different datasets in tasks where no post-processing or offline computation is allowed. Through exhaustive evaluation and comparison, we show that our method generates high-quality motions as measured by multiple metrics. Our method is also robust to a variety of target frames, including extreme cases.
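The conditioning described in the abstract (each new frame depends on the current pose, the target frame, and the remaining transition duration, with a sampled latent supplying the stochasticity) can be illustrated with a toy sketch. This is not the authors' model. The names `toy_decoder` and `generate_transition`, the `noise_scale` parameter, and the linear blend standing in for a learned decoder are all assumptions made for illustration only.

```python
import random


def toy_decoder(pose, target, frames_left, z, noise_scale=0.05):
    """Hypothetical stand-in for a learned decoder (assumption, not the paper's network).

    Moves the pose a fraction 1/frames_left of the way to the target and
    perturbs it with the latent sample z. The perturbation fades to zero on
    the last frame, so the target is reached exactly when frames_left == 1.
    """
    step = 1.0 / frames_left
    fade = (frames_left - 1) / frames_left
    return [
        (1.0 - step) * p + step * t + noise_scale * fade * zi
        for p, t, zi in zip(pose, target, z)
    ]


def generate_transition(start, target, duration, seed=None):
    """Autoregressively generate `duration` frames ending at `target`."""
    rng = random.Random(seed)
    pose = list(start)
    frames = []
    # Count down the remaining frames so every step is conditioned on both
    # the target frame and the time left to reach it.
    for frames_left in range(duration, 0, -1):
        z = [rng.gauss(0.0, 1.0) for _ in pose]  # latent sample per frame
        pose = toy_decoder(pose, target, frames_left, z)
        frames.append(pose)
    return frames


if __name__ == "__main__":
    frames = generate_transition([0.0, 0.0], [1.0, 2.0], duration=10, seed=0)
    print(len(frames), frames[-1])
```

Different seeds give different in-between frames for the same start, target, and duration, while the final frame always lands on the target. In a real system the blend would be replaced by a trained network operating on full skeletal poses.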
