“Predictive and generative neural networks for object functionality” by Hu, Yan, Zhang, Kaick, Shamir, et al. …

Conference:

Type(s):

Title:

- Predictive and generative neural networks for object functionality

Session/Category Title: Shape Analysis

Presenter(s)/Author(s):

Moderator(s):

Entry Number: 151

Abstract:

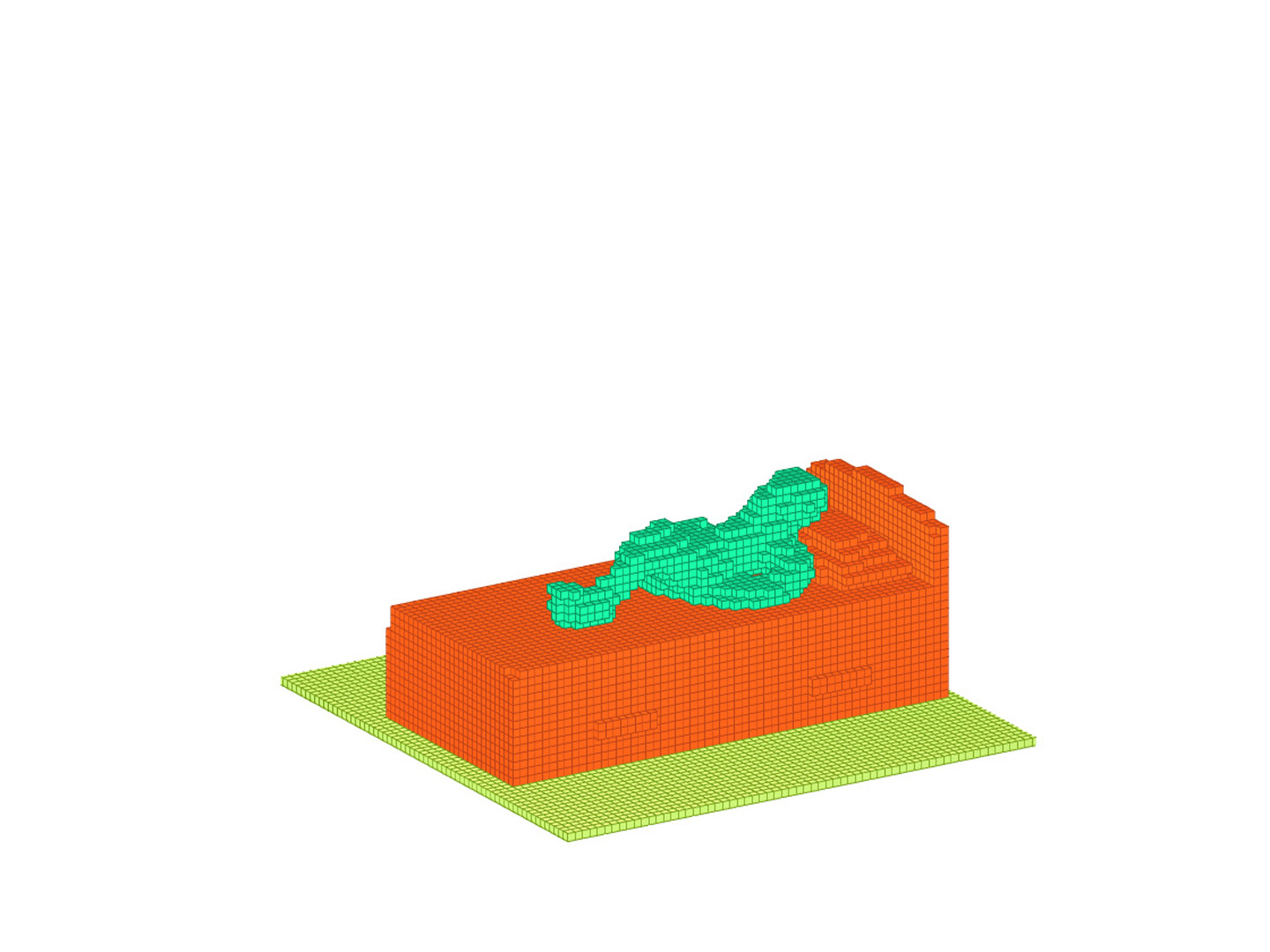

Humans can predict the functionality of an object even without any surroundings, since their knowledge and experience would allow them to “hallucinate” the interaction or usage scenarios involving the object. We develop predictive and generative deep convolutional neural networks to replicate this feat. Specifically, our work focuses on functionalities of man-made 3D objects characterized by human-object or object-object interactions. Our networks are trained on a database of scene contexts, called interaction contexts, each consisting of a central object and one or more surrounding objects, that represent object functionalities. Given a 3D object in isolation, our functional similarity network (fSIM-NET), a variation of the triplet network, is trained to predict the functionality of the object by inferring functionality-revealing interaction contexts. fSIM-NET is complemented by a generative network (iGEN-NET) and a segmentation network (iSEG-NET). iGEN-NET takes a single voxelized 3D object with a functionality label and synthesizes a voxelized surround, i.e., the interaction context which visually demonstrates the corresponding functionality. iSEG-NET further separates the interacting objects into different groups according to their interaction types.

References:

1. Yuri Boykov and Vladimir Kolmogorov. 2004. An experimental comparison of min-cut/max-flow algorithms for energy minimization in vision. IEEE transactions on pattern analysis and machine intelligence 26, 9 (2004), 1124–1137. Google ScholarDigital Library

2. Haoqiang Fan, Hao Su, and Leonidas Guibas. 2017. A Point Set Generation Network for 3D Object Reconstruction from a Single Image. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition. 2463–2471.Google ScholarCross Ref

3. Matthew Fisher, Daniel Ritchie, Manolis Savva, Thomas Funkhouser, and Pat Hanrahan. 2012. Example-based synthesis of 3D object arrangements. ACM Trans. on Graphics 31, 6 (2012), 135:1–11. Google ScholarDigital Library

4. Qiang Fu, Xiaowu Chen, Xiaotian Wang, Sijia Wen, Bin Zhou, and Hongbo Fu. 2017. Adaptive Synthesis of Indoor Scenes via Activity-associated Object Relation Graphs. ACM Trans. on Graphics 36, 6 (2017), 201:1–13. Google ScholarDigital Library

5. Rohit Girdhar, David F. Fouhey, Mikel Rodriguez, and Abhinav Gupta. 2016. Learning a Predictable and Generative Vector Representation for Objects. In Proc. Euro. Conf. on Computer Vision, Vol. 6. 484–499.Google ScholarCross Ref

6. Helmut Grabner, Juergen Gall, and Luc J. Van Gool. 2011. What makes a chair a chair?. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition. 1529–1536. Google ScholarDigital Library

7. Michelle R. Greene, Christopher Baldassano, Diane M. Beck, and Fei-Fei Li. 2016. Visual Scenes Are Categorized by Function. Journal of Experimental Psychology: General 145, 1 (2016), 82–94.Google ScholarCross Ref

8. Paul Guerrero, Niloy J. Mitra, and Peter Wonka. 2016. RAID: A Relation-augmented Image Descriptor. ACM Trans. on Graphics 35, 4 (2016), 46:1–12. Google ScholarDigital Library

9. X. Han, Z. Li, H. Huang, E. Kalogerakis, and Y. Yu. 2017. High-Resolution Shape Completion Using Deep Neural Networks for Global Structure and Local Geometry Inference. In Proc. Int. Conf. on Computer Vision. 85–93.Google Scholar

10. Ruizhen Hu, Wenchao Li, Oliver van Kaick, Ariel Shamir, Hao Zhang, and Hui Huang. 2017. Learning to Predict Part Mobility from a Single Static Snapshot. ACM Trans. on Graphics 36, 6 (2017), 227:1–13. Google ScholarDigital Library

11. Ruizhen Hu, Oliver van Kaick, Bojian Wu, Hui Huang, Ariel Shamir, and Hao Zhang. 2016. Learning How Objects Function via Co-Analysis of Interactions. ACM Trans. on Graphics 35, 4 (2016), 47:1–12. Google ScholarDigital Library

12. Ruizhen Hu, Chenyang Zhu, Oliver van Kaick, Ligang Liu, Ariel Shamir, and Hao Zhang. 2015. Interaction Context (ICON): Towards a Geometric Functionality Descriptor. ACM Trans. on Graphics 34, 4 (2015), 83:1–12. Google ScholarDigital Library

13. Max Jaderberg, Karen Simonyan, Andrew Zisserman, and koray kavukcuoglu. 2015. Spatial Transformer Networks. In NIPS. 2017–2025. Google ScholarDigital Library

14. Yun Jiang, Hema Koppula, and Ashutosh Saxena. 2013. Hallucinated Humans As the Hidden Context for Labeling 3D Scenes. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition. 2993–3000. Google ScholarDigital Library

15. Vladimir G. Kim, Siddhartha Chaudhuri, Leonidas Guibas, and Thomas Funkhouser. 2014. Shape2Pose: Human-Centric Shape Analysis. ACM Trans. on Graphics 33, 4 (2014), 120:1–12. Google ScholarDigital Library

16. Jun Li, Kai Xu, Siddhartha Chaudhuri, Ersin Yumer, Hao Zhang, and Leonidas Guibas. 2017. GRASS: Generative Recursive Autoencoders for Shape Structures. ACM Trans. on Graphics 36, 4 (2017), 52:1–14. Google ScholarDigital Library

17. Zhaoliang Lun, Evangelos Kalogerakis, and Alla Sheffer. 2015. Elements of Style: Learning Perceptual Shape Style Similarity. ACM Trans. on Graphics 34, 4 (2015), 84:1–14. Google ScholarDigital Library

18. Rui Ma, Honghua Li, Changqing Zou, Zicheng Liao, Xin Tong, and Hao Zhang. 2016. Action-Driven 3D Indoor Scene Evolution. ACM Trans. on Graphics 35, 6 (2016), 173:1–13. Google ScholarDigital Library

19. Jonathan Masci, Davide Boscaini, Michael M. Bronstein, and Pierre Vandergheynst. 2015. Geodesic Convolutional Neural Networks on Riemannian Manifolds. In IEEE Workshop on 3D Representation and Recognition. 832–840. Google ScholarDigital Library

20. Niloy Mitra, Michael Wand, Hao Zhang, Daniel Cohen-Or, and Martin Bokeloh. 2013. Structure-aware shape processing. In Eurographics State-of-the-art Report (STAR). 175–197.Google Scholar

21. Michael Pechuk, Octavian Soldea, and Ehud Rivlin. 2008. Learning function-based object classification from 3D imagery. Computer Vision and Image Understanding 110, 2 (2008), 173–191. Google ScholarDigital Library

22. S?ren Pirk, Vojtech Krs, Kaimo Hu, Suren Deepak Rajasekaran, Hao Kang, Bedrich Benes, Yusuke Yoshiyasu, and Leonidas J. Guibas. 2017. Understanding and Exploiting Object Interaction Landscapes. ACM Trans. on Graphics 36, 3 (2017), 31:1–14. Google ScholarDigital Library

23. Charles R. Qi, Li Yi, Hao Su, and Leonidas J. Guibas. 2017. PointNet+ + : Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In NIPS. 5105–5114.Google Scholar

24. Manolis Savva, Angel X. Chang, Pat Hanrahan, Matthew Fisher, and Matthias Nie?ner. 2014. SceneGrok: Inferring Action Maps in 3D Environments. ACM Trans. on Graphics 33, 6 (2014), 212:1–10. Google ScholarDigital Library

25. Manolis Savva, Angel X. Chang, Pat Hanrahan, Matthew Fisher, and Matthias Nie?ner. 2016. PiGraphs: Learning Interaction Snapshots from Observations. ACM Trans. on Graphics 35, 4 (2016), 139:1–12. Google ScholarDigital Library

26. Louise Stark and Kevin Bowyer. 1991. Achieving Generalized Object Recognition through Reasoning about Association of Function to Structure. IEEE Trans. Pattern Analysis & Machine Intelligence 13, 10 (1991), 1097–1104. Google ScholarDigital Library

27. Minhyuk Sung, Hao Su, Vladimir G. Kim, Siddhartha Chaudhuri, and Leonidas Guibas. 2017. ComplementMe: Weakly-supervised Component Suggestions for 3D Modeling. ACM Trans. on Graphics 36, 6 (2017), 226:1–12. Google ScholarDigital Library

28. Hanqing Wang, Wei Liang, and Lap-Fai Yu. 2017. Transferring Objects: Joint Inference of Container and Human Pose. In Proc. Int. Conf. on Computer Vision. 2952–2960.Google ScholarCross Ref

29. Jiang Wang, Yang Song, Thomas Leung, Chuck Rosenberg, Jingbin Wang, James Philbin, Bo Chen, and Ying Wu. 2014. Learning Fine-Grained Image Similarity with Deep Ranking. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition. 1386–1393. Google ScholarDigital Library

30. Yanzhen Wang, Kai Xu, Jun Li, Hao Zhang, Ariel Shamir, Ligang Liu, Zhiquan Cheng, and Yueshan Xiong. 2011. Symmetry Hierarchy of Man-Made Objects. Computer Graphics Forum (Proc. of Eurographics) 30, 2 (2011), 287–296.Google ScholarCross Ref

31. Jiajun Wu, Chengkai Zhang, Tianfan Xue, William T. Freeman, and Joshua B. Tenenbaum. 2016. Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling. In NIPS. 82–90. Google ScholarDigital Library

32. Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang, and J. Xiao. 2015. 3D ShapeNets: A Deep Representation for Volumetric Shapes. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition. 1912–1920.Google Scholar

33. Xi Zhao, Ruizhen Hu, Paul Guerrero, Niloy Mitra, and Taku Komura. 2016. Relationship templates for creating scene variations. ACM Trans. on Graphics 35, 6 (2016), 207:1–13. Google ScholarDigital Library

34. Xi Zhao, He Wang, and Taku Komura. 2014. Indexing 3D Scenes Using the Interaction Bisector Surface. ACM Trans. on Graphics 33, 3 (2014), 22:1–14. Google ScholarDigital Library

35. Youyi Zheng, Daniel Cohen-Or, and Niloy J. Mitra. 2013. Smart Variations: Functional Substructures for Part Compatibility. Computer Graphics Forum (Proc. of Eurographics) 32, 2pt2 (2013), 195–204.Google Scholar

36. Yuke Zhu, Alireza Fathi, and Li Fei-Fei. 2014. Reasoning About Object Affordances in a Knowledge Base Representation. In Proc. Euro. Conf. on Computer Vision, Vol. 2. 408–424.Google ScholarCross Ref

37. Yixin Zhu, Yibiao Zhao, and Song-Chun Zhu. 2015. Understanding tools: Task-oriented object modeling, learning and recognition. In Proc. IEEE Conf. on Computer Vision & Pattern Recognition. 2855–2864.Google ScholarCross Ref