“Optimizing walking controllers for uncertain inputs and environments” by Wang, Fleet and Hertzmann

Title:

- Optimizing walking controllers for uncertain inputs and environments

Presenter(s)/Author(s):

- Wang, Fleet and Hertzmann

Abstract:

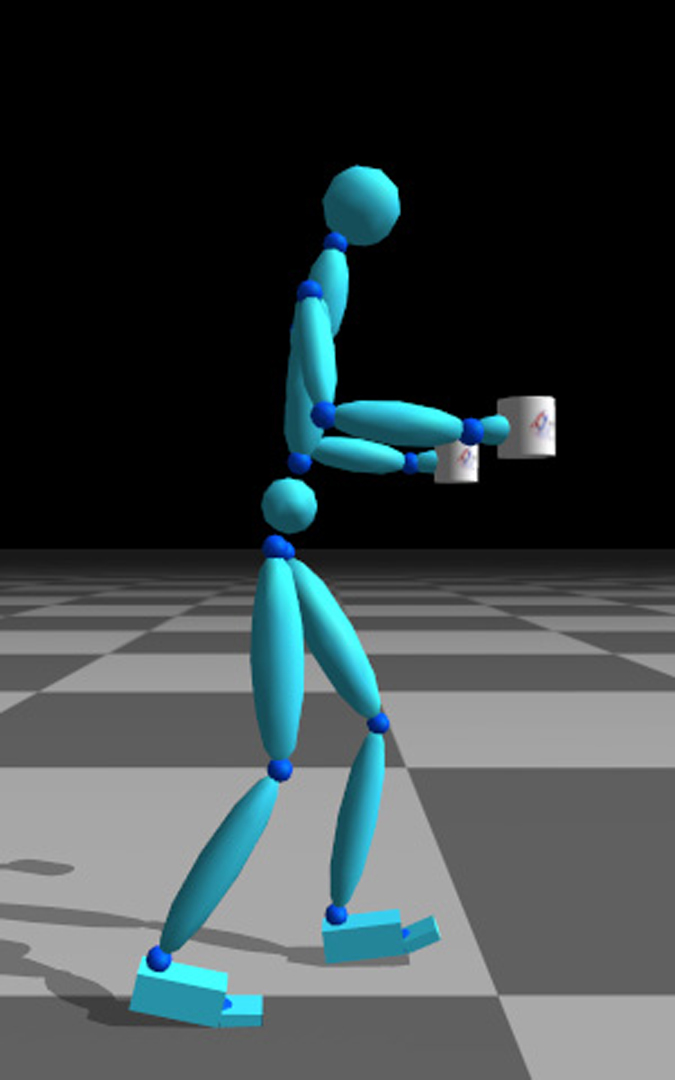

We introduce methods for optimizing physics-based walking controllers for robustness to uncertainty. Many factors, such as external forces, control torques, and user control inputs, cannot be known in advance and must be treated as uncertain. These variables are represented with probability distributions, and a return function scores the desirability of a single motion. Controller optimization entails maximizing the expected value of the return, which is estimated by Monte Carlo methods. We demonstrate examples with different sources of uncertainty and task constraints. Optimizing control strategies under uncertainty increases robustness and produces natural variations in style.
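The core computation described above can be illustrated with a minimal Python sketch. The expected return of controller parameters θ is approximated by averaging the return over N sampled values ε_i of the uncertain variables, E[R(θ)] ≈ (1/N) Σ_i R(θ, ε_i), and θ is then adjusted to increase that estimate. Every concrete choice here is an illustrative assumption and not the authors' method: the 1-D point mass standing in for a walking character, the PD gains θ = (kp, kd), the Gaussian push and actuation-noise distributions, the penalty weights in the return, and the random-search optimizer, which is only a stand-in. The helper names `simulate_return`, `sample_disturbances`, `expected_return`, and `optimize` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical toy stand-in for a walking controller: a 1-D point mass that
# must stay near x = 0 under a PD feedback law with parameters theta = (kp, kd).
DT = 0.01     # simulation time step in seconds (assumed)
STEPS = 200   # rollout length: 2 s of simulated time (assumed)

Sample = Tuple[float, List[float]]  # (external push, per-step actuation noise)


def simulate_return(theta: Sequence[float], push: float, noise: Sequence[float]) -> float:
    """Roll out one motion under one sampled disturbance and score it (the return)."""
    kp, kd = theta
    x, v = 0.0, push                      # push modeled as an initial velocity kick
    ret = 0.0
    for k in range(STEPS):
        u = -kp * x - kd * v              # PD control force
        u *= 1.0 + noise[k]               # multiplicative (signal-dependent) actuation noise
        v += u * DT                       # semi-implicit Euler integration
        x += v * DT
        if abs(x) > 1.0:                  # treat leaving |x| <= 1 as a fall
            return ret - 100.0            # large penalty; the rollout ends here
        ret -= (x * x + 1e-4 * u * u) * DT  # penalize deviation and control effort
    return ret


def sample_disturbances(n: int, seed: int = 0) -> List[Sample]:
    """Draw n joint samples of the uncertain variables from assumed Gaussians."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        push = rng.gauss(0.0, 1.0)                           # assumed N(0, 1) push
        noise = [rng.gauss(0.0, 0.1) for _ in range(STEPS)]  # assumed 10% actuation noise
        samples.append((push, noise))
    return samples


def expected_return(theta: Sequence[float], samples: Sequence[Sample]) -> float:
    """Monte Carlo estimate of E[R(theta)]: the mean return over the samples."""
    return sum(simulate_return(theta, p, n) for p, n in samples) / len(samples)


def optimize(samples: Sequence[Sample], iters: int = 100, seed: int = 1) -> Tuple[List[float], float]:
    """Maximize the estimate by random local search (a stand-in optimizer).

    The same samples are reused for every candidate (common random numbers),
    so differences in the estimate come from theta, not from resampling.
    """
    rng = random.Random(seed)
    best = [1.0, 0.5]                     # arbitrary initial gains
    best_val = expected_return(best, samples)
    for _ in range(iters):
        cand = [max(0.0, b + rng.gauss(0.0, 2.0)) for b in best]  # keep gains >= 0
        val = expected_return(cand, samples)
        if val > best_val:                # accept only improvements
            best, best_val = cand, val
    return best, best_val


if __name__ == "__main__":
    train = sample_disturbances(30, seed=0)
    theta, val = optimize(train)
    held_out = sample_disturbances(200, seed=123)  # fresh samples for a generalization check
    print("gains:", theta, "train estimate:", val,
          "held-out estimate:", expected_return(theta, held_out))
```

Reusing one fixed sample set for every candidate makes comparisons between candidates less noisy. However, the optimizer can then overfit to that particular set, which is why the `__main__` block re-evaluates the chosen gains on fresh held-out samples. The abstract does not say whether the original work uses this scheme.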
