“Mouth Gesture based Emotion Awareness and Interaction in Virtual Reality” by Zhang, Ciftci and Yin

Entry Number: 26

Title:

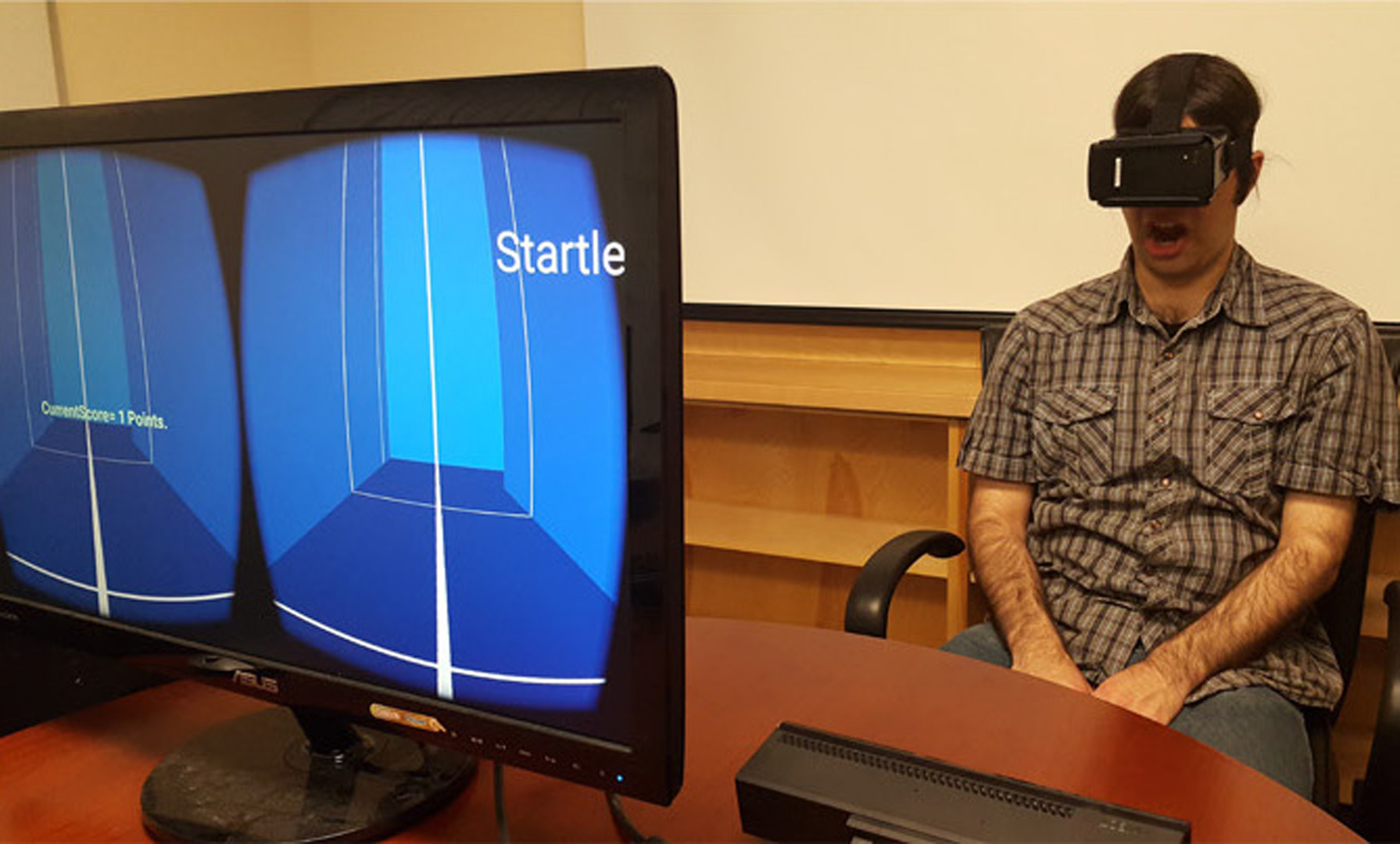

- Mouth Gesture based Emotion Awareness and Interaction in Virtual Reality

Abstract:

In recent years, Virtual Reality (VR) has become a new medium that provides users with an immersive experience. Events in VR engage our emotions more closely than those presented through other interfaces, and these emotional variations are reflected in our facial expressions. However, current VR systems concentrate on "giving" information to the user and ignore "receiving" the user's emotional state, even though this information contributes directly to media content rating and to the user experience. In addition, traditional controllers become difficult to use because the headset obscures the user's view. Hand- and head-gesture-based control is one option [Cruz-Neira et al. 1993]. However, users must wear sensor devices to ensure control accuracy, and they tire easily. Although face tracking achieves accurate results in both 2D and 3D scenarios, current state-of-the-art systems fail when half of the face is occluded by the VR headset, because their shape models are trained on data from the whole face.