“Motion synthesis from annotations”

Abstract:

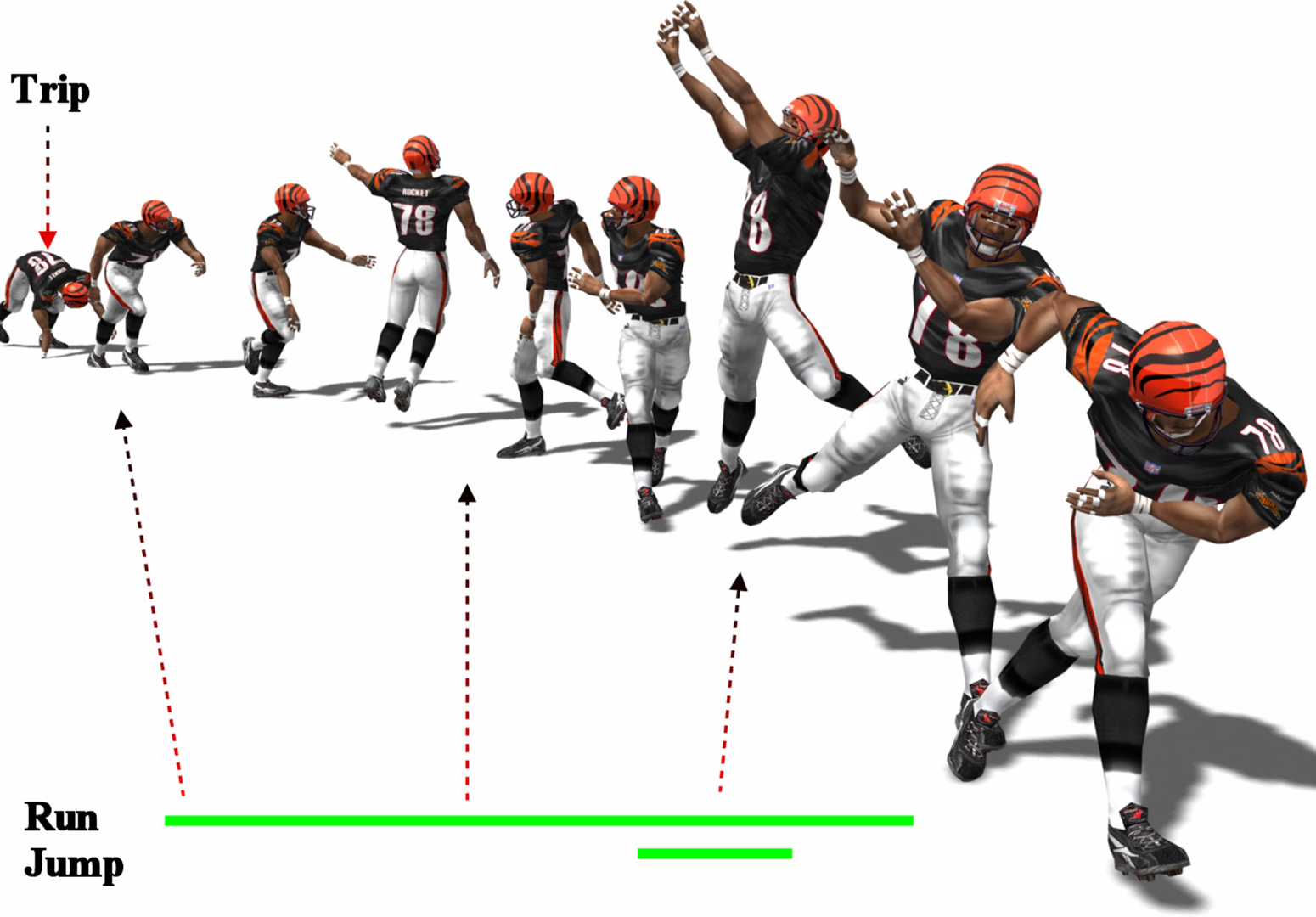

This paper describes a framework that allows a user to synthesize human motion while retaining control of its qualitative properties. The user paints a timeline with annotations, such as walk, run or jump, drawn from a vocabulary that the user chooses freely. The system then assembles frames from a motion database so that the final motion performs the specified actions at the specified times. The motion can also be forced to pass through particular configurations at particular times, and to reach a particular position and orientation. Annotations can be painted positively (for example, must run), negatively (for example, may not run backwards) or as don’t-care. The system uses a novel search method, based on dynamic programming at several scales, to obtain a solution efficiently enough that authoring is interactive. Our results demonstrate that the method can generate smooth, natural-looking motion.

The annotation vocabulary can be chosen to fit the application, and it allows the specification of composite motions (for example, running and jumping simultaneously). The process requires a collection of motion data that has been annotated with the chosen vocabulary. This paper also describes an effective tool, based on repeated use of support vector machines, that allows a user to annotate a large collection of motions quickly and easily so that they can be used with the synthesis algorithm.
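The abstract describes the search only at a high level. As a rough illustration of how painted annotations can drive a frame-selection search, the sketch below runs a single-scale, Viterbi-style dynamic program: it picks one database frame per output time step, paying a penalty whenever a frame violates a "must" or "must not" annotation, and paying a transition cost whenever it does not simply play the next frame of the same clip. This is not the paper's multi-scale method, and it ignores the pose, position and orientation constraints. The `Frame` fields (including the one-dimensional `pose` stand-in), the cost functions, the `penalty` and `jump_cost` values and the function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Sequence

# Hypothetical annotation modes for one label at one time step.
MUST, MUST_NOT, DONT_CARE = "must", "must_not", "dont_care"


@dataclass(frozen=True)
class Frame:
    clip: str               # which captured clip this frame came from
    pose: float             # hypothetical 1-D stand-in for a full body configuration
    labels: FrozenSet[str]  # annotations attached to this database frame


def annotation_cost(frame: Frame, request: Dict[str, str], penalty: float = 10.0) -> float:
    """Penalize a frame for each painted annotation it violates at one time step."""
    cost = 0.0
    for label, mode in request.items():
        has_label = label in frame.labels
        if mode == MUST and not has_label:
            cost += penalty
        elif mode == MUST_NOT and has_label:
            cost += penalty
        # DONT_CARE labels add nothing.
    return cost


def transition_cost(db: Sequence[Frame], i: int, j: int, jump_cost: float = 1.0) -> float:
    """Playing the next frame of the same clip is free; any other jump pays a fixed cost plus pose mismatch."""
    if j == i + 1 and db[i].clip == db[j].clip:
        return 0.0
    return jump_cost + abs(db[i].pose - db[j].pose)


def synthesize(db: Sequence[Frame], timeline: Sequence[Dict[str, str]]) -> List[int]:
    """Single-scale dynamic programming: return one database frame index per output time step."""
    n = len(db)
    cost = [annotation_cost(f, timeline[0]) for f in db]  # best cost ending at each frame
    back: List[List[int]] = []  # back[t - 1][j] = best predecessor of frame j at step t
    for t in range(1, len(timeline)):
        new_cost = [float("inf")] * n
        ptr = [0] * n
        for j in range(n):
            a = annotation_cost(db[j], timeline[t])
            for i in range(n):
                c = cost[i] + transition_cost(db, i, j) + a
                if c < new_cost[j]:
                    new_cost[j], ptr[j] = c, i
        cost = new_cost
        back.append(ptr)
    # Trace back from the cheapest final frame.
    j = min(range(n), key=lambda k: cost[k])
    path = [j]
    for ptr in reversed(back):
        j = ptr[j]
        path.append(j)
    path.reverse()
    return path


# Usage: a tiny database with one walk clip and one run clip.
db = [Frame("walk_clip", p, frozenset({"walk"})) for p in (0.0, 0.1, 0.2)] + [
    Frame("run_clip", p, frozenset({"run"})) for p in (0.3, 0.4, 0.5)
]
timeline = [{"walk": MUST}] * 3 + [{"run": MUST, "walk": MUST_NOT}] * 3
print(synthesize(db, timeline))  # → [0, 1, 2, 3, 4, 5]
```

The cost of this naive recurrence grows as (time steps) × (database frames)², which is one reason a practical system would need something smarter, such as the multi-scale search the abstract mentions.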
