“Markerless human motion transfer” by Cheung, Baker, Hodgins and Kanade

Title:

- Markerless human motion transfer

Session/Category Title: Motion

Abstract:

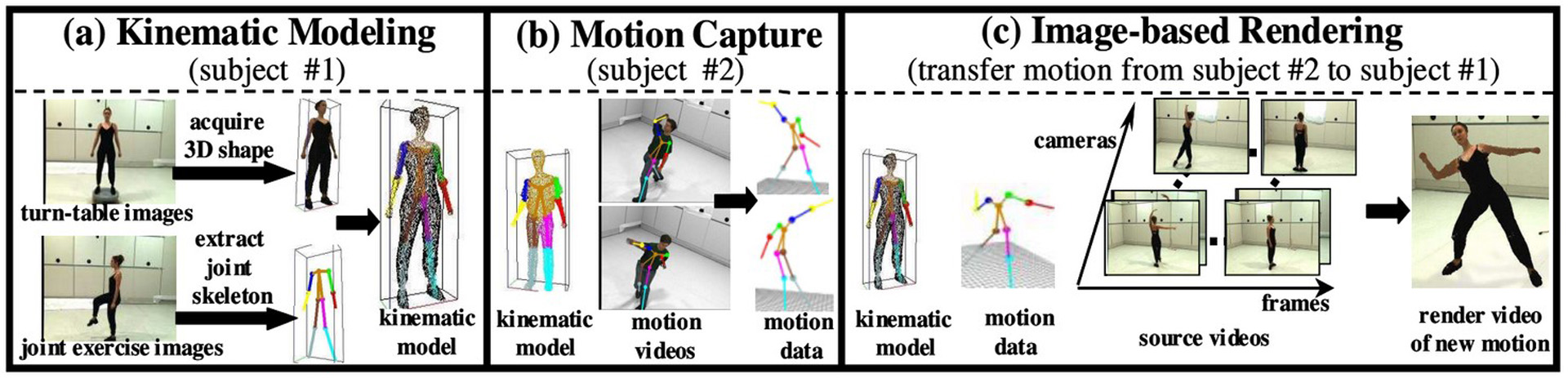

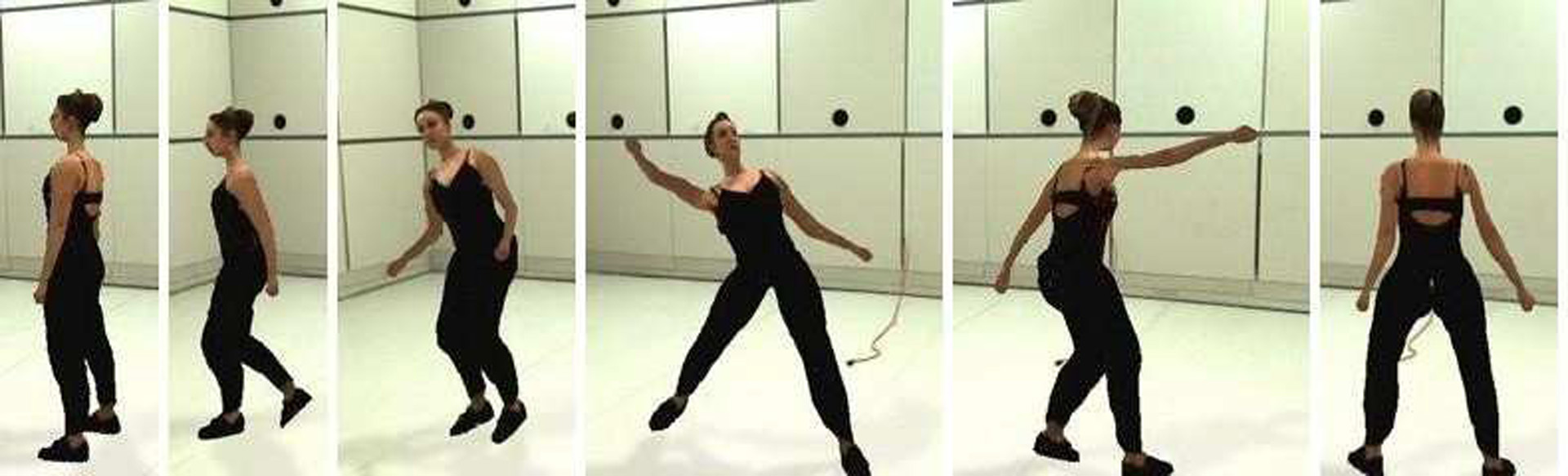

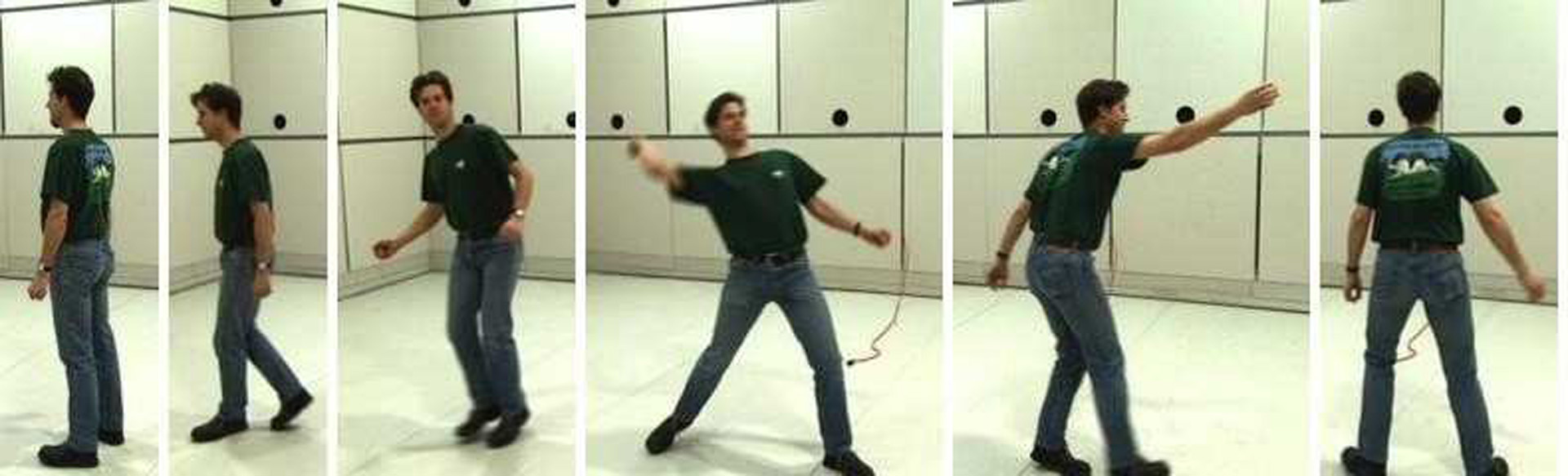

Generating animations of real-life characters with realistic motion and appearance is one of the most difficult problems in computer graphics. The ability to create realistic videos of a person performing new motions is essential to game development and to special effects in movie production. In this work, we describe a markerless system that transfers articulated motion from one person to another: given video sequences of two people performing two different motions, it generates videos of each person performing the other person's motion. The system is based on computer vision techniques and uses no markers at any step.
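The abstract does not say how the recovered motion is applied to the second person. One common way to transfer articulated motion, assumed here purely for illustration, is to keep the source person's joint angles and replay them on the target person's skeleton, so the target's own limb lengths are preserved. The sketch below shows this on a hypothetical planar joint chain. `forward_kinematics` and `transfer_motion` are illustrative names, not part of the authors' system, and the sketch omits the computer vision steps (estimating motion from video, rendering the person's appearance) entirely.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def forward_kinematics(bone_lengths: Sequence[float],
                       joint_angles: Sequence[float],
                       root: Point = (0.0, 0.0)) -> List[Point]:
    """Return the joint positions of a planar chain.

    Each angle (radians) is relative to the parent bone's direction,
    so the same angles produce the same pose on skeletons of any size.
    """
    if len(bone_lengths) != len(joint_angles):
        raise ValueError("need exactly one joint angle per bone")
    x, y = root
    heading = 0.0  # accumulated absolute direction of the current bone
    positions = [(x, y)]
    for length, angle in zip(bone_lengths, joint_angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


def transfer_motion(source_angles_per_frame: Sequence[Sequence[float]],
                    target_bone_lengths: Sequence[float],
                    target_root: Point = (0.0, 0.0)) -> List[List[Point]]:
    """Replay the source's per-frame joint angles on the target's skeleton.

    Hypothetical helper: the motion (angles) comes from person A, while
    the body proportions (bone lengths) come from person B.
    """
    return [forward_kinematics(target_bone_lengths, angles, target_root)
            for angles in source_angles_per_frame]


if __name__ == "__main__":
    # Two frames of source motion for a two-bone "arm": straight, then raised.
    source_motion = [[0.0, 0.0], [math.pi / 2, 0.0]]
    target_arm = [2.0, 1.0]  # target person's upper and lower arm lengths
    for frame in transfer_motion(source_motion, target_arm):
        print([(round(px, 3), round(py, 3)) for px, py in frame])
```

Working in joint angles rather than joint positions is what lets the same motion fit a differently proportioned body: copying the source's positions directly would stretch or shrink the target's limbs.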
